Cataloguing Strategic Innovations and Publications

Designing and Implementing an Enterprise Network for Security, Efficiency, and Resilience

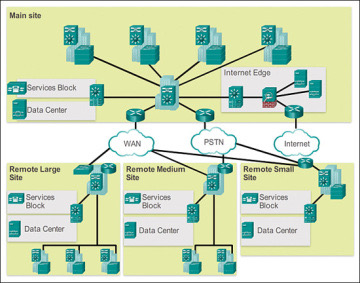

The success of any enterprise depends on the efficiency and security of its network. A well-designed and implemented enterprise network provides the backbone for all the organization's operations, ensuring the seamless flow of data, communication, and collaboration. However, designing and implementing an enterprise network can be a daunting task, with several factors to consider, including security, efficiency, and resilience. In this blog, we will explore the major players in this space and discuss whether to use a single vendor or hybrid network approach.

Major Players in the Enterprise Network Space:

There are several major players in the enterprise network space, including Cisco, Juniper Networks, Hewlett Packard Enterprise (through its Aruba Networks business), and Extreme Networks. These companies provide a range of networking solutions, from switches, routers, firewalls, and wireless access points to network management software.

Single Vendor vs. Hybrid Network Approach:

When designing and implementing an enterprise network, you can either use a single vendor or a hybrid network approach. A single-vendor approach involves using network solutions from one vendor, while a hybrid network approach combines solutions from multiple vendors.

A single-vendor approach can be beneficial in terms of ease of management, as all the solutions come from one vendor, ensuring compatibility and integration. It can also simplify support and maintenance, as you only need to deal with one vendor for all your network needs.

However, a single-vendor approach can also be restrictive, as you are limited to the solutions that vendor provides. You may also be locked into that vendor's pricing and upgrade cycles, reducing your flexibility.

On the other hand, a hybrid network approach provides more flexibility and choice, allowing you to select the best solutions from multiple vendors to meet your specific needs. It can also reduce costs, since you can pick the most economical option for each part of your network.

However, a hybrid network approach can also be more complex, as you need to ensure compatibility and integration between solutions from different vendors. It can also require more support and maintenance, as you may need to deal with multiple vendors for different aspects of your network.

Designing and Implementing an Enterprise Network:

When designing and implementing an enterprise network, you need to consider several factors, including:

- Security: Ensure that your network is secure, with firewalls, intrusion detection and prevention systems, and other security measures in place.

- Efficiency: Design your network for maximum efficiency, with optimized routing, load balancing, and Quality of Service (QoS) features.

- Resilience: Ensure that your network is resilient, with redundant links and backup systems in place to minimize downtime.

- Scalability: Design your network to scale as your organization grows, with the ability to add new devices and services as needed.
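As a rough illustration of why redundant links matter for resilience, the Python sketch below estimates the availability of a connection served by parallel links. It assumes the links fail independently, which real networks only approximate (shared conduits, power feeds, or line cards can fail together). The 99.9% per-link figure is a hypothetical example, not vendor data.

```python
def parallel_availability(link_availability: float, links: int) -> float:
    """Availability of a path that stays up while at least one of `links`
    redundant links is up, assuming independent failures."""
    if not 0.0 <= link_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if links < 1:
        raise ValueError("need at least one link")
    # The path is down only when every redundant link is down at the same time.
    all_down = (1.0 - link_availability) ** links
    return 1.0 - all_down


def downtime_minutes_per_year(availability: float) -> float:
    """Expected downtime per 365-day year for a given availability."""
    return (1.0 - availability) * 365 * 24 * 60


if __name__ == "__main__":
    for n in (1, 2):
        a = parallel_availability(0.999, n)  # hypothetical 99.9% per-link availability
        print(f"{n} link(s): availability={a:.6f}, "
              f"downtime={downtime_minutes_per_year(a):.1f} min/year")
```

Under these assumptions, adding a second 99.9% link cuts expected downtime from roughly 526 minutes a year to about half a minute. Correlated failures would make the real improvement smaller.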

Designing and implementing an enterprise network can be a complex and challenging task, but with the right planning and approach, you can ensure maximum security, efficiency, and resilience. Whether you choose a single vendor or hybrid network approach, it's important to consider the major players in this space and select the solutions that best meet your organization's specific needs.

Additionally, it's essential to have a clear understanding of your organization's requirements, such as the number of users, types of devices, and the applications that will be running on the network. This will help you select the appropriate network solutions that can handle your organization's needs.

Furthermore, as the threat landscape continues to evolve, it's important to stay up-to-date with the latest security technologies and best practices. You should regularly assess your network's security posture, perform vulnerability scans and penetration tests, and apply the latest security patches and updates to ensure that your network is protected against the latest threats.

Moreover, it's critical to have a comprehensive disaster recovery plan in place in case of unexpected events such as natural disasters, cyberattacks, or system failures. A disaster recovery plan should include backup systems and data, as well as procedures for restoring critical systems and services.

In short, designing and implementing an enterprise network is a crucial aspect of any organization's success. By ensuring maximum security, efficiency, and resilience, you can provide a solid foundation for all your operations. Whether you choose a single vendor or hybrid network approach, it's essential to carefully consider the major players in this space and select the appropriate solutions that meet your specific needs. Additionally, regularly assessing your network's security posture and having a comprehensive disaster recovery plan in place will help you mitigate risks and ensure business continuity in the face of unexpected events.

Finally, it's important to keep up with industry trends and advancements in network technology. For example, software-defined networking (SDN) and network function virtualization (NFV) are emerging technologies that can bring significant benefits to enterprise networks. SDN enables network administrators to centrally manage and automate network configuration, while NFV allows network services such as firewalls and load balancers to be virtualized and run on commodity hardware, reducing costs and increasing flexibility.

Overall, designing and implementing an enterprise network requires careful planning, consideration of multiple factors, and a continuous focus on improving security, efficiency, and resilience. By staying up-to-date with the latest technologies and best practices, you can ensure that your network remains a strong foundation for your organization's success.

Here are some of the leading companies in the IT networking space, along with a brief list of their key products:

- Cisco Systems - Cisco is one of the world's largest networking companies, providing a range of hardware and software products for network infrastructure, security, collaboration, and cloud computing. Key products include:

- Catalyst switches

- Nexus switches

- ASR routers

- ISR routers

- Meraki wireless access points

- Umbrella cloud security platform

- Juniper Networks - Juniper is a leading provider of network hardware and software solutions, with a focus on high-performance routing, switching, and security. Key products include:

- MX Series routers

- QFX Series switches

- EX Series switches

- SRX Series firewalls

- Contrail SD-WAN

- Huawei - Huawei is a major player in the global networking market, offering a broad portfolio of products for enterprise and carrier customers. Key products include:

- CloudEngine switches

- NetEngine routers

- AirEngine Wi-Fi access points

- HiSecEngine security solutions

- FusionInsight big data platform

- HPE Aruba - HPE Aruba specializes in wireless networking solutions, with a strong focus on mobility and IoT. Key products include:

- ArubaOS switches

- ClearPass network access control

- AirWave network management

- Instant On wireless access points

- Aruba Central cloud management platform

- Extreme Networks - Extreme Networks provides a range of network solutions for enterprise and service provider customers, with an emphasis on simplicity and automation. Key products include:

- ExtremeSwitching switches

- ExtremeRouting routers

- ExtremeWireless Wi-Fi access points

- ExtremeControl network access control

- ExtremeCloud cloud management platform

- Fortinet - Fortinet is a leading provider of cybersecurity solutions, including network security, endpoint security, and cloud security. Key products include:

- FortiGate firewalls

- FortiAnalyzer analytics platform

- FortiClient endpoint protection

- FortiWeb web application firewall

- FortiSIEM security information and event management

These are just a few examples of the many companies and products in the IT networking space.

Here are some leading companies in the IT networking space that offer proxy solutions, along with a brief list of their key products:

- F5 Networks - F5 Networks offers a range of networking and security solutions, including application delivery controllers (ADCs) and web application firewalls (WAFs) that can act as proxies. Key products include:

- BIG-IP ADCs

- Advanced WAF

- SSL Orchestrator

- DNS services

- Silverline cloud-based WAF and DDoS protection

- Symantec (enterprise security business now part of Broadcom) - Symantec provides a range of security solutions, including web security and cloud access security brokers (CASBs) that can function as proxies. Key products include:

- Web Security Service

- CloudSOC CASB

- ProxySG secure web gateway

- Advanced Secure Gateway

- SSL Visibility Appliance

- Blue Coat (acquired by Symantec in 2016, now part of Broadcom) - Blue Coat offers a range of proxy solutions for web security and application performance. Key products include:

- ProxySG secure web gateway

- Advanced Secure Gateway

- SSL Visibility Appliance

- WebFilter URL filtering

- MACH5 WAN optimization

- Zscaler - Zscaler provides cloud-based security and networking solutions, including web proxies and secure web gateways. Key products include:

- Zscaler Internet Access (ZIA)

- Zscaler Private Access (ZPA)

- Zscaler Cloud Firewall

- Zscaler Cloud Sandbox

- Zscaler B2B

- Palo Alto Networks - Palo Alto Networks offers a range of security and networking solutions, including a next-generation firewall (NGFW) that can act as a proxy. Key products include:

- Panorama network security management

- NGFWs (PA Series)

- Prisma Access secure access service edge (SASE)

- GlobalProtect remote access VPN

- Cortex XDR extended detection and response

Many companies offer proxy solutions in IT networking. Here are a few of them and a brief overview of their products:

1. Cisco: Cisco offers a range of proxy solutions, including Cisco Web Security, Cisco Cloud Web Security, and Cisco Email Security. These solutions provide advanced threat protection, URL filtering, and data loss prevention features.

2. F5 Networks: F5 Networks offers a range of proxy solutions, including F5 BIG-IP Local Traffic Manager and F5 Advanced Web Application Firewall. These solutions provide load balancing, SSL offloading, and application security features.

3. Blue Coat: Blue Coat offers a range of proxy solutions, including Blue Coat ProxySG and Blue Coat Web Security Service. These solutions provide web filtering, malware protection, and data loss prevention features.

4. Fortinet: Fortinet offers a range of proxy solutions, including FortiGate and FortiWeb. These solutions provide web filtering, SSL inspection, and application security features.

5. Palo Alto Networks: Palo Alto Networks offers a range of proxy solutions, including Palo Alto Networks Next-Generation Firewall and Palo Alto Networks Threat Prevention. These solutions provide advanced threat protection, URL filtering, and data loss prevention features.

When comparing these products, it's important to consider factors such as their feature set, ease of use, scalability, and cost. Some products may be better suited for small businesses, while others may be better suited for large enterprises. Additionally, some products may offer more advanced features than others, such as the ability to inspect encrypted traffic. It's also important to consider the level of support offered by each vendor, as well as their track record in terms of security and reliability. Ultimately, the best proxy solution will depend on the specific needs of the organization and the budget available.

It's difficult to provide a comprehensive comparison of all the products from the different companies mentioned, as each solution has its strengths and weaknesses depending on specific use cases and requirements. However, here are some general pros and cons of the products mentioned:

- Cisco Systems

- Pros: Cisco is a market leader in the networking space, with a broad range of hardware and software solutions to meet various needs. Their products are known for their reliability, scalability, and interoperability. Cisco is also investing in emerging technologies such as software-defined networking (SDN) and the Internet of Things (IoT).

- Cons: Cisco's products can be expensive, and their licensing models can be complex. Customers may require specialized expertise to configure and maintain Cisco solutions, which can add to the overall cost.

- Juniper Networks

- Pros: Juniper is a leading provider of high-performance networking solutions, with a strong focus on security. Their products are known for their reliability and scalability, and Juniper has a reputation for innovation in areas such as software-defined networking (SDN) and automation. Juniper's solutions are also designed to integrate well with other vendors' products.

- Cons: Juniper's product portfolio may be narrower than that of competitors such as Cisco. Some customers may also find Juniper's CLI (command-line interface) less intuitive than the GUI-based (graphical user interface) management tools offered by other vendors.

- Huawei

- Pros: Huawei offers a comprehensive portfolio of networking products, including hardware, software, and services. They are known for their competitive pricing and innovation, particularly in emerging markets. Huawei's solutions are designed to be easy to use and manage, with a focus on user experience.

- Cons: Huawei has faced scrutiny from some governments over security concerns, which may be a factor in some customers' purchasing decisions. In addition, some customers may have concerns about the quality and reliability of Huawei's products, particularly in comparison to established market leaders.

- HPE Aruba

- Pros: HPE Aruba is a leading provider of wireless networking solutions, with a strong focus on mobility and IoT. Their products are designed to be easy to use and manage, with a focus on user experience. HPE Aruba also offers a range of cloud-based solutions for centralized management and analytics.

- Cons: HPE Aruba's product portfolio may be more limited compared to competitors such as Cisco. Some customers may also find their pricing less competitive compared to other vendors.

- Extreme Networks

- Pros: Extreme Networks offers a range of networking solutions for enterprise and service provider customers, with an emphasis on simplicity and automation. Their products are designed to be easy to use and manage, with a focus on user experience. Extreme Networks also offers cloud-based solutions for centralized management and analytics.

- Cons: Extreme Networks' product portfolio may be more limited compared to competitors such as Cisco. Some customers may also find their pricing less competitive compared to other vendors.

- Fortinet

- Pros: Fortinet is a leading provider of cybersecurity solutions, with a range of products for network security, endpoint security, and cloud security. Their products are designed to be easy to use and manage, with a focus on integration and automation. Fortinet is also investing in emerging technologies such as artificial intelligence (AI) and machine learning (ML).

- Cons: Some customers may find Fortinet's solutions less scalable compared to other vendors. In addition, Fortinet's licensing models can be complex, which may add to the overall cost.

- F5 Networks

- Pros: F5 Networks is a leading provider of application delivery and security solutions, including web application firewalls (WAFs) and proxies. Their products are known for their performance, scalability, and security. F5 Networks is also investing in emerging technologies such as cloud-native

Here is a comparison of some popular proxy products in the market:

- Squid Proxy

- Pros: Squid is a free and open-source proxy server that is widely used for caching web content and improving network performance. It is highly configurable and supports a range of protocols, including HTTP, HTTPS, FTP, and more. Squid is also compatible with many operating systems and can be used in various deployment scenarios.

- Cons: Squid may require more technical expertise to set up and configure compared to commercial solutions. It also may not have as many advanced features as some commercial products.

- Nginx

- Pros: Nginx is a high-performance web server and reverse proxy that is widely used in production environments. It is known for its scalability, reliability, and speed. Nginx can be used as a standalone proxy or in conjunction with other web servers such as Apache. It also supports a wide range of protocols and can be used for load balancing and content caching.

- Cons: Nginx may require more technical expertise to set up and configure than some commercial products. Some users may also find its documentation less comprehensive than that of other solutions.

- HAProxy

- Pros: HAProxy is a popular open-source load balancer and reverse proxy server that is known for its high performance and reliability. It supports a range of protocols, including TCP, HTTP, and HTTPS, and can be used for load balancing, content caching, and more. HAProxy also has an active user community and a comprehensive documentation repository.

- Cons: HAProxy may require more technical expertise to set up and configure compared to some commercial products. It may also not have as many advanced features as some other solutions.

- F5 BIG-IP

- Pros: F5 BIG-IP is a commercial proxy server that is widely used in enterprise environments. It is known for its advanced features such as traffic management, application security, and content caching. F5 BIG-IP can be used to optimize network traffic, improve application performance, and provide high availability for applications.

- Cons: F5 BIG-IP may be more expensive compared to other solutions, and may require specialized expertise to configure and maintain. Some users may also find the licensing model to be complex.

- Blue Coat ProxySG

- Pros: Blue Coat ProxySG is a commercial proxy server that is widely used in enterprise environments. It is known for its advanced features such as web filtering, application visibility and control, and threat protection. Blue Coat ProxySG can be used to secure and optimize network traffic, improve application performance, and provide advanced security features such as malware protection and DLP.

- Cons: Blue Coat ProxySG may be more expensive compared to other solutions, and may require specialized expertise to configure and maintain. Some users may also find the licensing model to be complex.
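Nginx, HAProxy, and F5 BIG-IP all distribute requests across a pool of back-end servers. The Python sketch below illustrates two common selection policies, round-robin and least connections, in simplified form. The class and server names are hypothetical. Real load balancers add health checks, weights, and session persistence on top of this.

```python
from itertools import cycle


class RoundRobinPool:
    """Hands out back-end servers in a fixed rotating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("pool needs at least one server")
        self._next = cycle(servers)

    def pick(self):
        return next(self._next)


class LeastConnectionsPool:
    """Sends each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("pool needs at least one server")
        self.active = {server: 0 for server in servers}

    def pick(self):
        # min() returns the first server with the lowest count,
        # so ties go to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1


if __name__ == "__main__":
    rr = RoundRobinPool(["app1", "app2", "app3"])
    print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

    lc = LeastConnectionsPool(["app1", "app2"])
    first = lc.pick()   # app1 (tie, earliest listed wins)
    second = lc.pick()  # app2
    lc.release(first)
    print(lc.pick())    # app1, since it now has no active connections
```

Round-robin is simplest when requests cost roughly the same. Least connections adapts better when some requests hold connections much longer than others.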

It's difficult to determine which company is the safest to partner with for networking and proxy needs, as each company has its strengths and weaknesses.

That being said, when selecting a company to partner with, it's important to consider factors such as the company's reputation, track record, and level of customer support. It's also important to consider the specific needs of your organization and whether the company's products and services align with those needs.

Some well-known companies in the networking and proxy space with strong reputations and a history of providing reliable and secure products and services include Cisco, Juniper Networks, Palo Alto Networks, and Fortinet. However, it's important to conduct thorough research and evaluation before making a decision, to ensure that the chosen partner meets your specific needs and security requirements.

When it comes to product stability and reliability for networking and proxy needs, several companies have a strong reputation for providing high-quality products and services. Here are a few examples:

- Cisco: Cisco is one of the most well-known companies in the networking space, and is known for its high-quality routers, switches, firewalls, and other networking equipment. Cisco's products are known for their reliability, performance, and security, and the company has a strong reputation for providing excellent customer support.

- Juniper Networks: Juniper Networks is another well-known company in the networking space, and is known for its high-performance routers, switches, and security products. Juniper's products are known for their stability and reliability, and the company has a strong focus on security, making it a good choice for organizations that prioritize security.

- Palo Alto Networks: Palo Alto Networks is a company that specializes in network security and is known for its firewalls, security appliances, and other security products. Palo Alto's products are known for their reliability and performance, and the company has a strong focus on threat prevention and detection, making it a good choice for organizations that need strong security protections.

- Fortinet: Fortinet is another company that specializes in network security, and is known for its firewalls, security appliances, and other security products. Fortinet's products are known for their reliability and performance, and the company has a strong focus on threat prevention and detection, making it a good choice for organizations that prioritize security.

All of these companies have a strong reputation for providing stable and reliable products and services for networking and proxy needs. The best company to partner with will depend on the specific needs and priorities of your organization, so it's important to evaluate each company carefully and choose the one that is the best fit for your needs.

It is possible to pick products from different companies to establish a network. Many organizations choose to do this to build a network that best fits their specific needs and requirements.

When selecting products from different companies, it's important to ensure that they are compatible with each other and will work together seamlessly. This may require additional configuration and integration work to ensure that the various products can communicate and share information effectively.

It's also important to ensure that the products selected from each company meet the security and performance requirements of the organization. This may involve researching each product and evaluating its capabilities, features, and security protocols.

In summary, it is possible to mix and match products from different companies to establish a network, but it's important to ensure that the products selected are compatible with each other and meet the needs and requirements of the organization.

A Comprehensive Guide to Cisco Network Protocols: From Basics to Advanced

Sanjay K Mohindroo

In today's technologically advanced world, networking plays a vital role in the success of any business or organization. Cisco, one of the world's leading networking technology providers, supports a wide range of network protocols that are essential for managing and maintaining a network. Some, such as EIGRP, HSRP, and VTP, were developed by Cisco, while most are open industry standards. This guide is intended for IT leaders and networking professionals who want a comprehensive understanding of Cisco networking, and it lists and explains the protocols they most need to be aware of.

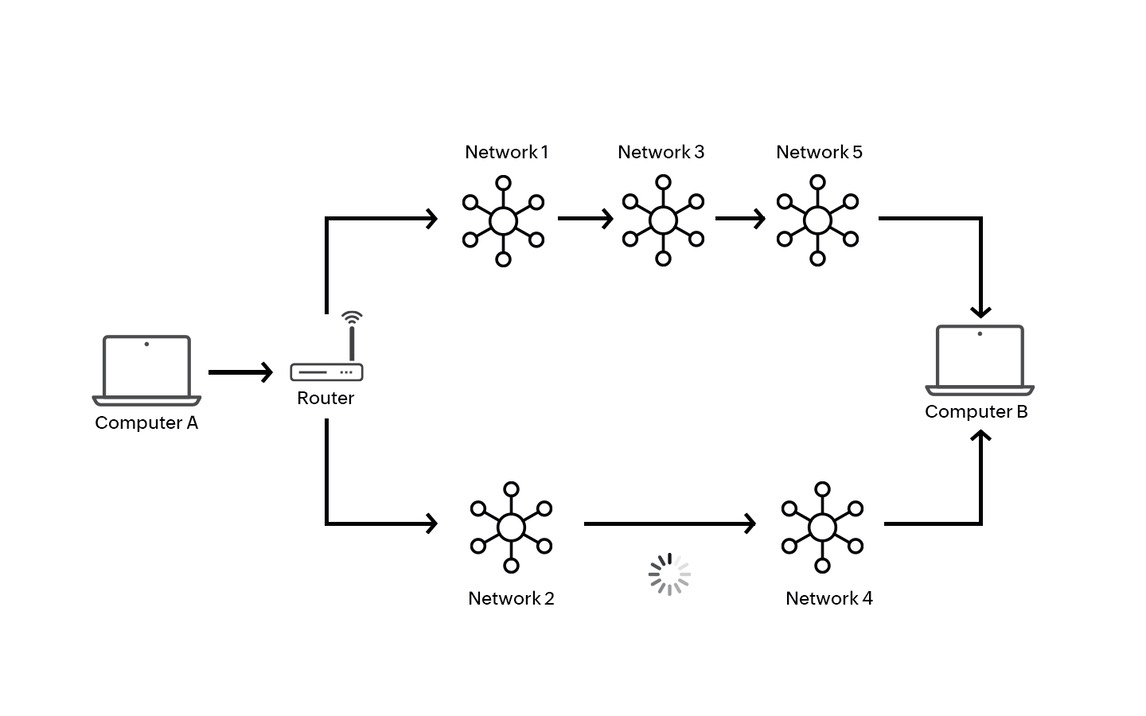

- Routing Protocols: Routing protocols enable routers to exchange information with each other and determine the best path for data transmission. Three interior routing protocols are commonly used on Cisco routers:

- Routing Information Protocol (RIP): a distance-vector protocol that sends the entire routing table to its neighbors every 30 seconds.

- Open Shortest Path First (OSPF): a link-state protocol that exchanges link-state advertisements to construct a topology map of the network.

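- Enhanced Interior Gateway Routing Protocol (EIGRP): an advanced distance-vector protocol, often described by Cisco as a hybrid, that uses the Diffusing Update Algorithm (DUAL) to compute loop-free best paths and sends partial updates only when the topology changes, instead of periodic full tables.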

- Switching Protocols: Switching protocols control how Layer 2 switches build and maintain a loop-free, consistently configured switched network. Two commonly used switching protocols on Cisco switches are:

- Spanning Tree Protocol (STP): a protocol that prevents network loops by placing redundant ports in a blocking state.

- VLAN Trunking Protocol (VTP): a protocol that synchronizes VLAN configuration information between switches.

- Wide Area Network (WAN) Protocols: WAN protocols are used to connect remote networks over a wide geographic area. Two common encapsulations for serial WAN links on Cisco routers are:

- Point-to-Point Protocol (PPP): a protocol used to establish a direct connection between two devices over a serial link.

- High-Level Data Link Control (HDLC): a protocol used to encapsulate data over serial links. Cisco's HDLC variant is the default encapsulation on Cisco serial interfaces.

- Security Protocols: Security protocols are used to secure the network and prevent unauthorized access. Two widely used security protocols are:

- Internet Protocol Security (IPSec): a protocol suite used to secure IP packets by authenticating and encrypting them.

- Secure Sockets Layer (SSL): a protocol used to establish a secure connection between a client and a server. SSL has been superseded by Transport Layer Security (TLS), which should be used in its place.

- Multi-Protocol Label Switching (MPLS): MPLS is a forwarding technique in which routers forward packets based on short labels assigned at the edge of the MPLS network, rather than performing a full destination IP lookup at every hop. Although it was originally promoted for faster forwarding, it is now used mainly for traffic engineering and for building MPLS VPNs.

- Border Gateway Protocol (BGP): BGP is a protocol used to route data between different autonomous systems (AS). It is commonly used by internet service providers to exchange routing information.

- Hot Standby Router Protocol (HSRP): HSRP is a protocol used to provide redundancy for IP networks. It allows for two or more routers to share a virtual IP address, so if one router fails, the other can take over seamlessly.

- Quality of Service (QoS): QoS is a set of techniques, rather than a single protocol, used to prioritize network traffic so that important data receives the bandwidth it needs. It is commonly used for voice and video applications to minimize latency and jitter and keep transmission quality high.
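RIP's distance-vector behaviour is easiest to see in code. The Python sketch below shows, in simplified form, how a router might merge a neighbor's advertised table into its own. It adds one hop for the link to the neighbor, accepts a route only if it is better or comes from the current next hop, and treats 16 hops as unreachable, as RIP does. Timers, split horizon, and route poisoning are deliberately left out, and the function, prefix, and router names are hypothetical.

```python
RIP_INFINITY = 16  # RIP treats a metric of 16 hops as unreachable


def merge_rip_update(table, neighbor, advertised):
    """Merge a neighbor's advertised routes into `table`.

    table:      dict prefix -> (metric, next_hop) for the local router
    neighbor:   name of the neighbor that sent the update
    advertised: dict prefix -> metric as seen by that neighbor
    Returns the set of prefixes whose entry changed.
    """
    changed = set()
    for prefix, metric in advertised.items():
        new_metric = min(metric + 1, RIP_INFINITY)  # one extra hop to reach the neighbor
        current = table.get(prefix)
        if current is None:
            if new_metric < RIP_INFINITY:
                table[prefix] = (new_metric, neighbor)
                changed.add(prefix)
        elif current[1] == neighbor:
            # News from the current next hop is always believed, even if worse.
            if current[0] != new_metric:
                table[prefix] = (new_metric, neighbor)
                changed.add(prefix)
        elif new_metric < current[0]:
            table[prefix] = (new_metric, neighbor)
            changed.add(prefix)
    return changed


if __name__ == "__main__":
    routes = {}
    merge_rip_update(routes, "R2", {"10.1.0.0/24": 1, "10.2.0.0/24": 3})
    merge_rip_update(routes, "R3", {"10.2.0.0/24": 1})  # shorter path via R3 wins
    print(routes)
```

Because a neighbor advertising 15 hops yields 16 here, which means unreachable, RIP networks are limited to a diameter of 15 hops.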

In conclusion, Cisco network protocols are essential for maintaining and managing a network. Understanding these protocols is crucial for IT networking folks and IT leadership. By implementing Cisco network protocols, organizations can ensure that their network is secure, efficient, and reliable. Relevant examples and case studies can provide a better understanding of how these protocols work in real-life scenarios.

Here are examples of some of the Cisco network protocols mentioned above:

- Routing Protocols: Let's take a look at OSPF, which is a link-state protocol that exchanges link-state advertisements to construct a topology map of the network. This protocol is commonly used in enterprise networks to provide faster convergence and better scalability. For example, if there is a link failure in the network, OSPF routers can quickly recalculate the best path and update the routing table, ensuring that data is still transmitted efficiently.

- Switching Protocols: The Spanning Tree Protocol (STP) is a protocol that prevents network loops by blocking redundant paths. Switches first elect a root bridge. Each switch then keeps its lowest-cost path toward the root active and places its remaining redundant ports in a blocking state, so no loop can form. For example, if two switches are connected via multiple links, STP keeps one link forwarding (the one with the lowest path cost, with port priority and port number as tie-breakers) and blocks the others to prevent broadcast storms and other network issues.

- Wide Area Network (WAN) Protocols: The Point-to-Point Protocol (PPP) is a protocol used to establish a direct connection between two devices over a serial link. PPP provides authentication, compression, and error detection features, making it an ideal protocol for connecting remote sites. For example, if a company has a branch office in a remote location, it can use PPP to connect the remote site to the main office via a leased line.

- Security Protocols: Internet Protocol Security (IPSec) is a protocol suite used to secure IP packets by authenticating and encrypting them. It provides confidentiality, integrity, and authentication, making it a strong choice for securing data transmissions over the Internet. For example, if an organization needs to send sensitive data over the internet, it can use IPSec to encrypt and authenticate the data, so that unauthorized parties cannot read it or modify it without detection.

- Quality of Service (QoS): QoS is a set of techniques used to prioritize network traffic so that important data receives the necessary bandwidth. For example, if an organization is running voice and video applications on its network, it can use QoS to prioritize that traffic over less critical traffic, minimizing the latency and jitter that would otherwise degrade the quality of the transmission.
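OSPF routers choose best paths by running Dijkstra's shortest-path-first (SPF) algorithm over their link-state database. The Python sketch below runs Dijkstra over a small hypothetical four-router topology, then removes a link to mimic the OSPF link-failure example above. Real OSPF also handles areas, equal-cost multipath, and LSA flooding, all omitted here.

```python
import heapq


def spf(graph, source):
    """Dijkstra's shortest-path-first over `graph`.

    graph: dict router -> dict neighbor -> link cost (OSPF-style metric)
    Returns dict router -> (total cost, first hop from `source`).
    """
    best = {source: (0, None)}
    queue = [(0, source)]
    done = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in done:
            continue  # stale queue entry; a cheaper path was already finalized
        done.add(node)
        for neighbor, link_cost in graph.get(node, {}).items():
            new_cost = cost + link_cost
            # Leaving the source, the first hop is the neighbor itself;
            # otherwise it is inherited from the node we came through.
            first_hop = neighbor if node == source else best[node][1]
            if neighbor not in best or new_cost < best[neighbor][0]:
                best[neighbor] = (new_cost, first_hop)
                heapq.heappush(queue, (new_cost, neighbor))
    return best


def remove_link(graph, a, b):
    """Simulate a failed link by deleting it in both directions."""
    graph[a].pop(b, None)
    graph[b].pop(a, None)


if __name__ == "__main__":
    topology = {
        "R1": {"R2": 10, "R3": 10},
        "R2": {"R1": 10, "R4": 10},
        "R3": {"R1": 10, "R4": 20},
        "R4": {"R2": 10, "R3": 20},
    }
    print(spf(topology, "R1")["R4"])  # (20, 'R2')
    remove_link(topology, "R2", "R4")
    print(spf(topology, "R1")["R4"])  # (30, 'R3') after the failure
```

After the simulated failure, R1 still reaches R4, now through R3 at a higher cost. OSPF reaches this result by rerunning SPF when it receives the updated link-state information.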

These are just a few examples of how Cisco network protocols work in real-life scenarios. By understanding how these protocols work and implementing them correctly, organizations can ensure that their network is secure, efficient, and reliable.

Here are some examples of how organizations can implement Cisco network protocols in their network infrastructure:

- Routing Protocols: An organization can implement the OSPF protocol by configuring OSPF on its routers and setting up areas to limit the scope of routing updates. It can also influence path selection between routers by adjusting link costs. By default, Cisco derives the OSPF cost from interface bandwidth alone (delay is used by EIGRP, not OSPF).

- Switching Protocols: To implement the Spanning Tree Protocol (STP), an organization can configure STP on their switches to prevent network loops. They can also use Rapid Spanning Tree Protocol (RSTP) or Multiple Spanning Tree Protocol (MSTP) to reduce the convergence time and improve network performance.

- Wide Area Network (WAN) Protocols: An organization can implement the Point-to-Point Protocol (PPP) by configuring PPP on their routers to establish a direct connection between two devices over a serial link. They can also use PPP with authentication, compression, and error detection features to improve the security and efficiency of their network.

- Security Protocols: To implement the Internet Protocol Security (IPSec) protocol, an organization can configure IPSec on their routers and firewalls to encrypt data transmissions and provide secure communication over the Internet. They can also use IPSec with digital certificates to authenticate users and devices.

- Quality of Service (QoS): An organization can implement Quality of Service (QoS) by configuring QoS on their switches and routers to prioritize network traffic. They can also use QoS with Differentiated Services Code Point (DSCP) to classify and prioritize traffic based on its type and importance.
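On Cisco IOS, the default OSPF interface cost is the reference bandwidth (100 Mbps by default) divided by the interface bandwidth, truncated to an integer, with a minimum of 1. The Python sketch below reproduces that calculation under those assumptions. It also shows why all links of 100 Mbps and faster receive a cost of 1 unless the reference bandwidth is raised with the "auto-cost reference-bandwidth" router command, which takes a value in Mbps.

```python
DEFAULT_REFERENCE_MBPS = 100  # Cisco IOS default OSPF reference bandwidth


def ospf_cost(interface_kbps: int, reference_mbps: int = DEFAULT_REFERENCE_MBPS) -> int:
    """Default OSPF cost for an interface: truncated ratio, never below 1.

    interface_kbps matches the unit of the IOS interface 'bandwidth' command.
    """
    if interface_kbps <= 0:
        raise ValueError("interface bandwidth must be positive")
    reference_kbps = reference_mbps * 1000
    return max(1, reference_kbps // interface_kbps)


if __name__ == "__main__":
    links = {
        "T1 serial": 1_544,
        "FastEthernet": 100_000,
        "GigabitEthernet": 1_000_000,
        "10GigabitEthernet": 10_000_000,
    }
    for name, kbps in links.items():
        print(f"{name:18} default cost={ospf_cost(kbps):3}  "
              f"with 10000 Mbps reference={ospf_cost(kbps, 10_000):5}")
```

With the default reference, a T1 gets the familiar cost of 64 while Fast Ethernet, Gigabit, and 10 Gigabit links all tie at 1. Raising the reference to 10,000 Mbps separates them again (100, 10, and 1). The same value should be configured on every OSPF router so that costs stay consistent.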

These are just a few examples of how organizations can implement Cisco network protocols in their network infrastructure. By implementing these protocols correctly, organizations can ensure that their network is secure, efficient, and reliable, providing the necessary tools to support business-critical applications and services.

Here's a tutorial on how to configure some of the Cisco network protocols on routers and switches.

- Routing Protocols:

Step 1: Enter global configuration mode using the "configure terminal" command.

Step 2: Start an OSPF process with the "router ospf [process-id]" command and, optionally, set a stable router ID with "router-id [router-id]".

Step 3: Assign interfaces to OSPF areas with "network [network-address] [wildcard-mask] area [area-id]". The separate "area [area-id]" command sets area properties, such as making an area a stub area.

Step 4: If the default bandwidth-based cost does not produce the desired path selection, set a manual cost in interface configuration mode with "ip ospf cost [cost]".

Step 5: Verify the OSPF configuration using the "show ip ospf", "show ip ospf neighbor", and "show ip route ospf" commands.
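The OSPF steps can be assembled into a concrete command sequence. The Python helper below is a hypothetical sketch that renders an IOS-style OSPF configuration from a few parameters. The process ID, router ID, prefixes, and interface names are placeholders, and the output should be checked against the documentation for your IOS release before use.

```python
import ipaddress


def render_ospf_config(process_id, router_id, networks, interface_costs=None):
    """Return IOS-style OSPF configuration lines (sketch only).

    networks:        dict of prefix (e.g. "10.0.0.0/24") -> OSPF area number
    interface_costs: optional dict of interface name -> manual OSPF cost
    """
    lines = ["configure terminal", f"router ospf {process_id}", f" router-id {router_id}"]
    for prefix, area in networks.items():
        net = ipaddress.ip_network(prefix)
        # IOS 'network' statements take a wildcard mask, the inverse of the netmask.
        lines.append(f" network {net.network_address} {net.hostmask} area {area}")
    lines.append("exit")
    for name, cost in (interface_costs or {}).items():
        lines += [f"interface {name}", f" ip ospf cost {cost}", "exit"]
    lines.append("end")
    return lines


if __name__ == "__main__":
    config = render_ospf_config(
        process_id=1,
        router_id="1.1.1.1",
        networks={"10.0.0.0/24": 0, "10.1.0.0/16": 1},
        interface_costs={"GigabitEthernet0/1": 10},
    )
    print("\n".join(config))
```

Generating configuration from a small data model like this keeps wildcard masks and area assignments consistent across routers, which is the same idea SDN controllers apply at larger scale.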

- Switching Protocols:

Step 1: Enter global configuration mode with the "configure terminal" command. The Spanning Tree Protocol (STP) runs by default on Cisco Catalyst switches, so no separate command is needed to turn it on.

Step 2: Influence root bridge election by setting the bridge priority with "spanning-tree vlan [vlan-id] priority [priority]" (a multiple of 4096) or by using the "spanning-tree vlan [vlan-id] root primary" macro.

Step 3: Adjust port priority and cost in interface configuration mode with the "spanning-tree vlan [vlan-id] port-priority [priority]" and "spanning-tree vlan [vlan-id] cost [cost]" commands.

Step 4: Verify the STP configuration with the "show spanning-tree" command.
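Root bridge election comes down to comparing bridge IDs: the lowest priority wins, and the lowest MAC address breaks ties. The Python sketch below models that comparison, assuming the extended system ID is in use (so the advertised priority is the configured priority plus the VLAN ID). The switch names and MAC addresses are hypothetical.

```python
def bridge_id(priority: int, vlan_id: int, mac: str) -> tuple[int, int]:
    """Return a comparable bridge ID: (priority + VLAN ID, MAC as an integer)."""
    # Configured priorities must be multiples of 4096 in the range 0-61440.
    if priority % 4096 != 0 or not 0 <= priority <= 61440:
        raise ValueError("priority must be a multiple of 4096 between 0 and 61440")
    mac_value = int(mac.replace(":", "").replace(".", ""), 16)
    return (priority + vlan_id, mac_value)


def elect_root(switches: dict[str, tuple[int, str]], vlan_id: int) -> str:
    # The switch with the numerically lowest bridge ID becomes the root bridge.
    return min(switches, key=lambda name: bridge_id(switches[name][0], vlan_id, switches[name][1]))


# Hypothetical switches: name -> (configured priority, MAC address).
switches = {
    "core1": (24576, "00:1a:2b:3c:4d:5e"),    # e.g. lowered by "root primary"
    "access1": (32768, "00:00:0c:11:22:33"),  # default priority
    "access2": (32768, "00:00:0c:11:22:34"),
}
print(elect_root(switches, vlan_id=10))  # core1
```

If every switch kept the default priority of 32768, the one with the lowest MAC address would win, which is rarely the switch you want as root; that is the reason to set the priority explicitly.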

- Wide Area Network (WAN) Protocols:

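Step 1: Enter global configuration mode with the "configure terminal" command, select the serial interface with "interface [interface-name]", and enable the Point-to-Point Protocol (PPP) on it with "encapsulation ppp".

Step 2: Configure PPP authentication with the "ppp authentication {chap | pap}" command. For CHAP, each router also needs a "username [peer-hostname] password [secret]" entry that holds the shared secret.

Step 3: Optionally bundle several links with the "ppp multilink" command and limit fragment delay with "ppp multilink fragment delay [milliseconds]".

Step 4: Verify the link with the "show interfaces [interface-name]" command and, if multilink is in use, "show ppp multilink".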
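CHAP never sends the shared secret across the link. Per RFC 1994, the authenticator sends a random challenge with a one-octet identifier, and the peer replies with an MD5 hash of the identifier, the secret, and the challenge. The Python sketch below shows that exchange; the secret is a hypothetical placeholder, and on a Cisco router it would be the password in the "username" entry.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP response value per RFC 1994: MD5(identifier || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


# Authenticator: pick an identifier and a random challenge, send both to the peer.
identifier = 1
challenge = os.urandom(16)
secret = b"example-secret"  # hypothetical shared secret configured on both routers

# Peer: computes the response from its copy of the secret and sends it back.
response = chap_response(identifier, secret, challenge)

# Authenticator: recomputes the expected value and compares in constant time.
expected = chap_response(identifier, secret, challenge)
print("authenticated:", hmac.compare_digest(response, expected))
```

Because the challenge changes on every exchange, a captured response cannot simply be replayed later, which is the main advantage of CHAP over PAP's clear-text password.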

- Security Protocols:

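Step 1: Enter global configuration mode on the router or firewall with the "configure terminal" command.

Step 2: Define the IKE (ISAKMP) policy and the peer's pre-shared key with "crypto isakmp policy [priority]" and "crypto isakmp key [pre-shared-key] address [peer-address]", then define how traffic is protected with "crypto ipsec transform-set [set-name] [transforms]".

Step 3: Create an extended access list that identifies the traffic to encrypt, for example "access-list [acl-number] permit ip [source-network] [wildcard] [destination-network] [wildcard]". Tie everything together with "crypto map [map-name] [seq-num] ipsec-isakmp", followed by "set peer [peer-address]", "set transform-set [set-name]", and "match address [acl-number]", and apply the map to the outside interface with "crypto map [map-name]".

Step 4: Verify the IPsec configuration with the "show crypto map" and "show crypto ipsec sa" commands.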
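IOS access lists use wildcard masks (the bitwise inverse of a subnet mask) rather than prefix lengths, which is a common source of mistakes when writing the crypto ACL. This Python sketch builds such an entry from CIDR notation using the standard `ipaddress` module; the ACL number and subnets are hypothetical examples.

```python
import ipaddress


def crypto_acl_line(acl_number: int, source: str, destination: str) -> str:
    """Build an extended ACL entry matching all IP traffic between two subnets."""
    # Numbered extended IP ACLs use the ranges 100-199 and 2000-2699.
    if not (100 <= acl_number <= 199 or 2000 <= acl_number <= 2699):
        raise ValueError("extended IP ACL numbers are 100-199 or 2000-2699")
    src = ipaddress.ip_network(source)
    dst = ipaddress.ip_network(destination)
    # hostmask is the wildcard mask, e.g. 0.0.0.255 for a /24.
    return (f"access-list {acl_number} permit ip "
            f"{src.network_address} {src.hostmask} "
            f"{dst.network_address} {dst.hostmask}")


print(crypto_acl_line(101, "10.1.1.0/24", "10.2.2.0/24"))
# access-list 101 permit ip 10.1.1.0 0.0.0.255 10.2.2.0 0.0.0.255
```

The peer router needs the mirror image of this entry (source and destination swapped) for the tunnel to negotiate cleanly.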

- Quality of Service (QoS):

Step 1: Enter global configuration mode with the "configure terminal" command. On routers, the Modular QoS CLI (MQC) needs no global enable command; some older Catalyst switches require "mls qos" first.

Step 2: Set up the class map and its match criteria with the "class-map [class-name]" and "match [criteria]" commands (for example, "match dscp ef").

Step 3: Create the policy map with "policy-map [policy-name]", reference the class with "class [class-name]", and configure traffic shaping with "shape average [bandwidth]".

Step 4: Apply the QoS policy to the interface by using the "service-policy output [policy-name]" command.

Step 5: Verify the QoS configuration by using the "show policy-map interface [interface-name]" command.
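Traffic shapers and policers are usually described in terms of a token bucket: tokens refill at the committed rate, and a packet may be sent only if enough tokens are available. The Python sketch below is a generic token bucket for illustration, not Cisco's internal implementation; the rate and burst values are arbitrary examples.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Generic token bucket: tokens (bits) refill at the committed rate."""
    rate_bps: float    # committed information rate (CIR), bits per second
    burst_bits: float  # bucket depth (committed burst), bits
    tokens: float = field(init=False)
    last_time: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.tokens = self.burst_bits  # start with a full bucket

    def conforms(self, now: float, packet_bits: int) -> bool:
        # Add tokens for the time elapsed since the last check, capped at the bucket depth.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last_time = now
        if self.tokens >= packet_bits:
            self.tokens -= packet_bits
            return True
        # Non-conforming: a shaper would queue (delay) the packet; a policer would drop or re-mark it.
        return False


bucket = TokenBucket(rate_bps=8_000, burst_bits=12_000)
print(bucket.conforms(0.0, 12_000))  # True: the full bucket covers the burst
print(bucket.conforms(0.5, 12_000))  # False: only 4,000 bits have refilled
print(bucket.conforms(1.5, 12_000))  # True: the bucket is back at its 12,000-bit cap
```

The difference between "shape average" and policing lies only in what happens on the False branch: shaping buffers the excess and smooths the output, while policing discards or re-marks it immediately.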

These are just some basic steps for configuring common protocols on Cisco routers and switches. Depending on your specific network requirements, you may need to configure additional settings or features. Always make sure to test and verify your configuration to ensure that it is working as expected.

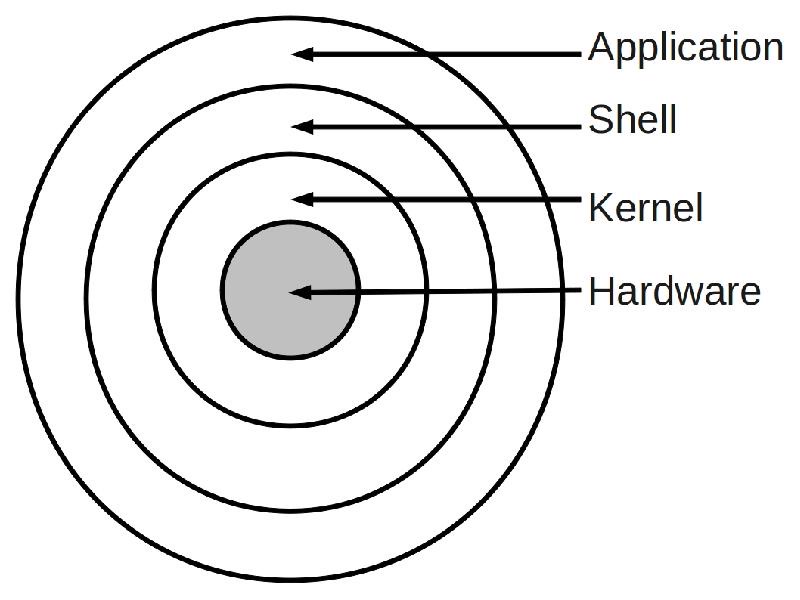

Cisco's networking devices, such as routers and switches, run on an operating system (OS) called Cisco IOS (Internetwork Operating System). IOS is a proprietary OS that is specifically designed to run on Cisco's networking hardware.

IOS provides the networking infrastructure with a wide range of features, including routing, switching, and security protocols. It also includes a command-line interface (CLI) that allows administrators to configure and manage the device's settings.

One of the key advantages of IOS is its flexibility: it is offered in different feature sets and license levels, so administrators can run an image that includes only the capabilities a device needs, and newer variants such as IOS XE and IOS XR use a more modular software architecture. This helps keep the OS streamlined and efficient and gives administrators flexibility in managing network devices.

In addition to the traditional IOS, Cisco also offers a range of specialized operating systems for specific devices or environments, such as IOS XR for service provider networks or IOS XE for enterprise networks.

Overall, IOS is a reliable and flexible operating system that has been proven to be effective in managing and securing Cisco's networking devices. Its CLI is widely used by network administrators around the world, making it a valuable tool for managing networks of all sizes and complexities.

Cisco IOS has far too many commands to explain each one in detail here. Instead, here is an overview of some of the essential commands and their functions:

- enable: This command enters privileged EXEC mode, which provides access to configuration commands and advanced system settings.

- show: This command displays information about the system configuration, interface status, and device performance.

- configure terminal: This command enters global configuration mode, which allows users to configure the device settings and protocols.

- interface: This command configures individual interfaces on the device, such as Ethernet or serial ports.

- ip address: This command assigns IP addresses to network interfaces.

- hostname: This command sets the device hostname, which is used for identification purposes.

- ping: This command sends ICMP echo requests to test network connectivity.

- traceroute: This command traces the path that packets take from the source to the destination.

- access-list: This command configures access control lists (ACLs) to control traffic flow and filter packets.

- shutdown: This command administratively disables an interface (or, in some protocol configuration modes, a protocol); "no shutdown" re-enables it.

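- reload: This command restarts the device, which is useful for troubleshooting or for reverting to the saved startup configuration. Any changes not saved with "copy running-config startup-config" are lost on reload.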

These commands are just a few examples of the many commands available in Cisco IOS. Each command has specific syntax and options, and it's essential to use them correctly to avoid configuration errors and network issues. Cisco provides extensive documentation and training resources for users to learn about the commands and their functions. Network administrators must understand the basics of IOS commands to effectively manage and maintain their networks.
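As a sketch of how several of these commands fit together, the Python function below renders a minimal IOS configuration snippet from a hostname, an interface, and an address in CIDR notation. The hostname, interface name, and address are hypothetical, and the function only produces text; it does not connect to a device.

```python
import ipaddress


def basic_interface_config(hostname: str, interface: str, address: str) -> str:
    """Render a minimal IOS snippet: set the hostname and bring up one IPv4 interface."""
    iface = ipaddress.ip_interface(address)
    if iface.version != 4:
        raise ValueError("the 'ip address' command takes an IPv4 address")
    lines = [
        "configure terminal",
        f"hostname {hostname}",
        f"interface {interface}",
        # IOS expects a dotted-decimal subnet mask, not a prefix length.
        f" ip address {iface.ip} {iface.netmask}",
        " no shutdown",
        "end",
    ]
    return "\n".join(lines)


print(basic_interface_config("R1", "GigabitEthernet0/0", "192.168.1.1/24"))
```

The output would be entered from privileged EXEC mode (after "enable"), and saved afterwards with "copy running-config startup-config" so that it survives a reload.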

The Evolution and Future of Networking: Connecting the World

Networking has revolutionized the way we connect. From the telegraph to 5G, we explore its history, present, and future.

In today's digital age, networking has become an integral part of our daily lives. From social media platforms to online banking, we rely on networks to connect us to people and information from around the world. The evolution of networking has been one of the most transformative technological advancements in modern history, shaping the way we communicate, conduct business, and connect. In this blog, we will discuss the history, evolution, present stage, and future of networking, and how it has impacted individuals, businesses, and governments.

History and Evolution

Networking has come a long way since the early days of communication. In the 19th century, telegraph systems were used to transmit messages across long distances. This was followed by the telephone, which revolutionized communication by allowing people to talk to each other in real time, regardless of their location. In the mid-20th century, computer networks such as ARPANET (1969) were developed, allowing computers to communicate with each other over long distances.

The opening of the internet to public and commercial use in the 1990s brought about a new era of networking. The internet allowed people to connect on a global scale, and the development of the World Wide Web made it easy to access information and services from anywhere in the world. The growth of social media platforms, mobile devices, and cloud computing has further accelerated the evolution of networking.

Present Stage

Today, networking is an essential part of our daily lives. We use networks to communicate with friends and family, access information and entertainment, conduct business, and even control our homes. Social media platforms like Facebook, Twitter, and Instagram allow us to connect with people around the world and share our experiences. Mobile devices like smartphones and tablets have made it easy to stay connected on the go, while cloud computing has made it possible to access data and services from anywhere in the world.

Businesses and governments also rely heavily on networking to operate efficiently. Networks allow businesses to connect with customers, partners, and suppliers around the world, and to collaborate on projects in real time. Governments use networks to communicate with citizens, manage infrastructure, and provide essential services like healthcare and education.

Future

The future of networking is bright, with new technologies promising to bring about even more transformative changes. One of the most exciting developments is the emergence of 5G networks, which will offer faster speeds, lower latency, and greater reliability than current networks. This will enable new applications like autonomous vehicles, virtual and augmented reality, and smart cities.

Other emerging technologies like the Internet of Things (IoT), artificial intelligence (AI), and blockchain are also poised to revolutionize networking. IoT devices will enable the creation of smart homes, smart cities, and even smart factories, while AI will help us better manage and analyze the vast amounts of data generated by these devices. Blockchain technology, on the other hand, will enable secure and transparent transactions between parties, without the need for intermediaries.

Conclusion

In conclusion, networking has come a long way since its early days, and it has become an essential part of our daily lives. Networks have helped individuals connect, businesses operate efficiently, and governments provide essential services. As we look to the future, new technologies promise to bring about even more transformative changes, and it is an exciting time to be part of the networking industry.

The advancements in networking technology have made our lives simpler and more effective. They have enabled us to access information, communicate with each other, and carry out transactions from anywhere in the world. Networking technology has also facilitated the growth of e-commerce, allowing businesses to sell products and services online and reach customers across the globe.

In addition, networking technology has made it easier to work remotely and collaborate with colleagues in different locations. This has become particularly important in the wake of the COVID-19 pandemic, which has forced many businesses and individuals to work from home.

The benefits of networking technology are not limited to individuals and businesses. Governments have also been able to leverage networking technology to provide essential services to citizens. For example, telemedicine has made it possible for people to receive medical care remotely, while e-learning platforms have made education more accessible to people around the world.

There are many different types of networks, but some of the most common ones include:

- Local Area Network (LAN): A LAN is a network that connects devices in a small geographical area, such as a home, office, or school. It typically uses Ethernet cables or Wi-Fi to connect devices and is often used for file sharing and printing.

- Wide Area Network (WAN): A WAN is a network that connects devices across a larger geographical area, such as multiple cities or even countries. It uses a combination of technologies such as routers, switches, and leased lines to connect devices and is often used for communication between different branches of a company.

- Metropolitan Area Network (MAN): A MAN is a network that covers a larger area than a LAN but is smaller than a WAN, such as a city or a campus. It may use technologies such as fiber optic cables or microwave links to connect devices.

- Personal Area Network (PAN): A PAN is a network that connects devices within a personal space, for example linking a smartphone or tablet to a wearable device such as a smartwatch or fitness tracker.

- Wireless Local Area Network (WLAN): A WLAN is a LAN that uses wireless technology such as Wi-Fi to connect devices.

- Storage Area Network (SAN): A SAN is a specialized network that provides access to storage devices such as disk arrays and tape libraries.

- Virtual Private Network (VPN): A VPN is a secure network that uses encryption and authentication technologies to allow remote users to access a private network over the internet.

- Cloud Network: A cloud network is a network of servers and storage devices hosted by a cloud service provider, which allows users to access data and applications over the internet from anywhere.

These are just a few examples of the many types of networks that exist. The type of network that is appropriate for a particular application will depend on factors such as the size and location of the network, the type of devices being connected, and the level of security required.

Several different types of network architectures can be used to design and implement networks. Some of the most common types include:

- Client-Server Architecture: In a client-server architecture, one or more central servers provide services to multiple client devices. The clients access these services over the network, and the servers manage the resources and data.

- Peer-to-Peer Architecture: In a peer-to-peer architecture, devices on the network can act as both clients and servers, with no central server or authority. This allows devices to share resources and communicate with each other without relying on a single server.

- Cloud Computing Architecture: In a cloud computing architecture, services and applications are hosted on servers located in data centers operated by cloud service providers. Users access these services over the internet, and the cloud provider manages the infrastructure and resources.

- Distributed Architecture: In a distributed architecture, multiple servers are used to provide services to clients, with each server handling a specific function. This provides redundancy and fault tolerance, as well as better scalability.

- Hierarchical Architecture: In a hierarchical architecture, devices are organized into a tree-like structure, with a central node or root at the top, followed by intermediary nodes, and finally end devices at the bottom. This allows for efficient communication and management of devices in larger networks.

- Mesh Architecture: In a mesh architecture, devices are connected in a non-hierarchical, mesh-like topology, with multiple paths for communication between devices. This provides high fault tolerance and resilience, as well as better scalability.

- Virtual Private Network (VPN) Architecture: In a VPN architecture, devices in a network are connected over an encrypted tunnel, allowing users to access a private network over the internet securely.

Each type of network architecture has its advantages and disadvantages, and the appropriate architecture will depend on the specific needs and requirements of the network.
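To make the client-server model concrete, here is a minimal Python sketch using the standard `socket` and `threading` modules on the local machine. The server role accepts one connection and returns the request in upper case; the client role connects, sends a request, and reads the reply. The upper-casing "service" is a hypothetical stand-in for real server work.

```python
import socket
import threading


def run_uppercase_server(server_sock: socket.socket) -> None:
    # Server role: accept one client, read until it finishes sending, reply.
    conn, _addr = server_sock.accept()
    with conn:
        chunks = []
        while chunk := conn.recv(1024):
            chunks.append(chunk)
        conn.sendall(b"".join(chunks).upper())


def client_request(port: int, message: bytes) -> bytes:
    # Client role: connect, send the request, signal end of request, read the reply.
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(message)
        sock.shutdown(socket.SHUT_WR)
        reply = b""
        while chunk := sock.recv(1024):
            reply += chunk
        return reply


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS choose a free port
    server.listen()
    port = server.getsockname()[1]
    worker = threading.Thread(target=run_uppercase_server, args=(server,))
    worker.start()
    reply = client_request(port, b"hello server")
    worker.join()

print(reply)  # b'HELLO SERVER'
```

A peer-to-peer design would differ mainly in that every node runs both the listening (server) and connecting (client) halves.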

There are many different network protocols used for communication between devices in a network. Some of the most common types of network protocols include:

- Transmission Control Protocol (TCP): A protocol that provides reliable and ordered delivery of data over a network, ensuring that data is delivered without errors or loss.

- User Datagram Protocol (UDP): A protocol that provides a connectionless service for transmitting data over a network, without the reliability guarantees of TCP.

- Internet Protocol (IP): A protocol that provides the addressing and routing functions necessary for transmitting data over the internet.

- Hypertext Transfer Protocol (HTTP): A protocol used for transmitting web pages and other data over the internet.

- File Transfer Protocol (FTP): A protocol used for transferring files between computers over a network.

- Simple Mail Transfer Protocol (SMTP): A protocol used for transmitting email messages over a network.

- Domain Name System (DNS): A protocol used for translating domain names into IP addresses, allowing devices to locate each other on the internet.

- Dynamic Host Configuration Protocol (DHCP): A protocol used for dynamically assigning IP addresses to devices on a network.

- Secure Shell (SSH): A protocol used for secure remote access to a network device.

- Simple Network Management Protocol (SNMP): A protocol used for monitoring and managing network devices.

These are just a few examples of the many types of network protocols that exist. Different protocols are used for different purposes, and the appropriate protocol will depend on the specific needs of the network and the devices being used.

Each network protocol serves a specific purpose in facilitating communication between devices in a network. Here are some of the most common uses of the network protocols:

- Transmission Control Protocol (TCP): TCP is used for reliable and ordered delivery of data over a network, making it ideal for applications that require high levels of accuracy and completeness, such as file transfers or email.

- User Datagram Protocol (UDP): UDP is used when speed is more important than reliability, such as for video streaming, real-time gaming, and other applications that require fast response times.

- Internet Protocol (IP): IP is used for addressing and routing packets of data across the internet, enabling devices to find and communicate with each other.

- Hypertext Transfer Protocol (HTTP): HTTP is used for transmitting web pages and other data over the internet, making it the protocol of choice for browsing the World Wide Web.

- File Transfer Protocol (FTP): FTP is used for transferring files between computers over a network, making it a common protocol for sharing files between users or transferring files to a web server.

- Simple Mail Transfer Protocol (SMTP): SMTP is used for sending and relaying email messages between mail clients and servers; retrieving messages from a mailbox is handled by separate protocols such as IMAP and POP3.

- Domain Name System (DNS): DNS is used for translating domain names into IP addresses, allowing devices to locate each other on the internet.

- Dynamic Host Configuration Protocol (DHCP): DHCP is used for dynamically assigning IP addresses to devices on a network, enabling devices to connect to a network and communicate with other devices.

- Secure Shell (SSH): SSH is used for secure remote access to a network device, enabling users to log in to a device from a remote location and perform administrative tasks.

- Simple Network Management Protocol (SNMP): SNMP is used for monitoring and managing network devices, enabling administrators to monitor device performance, configure devices, and troubleshoot issues.

In summary, network protocols play a critical role in enabling communication and data transfer between devices in a network. Each protocol has its specific purpose, and the appropriate protocol will depend on the specific needs of the network and the devices being used.
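As a concrete look at one of these protocols, the Python sketch below builds (but does not send) a DNS query message in the wire format defined in RFC 1035: a 12-byte header followed by a question section. The query ID and domain are arbitrary examples; such a message is typically sent over UDP port 53 to a resolver.

```python
import struct


def encode_qname(domain: str) -> bytes:
    # Each label is prefixed by its length; the name ends with a zero-length label.
    out = b""
    for label in domain.rstrip(".").split("."):
        encoded = label.encode("ascii")
        if not 0 < len(encoded) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        out += bytes([len(encoded)]) + encoded
    return out + b"\x00"


def build_query(domain: str, query_id: int = 0x1234) -> bytes:
    # Header: ID, flags (recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Question: QNAME, QTYPE=1 (A record), QCLASS=1 (IN).
    question = encode_qname(domain) + struct.pack("!HH", 1, 1)
    return header + question


print(build_query("google.com").hex())
```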

The protocols used in different network architectures can vary depending on the specific implementation and requirements of the network. Here are some examples of protocols commonly used in different network architectures:

- Client-Server Architecture: Protocols such as TCP, UDP, HTTP, SMTP, and FTP are commonly used in client-server architecture to enable communication between clients and servers.

- Peer-to-Peer Architecture: Peer-to-peer networks often use protocols such as BitTorrent, Direct Connect (DC), and Gnutella to facilitate file sharing and communication between devices.

- Cloud Computing Architecture: Cloud computing often relies on protocols such as HTTP, HTTPS, and REST (Representational State Transfer) to enable communication between clients and cloud services.

- Distributed Architecture: Distributed systems often use protocols such as Remote Procedure Call (RPC), Distributed Component Object Model (DCOM), and Message Passing Interface (MPI) to enable communication between different servers in the network.

- Hierarchical Architecture: Hierarchical networks often use protocols such as Simple Network Management Protocol (SNMP) to enable the management and monitoring of devices in the network.

- Mesh Architecture: Mesh networks often use protocols such as Ad-hoc On-demand Distance Vector (AODV), Dynamic Source Routing (DSR), and Optimized Link State Routing (OLSR) to enable communication between devices in the network.

- Virtual Private Network (VPN) Architecture: VPNs often use protocols such as Internet Protocol Security (IPsec) and Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), to provide secure and encrypted communication between devices.

These are just a few examples of the many protocols used in different network architectures. The appropriate protocol will depend on the specific needs and requirements of the network, as well as the devices and applications being used.

Here are some common networking technologies and a brief explanation of each:

- Ethernet: As explained earlier, Ethernet is a wired networking technology commonly used in local area networks (LANs) to transmit data packets between computers and other network devices over a physical network cable.

- Wi-Fi: Wi-Fi is a wireless networking technology that uses radio waves to connect devices to the internet or a local network without the need for physical cables. Wi-Fi networks are commonly used in homes, offices, public spaces, and other locations.

- Bluetooth: Bluetooth is a wireless networking technology used for short-range communication between devices, typically within a range of 10 meters. Bluetooth is commonly used for connecting wireless headphones, speakers, keyboards, and other devices to smartphones, tablets, and computers.

- NFC (Near Field Communication): NFC is a short-range wireless communication technology that allows two devices to exchange data when they are close to each other. NFC is commonly used for contactless payments, ticketing, and other applications.

- Cellular Networks: Cellular networks are wireless networking technologies used to connect mobile devices such as smartphones and tablets to the internet or a cellular network provider. These networks use radio waves to communicate between mobile devices and cellular network towers.

- VPN (Virtual Private Network): VPN is a technology that allows users to securely connect to a private network over the internet. VPNs use encryption and other security measures to protect data transmitted over the network, making them useful for remote workers, travelers, and others who need to access private networks from outside the organization.

- DNS (Domain Name System): DNS is a technology used to translate human-readable domain names (such as google.com) into IP addresses used by computers and other devices to connect to websites and services over the internet.

These are just a few examples of the many networking technologies available today. The appropriate technology will depend on the specific needs and requirements of the network and the devices being used.

Here are some common wired networking technologies and a brief explanation of each:

- Ethernet: Ethernet is a wired networking technology commonly used in local area networks (LANs) to transmit data packets between computers and other network devices over a physical network cable. Ethernet supports various speeds ranging from 10 Mbps to 100 Gbps or more.

- Fiber Optics: Fiber optics is a wired networking technology that uses glass or plastic fibers to transmit data over long distances at high speeds. Fiber optic cables are capable of transmitting data at speeds of up to 100 Gbps or more and are commonly used in internet backbone networks, data centers, and other high-speed networking applications.

- Coaxial Cable: Coaxial cable is a wired networking technology that uses a copper core surrounded by a shield to transmit data between devices. Coaxial cables are commonly used in cable television (CATV) networks and some older LANs.

- Powerline Networking: Powerline networking is a wired networking technology that uses existing electrical wiring to transmit data between devices. Powerline adapters plug into electrical outlets and use the wiring in the walls to transmit data at speeds of up to 2 Gbps.

- Ethernet over HDMI (EOH): HDMI 1.4 added an HDMI Ethernet Channel (HEC) that carries 100 Mbps Ethernet over the HDMI cable between devices. It is mainly intended for home theater systems, letting connected devices share a network connection over short distances.

- USB Networking: USB networking uses USB connections to carry network traffic, for example through USB-to-Ethernet adapters or by tethering a computer to a smartphone's cellular connection. USB itself is also commonly used to connect peripherals such as printers and external hard drives directly to computers.

These are just a few examples of the many wired networking technologies available today. The appropriate technology will depend on the specific needs and requirements of the network and the devices being used.

Ethernet is a family of wired networking technologies commonly used in local area networks (LANs). It is a standard for transmitting data packets between computers and other network devices over a physical network cable.

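Ethernet was originally developed at Xerox PARC in the 1970s and later standardized as IEEE 802.3, and it has since become the most widely used wired LAN technology. The standard defines both the physical cabling and signaling and the frame format, including source and destination MAC addresses, that devices use to exchange data packets over the network.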

Ethernet networks typically use twisted pairs or fiber optic cables to transmit data between devices. These cables connect to a network switch or hub, which manages the traffic between devices.

Ethernet networks can support various speeds, ranging from 10 Mbps (megabits per second) to 100 Gbps (gigabits per second) or more. Many ports today are 10/100/1000 Mbps ports that auto-negotiate among these speeds; the 1000 Mbps rate is known as Gigabit Ethernet.

Ethernet is used in many different types of networks, including home and office networks, data centers, and internet service provider (ISP) networks. It is a reliable and widely adopted technology that has enabled the growth and development of modern computer networks.
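To show what the Ethernet frame format looks like on the wire, here is a minimal Python sketch that parses the 14-byte Ethernet II header (destination MAC, source MAC, EtherType) with the standard `struct` module. The sample frame is a hand-built ARP broadcast with placeholder payload, and the EtherType table covers only a few well-known values.

```python
import struct

# A few well-known EtherType values.
ETHERTYPES = {0x0800: "IPv4", 0x0806: "ARP", 0x86DD: "IPv6", 0x8100: "802.1Q VLAN tag"}


def format_mac(raw: bytes) -> str:
    return ":".join(f"{b:02x}" for b in raw)


def parse_ethernet_header(frame: bytes) -> dict:
    """Parse destination MAC, source MAC, and the type/length field."""
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    dst, src, type_or_length = struct.unpack("!6s6sH", frame[:14])
    if type_or_length <= 1500:
        # Values up to 1500 are an IEEE 802.3 length field, not an EtherType.
        kind = f"802.3 length {type_or_length}"
    else:
        kind = ETHERTYPES.get(type_or_length, hex(type_or_length))
    return {"dst": format_mac(dst), "src": format_mac(src), "ethertype": kind}


# Hand-built ARP broadcast: destination ff:ff:..., hypothetical source MAC, zero payload.
frame = bytes.fromhex("ffffffffffff" "001a2b3c4d5e" "0806") + b"\x00" * 28
print(parse_ethernet_header(frame))
```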

Here are some fiber networking technologies with explanations:

- Fiber to the Home (FTTH): FTTH is a type of fiber networking technology that delivers high-speed internet, TV, and phone services directly to homes and businesses. FTTH uses optical fiber cables to transmit data at extremely high speeds, enabling users to enjoy reliable and fast connectivity.

- Fiber to the Building (FTTB): FTTB is similar to FTTH, but instead of connecting individual homes, it connects multiple units or businesses within a building to a fiber network. This technology is commonly used in multi-tenant buildings, such as apartments or offices.

- Fiber to the Curb (FTTC): FTTC uses fiber-optic cables to connect to a neighborhood's central distribution point or cabinet, and then uses copper wires to connect to individual homes or businesses. This technology is used to provide high-speed internet services to suburban areas.

- Passive Optical Network (PON): PON is a fiber networking technology in which a single optical fiber from the provider is divided by unpowered (passive) optical splitters to deliver high-speed internet, TV, and phone services to multiple users. Because no powered equipment is needed between the provider and the users, PON is a cost-effective solution for delivering high-speed connectivity to a large number of users in a single network.

- Dense Wavelength Division Multiplexing (DWDM): DWDM is a fiber networking technology that allows multiple wavelengths of light to be transmitted over a single optical fiber cable. This technology enables high-capacity transmission of data over long distances, making it ideal for applications such as telecommunications, data centers, and long-distance networking.

- Gigabit Passive Optical Network (GPON): GPON is a type of PON, standardized by the ITU-T as G.984, that uses a single optical fiber cable to provide high-speed internet, TV, and phone services to multiple users. GPON delivers up to about 2.5 Gbps downstream and 1.25 Gbps upstream, shared among all users on the same splitter, making it an attractive solution for high-bandwidth applications.

These are just a few examples of fiber networking technologies, each with its strengths and weaknesses. The appropriate technology will depend on the specific application, location, and budget.
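Because a PON shares one line rate among every user on a splitter, the split ratio directly sets each user's worst-case bandwidth. The Python sketch below does that arithmetic for GPON's nominal rates (2.488 Gbps down, 1.244 Gbps up). It ignores framing overhead and assumes every user is busy at once, which overstates contention, since in practice users rarely all transmit simultaneously; the split ratios are common examples.

```python
GPON_DOWNSTREAM_GBPS = 2.488  # nominal ITU-T G.984 downstream line rate
GPON_UPSTREAM_GBPS = 1.244    # nominal upstream line rate


def per_user_share_mbps(line_rate_gbps: float, split_ratio: int) -> float:
    # Worst case: the line rate divided evenly among all users on the splitter.
    return line_rate_gbps * 1000 / split_ratio


for split in (16, 32, 64):
    down = per_user_share_mbps(GPON_DOWNSTREAM_GBPS, split)
    up = per_user_share_mbps(GPON_UPSTREAM_GBPS, split)
    print(f"1:{split} split -> {down:.1f} Mbps down, {up:.1f} Mbps up per user")
```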

Here are some types of fiber networking with explanations:

- Single-mode fiber (SMF): Single-mode fiber is an optical fiber designed to carry a single ray of light, or mode, over long distances. It has a small core diameter, typically 8-10 microns, and is capable of transmitting data at extremely high speeds over long distances with minimal signal loss. SMF is commonly used in long-distance telecommunications and data center applications.

- Multimode fiber (MMF): Multimode fiber is an optical fiber designed to carry multiple modes of light simultaneously. It has a larger core diameter, typically 50 or 62.5 microns, and is capable of transmitting data over shorter distances than single-mode fiber. MMF is commonly used in local area networks (LANs) and other short-distance applications.

- Plastic optical fiber (POF): Plastic optical fiber is made of polymer materials rather than glass. POF has a larger core diameter than glass fiber, typically 1mm, and is capable of transmitting data at lower speeds over shorter distances. POF is commonly used in automotive and home networking applications.

- Active Optical Cable (AOC): Active Optical Cable is a type of fiber optic cable that integrates optical and electrical components to provide high-speed data transfer over longer distances than copper cables. AOC typically uses multimode fiber and is commonly used in data centers and high-performance computing applications.

- Fiber Distributed Data Interface (FDDI): FDDI is a high-speed networking standard that uses a dual-ring topology to transmit data over optical fiber cables at up to 100 Mbps. It was historically used in campus backbones and mission-critical networks, such as banking and government networks, but has largely been replaced by Ethernet.

These are just a few examples of fiber networking types, each with its advantages and disadvantages. The appropriate type will depend on the specific application, location, and budget.
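When choosing between these fiber types, a basic link loss budget helps check whether a run will work: total loss is fiber attenuation times length plus per-connector and per-splice losses, and it must stay below the optics' power budget. The Python sketch below uses assumed planning values (0.35 dB/km for single-mode at 1310 nm, 0.75 dB per connector pair, 0.3 dB per splice) and a hypothetical 10 dB power budget; real designs should use the cable and transceiver datasheets.

```python
def link_loss_db(length_km: float, attenuation_db_per_km: float,
                 connectors: int, splices: int,
                 connector_loss_db: float = 0.75, splice_loss_db: float = 0.3) -> float:
    """Estimate end-to-end optical loss for a fiber link (assumed default allowances)."""
    return (length_km * attenuation_db_per_km
            + connectors * connector_loss_db
            + splices * splice_loss_db)


# Hypothetical 10 km single-mode run with 2 connector pairs and 4 splices.
loss = link_loss_db(10, 0.35, connectors=2, splices=4)
power_budget_db = 10.0  # hypothetical: transmitter power minus receiver sensitivity
print(f"loss {loss:.2f} dB, margin {power_budget_db - loss:.2f} dB")
```

A positive margin (here about 3.8 dB) leaves room for aging, repairs, and extra splices; a negative margin means the link needs better optics, fewer connections, or a shorter run.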

Here are some common uses of different types of fiber networking:

- Single-mode fiber: Single-mode fiber is commonly used in long-distance telecommunications applications, such as transmitting data between cities or countries. It is also used in data centers for high-speed interconnects and storage area networks.

- Multimode fiber: Multimode fiber is commonly used in local area networks (LANs) and other short-distance applications. It is also used in data centers for high-speed interconnects and Fibre Channel storage area networks.

- Plastic optical fiber: Plastic optical fiber is commonly used in automotive and home networking applications, such as transmitting audio and video signals between devices.

- Active Optical Cable: Active Optical Cable is commonly used in data centers for high-speed interconnects and storage area networks. It is also used in high-performance computing applications, such as supercomputing and machine learning.

- Fiber Distributed Data Interface: FDDI was historically used in mission-critical applications, such as banking and government networks, and in campus and data center backbones; most of these deployments have since migrated to Ethernet.

Overall, fiber networking is used in a wide range of applications where high-speed, reliable, and secure data transmission is required. Whether it's transmitting data across continents, connecting devices in a home network, or powering a supercomputer, fiber networking plays a critical role in modern communication and computing systems.

Here are some common wireless networking technologies and a brief explanation of each:

- Wi-Fi: Wi-Fi is a wireless networking technology that uses radio waves to connect devices to the internet or a local network without the need for physical cables. Wi-Fi networks are commonly used in homes, offices, public spaces, and other locations. Wi-Fi operates mainly in the 2.4 GHz and 5 GHz bands, with Wi-Fi 6E and Wi-Fi 7 adding the 6 GHz band, and different Wi-Fi standards support different speeds and features.

- Bluetooth: Bluetooth is a wireless networking technology used for short-range communication between devices, typically within a range of 10 meters. Bluetooth is commonly used for connecting wireless headphones, speakers, keyboards, and other devices to smartphones, tablets, and computers. Bluetooth uses the 2.4 GHz frequency band.

- NFC (Near Field Communication): NFC is a short-range wireless communication technology that allows two devices to exchange data when they are very close to each other, typically within a few centimeters. NFC is commonly used for contactless payments, ticketing, and other applications. NFC operates at 13.56 MHz.

- Cellular Networks: Cellular networks are wireless networking technologies that connect mobile devices such as smartphones and tablets to the internet through a mobile network operator. These networks use radio waves to communicate between mobile devices and base stations (cell towers). Cellular networks operate in various frequency bands depending on the technology generation (such as 4G LTE or 5G), the region, and the operator.

- Zigbee: Zigbee is a wireless networking technology commonly used in home automation and Internet of Things (IoT) devices. Zigbee operates mainly in the 2.4 GHz band worldwide, with sub-gigahertz options at 868 MHz in Europe and 915 MHz in the Americas, and is designed for low-power, low-data-rate applications.

- Z-Wave: Z-Wave is a wireless networking technology similar to Zigbee, used in home automation and IoT devices. Z-Wave operates in sub-gigahertz bands that vary by region (for example, around 908 MHz in the United States and 868 MHz in Europe) and is designed for low-power, low-data-rate applications.

These are just a few examples of the many wireless networking technologies available today. The appropriate technology will depend on the specific needs and requirements of the network and the devices being used.

Here are some networking technologies used for communication in space exploration:

- Deep Space Network (DSN): The Deep Space Network is a network of large radio antennas at three sites on Earth (California, Spain, and Australia), operated for NASA by the Jet Propulsion Laboratory (JPL). The sites are spaced roughly 120 degrees apart in longitude so that, as the Earth rotates, at least one of them can see a distant spacecraft. DSN is used to communicate with and receive data from spacecraft exploring our solar system and beyond.

- Laser Communication Relay Demonstration (LCRD): LCRD is a NASA mission, launched in 2021 into geosynchronous orbit, that tests laser (optical) communication by relaying data between ground stations and, through the ILLUMA-T terminal, the International Space Station. Laser communication has the potential to transmit data at much higher rates than radio communication.

- Tracking and Data Relay Satellite (TDRS): TDRS is a network of communication satellites operated by NASA that provides continuous communication coverage to spacecraft in low-Earth orbit. TDRS satellites are used to relay data between the spacecraft and ground stations on Earth.

- Interplanetary Internet: The Interplanetary Internet is a networking approach, pursued by NASA and its partners, for linking spacecraft, orbiters, and rovers across the solar system. It is built on Delay/Disruption-Tolerant Networking (DTN) protocols such as the Bundle Protocol, which store data at intermediate nodes and forward it when the next link becomes available. This makes the system resilient to the high latency and intermittent connectivity of deep-space communication.

These are just a few examples of the many networking technologies used for communication in space exploration. The appropriate technology will depend on the specific mission requirements and the distance and location of the spacecraft or rovers.
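The store-and-forward principle behind delay-tolerant networking can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not NASA's software or the Bundle Protocol itself. The `Bundle` and `RelayNode` classes and the contact schedule are hypothetical, and a real implementation would also handle bundle lifetimes, routing, and security. The key idea is that a relay keeps data it cannot yet forward instead of discarding it.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Bundle:
    """A unit of data in transit (hypothetical, simplified)."""
    bundle_id: int
    payload: str


class RelayNode:
    """Toy store-and-forward relay: holds bundles until its outbound link is up."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.storage: deque[Bundle] = deque()  # buffer used while the link is down
        self.delivered: list[Bundle] = []      # bundles handed to the next hop

    def receive(self, bundle: Bundle) -> None:
        # Accept and store the bundle; no end-to-end path is required right now.
        self.storage.append(bundle)

    def contact(self, link_up: bool) -> int:
        """Forward stored bundles in arrival order if the link is up; return the count sent."""
        if not link_up:
            return 0
        sent = 0
        while self.storage:
            self.delivered.append(self.storage.popleft())
            sent += 1
        return sent


# Hypothetical contact schedule: the relay can reach Earth only in some time slots.
relay = RelayNode("orbiter")
schedule = [False, False, True, False, True]
for step, link_up in enumerate(schedule):
    relay.receive(Bundle(step, f"science data {step}"))
    sent = relay.contact(link_up)
    print(f"t={step}: link_up={link_up}, forwarded={sent}, stored={len(relay.storage)}")
```

Running it shows bundles accumulating in storage during each outage and leaving in a batch at the next contact, rather than being lost for lack of an end-to-end path.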

Here is a comparison of the space communication technologies mentioned earlier:

- Deep Space Network (DSN):
  - Pros: established and reliable technology; can communicate with multiple spacecraft simultaneously; supports a wide range of communication frequencies.
  - Cons: limited bandwidth and data rates compared with newer technologies; long latency due to the distance between Earth and deep-space spacecraft; antenna time must be shared among many missions, and higher-frequency links are sensitive to weather at the ground stations.

- Laser Communication Relay Demonstration (LCRD):
  - Pros: high data rates and bandwidth compared with radio communication; narrow beams that are more resistant to interference and jamming; potential to reduce the size and weight of communication equipment on spacecraft.
  - Cons: requires line-of-sight communication and precise pointing of the laser beam; clouds and atmospheric turbulence can block or degrade links to ground stations; the technology is still being demonstrated and is not yet widely adopted.

- Tracking and Data Relay Satellite (TDRS):
  - Pros: continuous communication coverage for low-Earth orbit spacecraft; supports high data rates and bandwidth; established and reliable technology.
  - Cons: limited coverage beyond low-Earth orbit; satellites are costly to maintain and replace; links are sensitive to atmospheric conditions, and the satellites are exposed to space debris.

- Interplanetary Internet:
  - Pros: designed to be resilient to high latency and intermittent connectivity; supports a wide range of data rates and bandwidth; can potentially improve communication efficiency and reliability.
  - Cons: still in development and not yet widely adopted; requires significant investment and infrastructure; coverage and performance depend on the underlying radio and optical links.

The best approach for space communication depends on the specific mission requirements and constraints. Each technology has its strengths and weaknesses, and the right choice depends on distance, location, data rates, bandwidth, and reliability requirements. For example, the Deep Space Network may be more appropriate for long-range communication with multiple spacecraft, while laser communication may be more suitable for high-bandwidth links where precise pointing and a clear line of sight are achievable. Ultimately, a combination of these technologies may be used to provide a comprehensive communication network for space exploration.
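Distance also puts a hard floor under latency, because radio and laser signals both travel at the speed of light: the one-way delay is simply the distance divided by c. Here is a quick Python sketch. The distances in the dictionary are approximate figures assumed for illustration, and the helper name `one_way_light_time_s` is hypothetical.

```python
# Speed of light in vacuum, in kilometres per second (exact by definition of the metre).
SPEED_OF_LIGHT_KM_S = 299_792.458


def one_way_light_time_s(distance_km: float) -> float:
    """Return the one-way signal delay in seconds for a given distance in km."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return distance_km / SPEED_OF_LIGHT_KM_S


# Approximate distances, assumed for illustration only.
examples_km = {
    "Geostationary orbit (altitude)": 35_786,
    "Earth to Moon (average)": 384_400,
    "Earth to Mars (close approach)": 54_600_000,
    "Earth to Mars (near maximum)": 401_000_000,
}

for label, km in examples_km.items():
    seconds = one_way_light_time_s(km)
    print(f"{label}: {seconds:,.2f} s ({seconds / 60:.1f} min)")
```

For Mars this works out to roughly 3 to 22 minutes each way, so a single request and reply can take from about 6 to 45 minutes. No choice of technology removes this delay, which is why protocols designed to tolerate it matter.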

In conclusion, networking technology has come a long way since its early days, and it has had a profound impact on our daily lives. As we look to the future, new technologies will continue to emerge, offering even greater benefits and transforming the way we live, work, and communicate with each other.

The Evolution of Programming Languages: Past, Present, and Future

Programming has come a long way since its inception in the 19th century, with new technologies and innovations driving its evolution. In this blog, we explore the history of programming, the types of programming languages, the future of programming, the role of AI in programming, and the role of popular IDEs in modern programming.

Programming is important because it enables us to create software, websites, mobile apps, games, and many other digital products that we use in our daily lives. It allows us to automate tasks, solve complex problems, and create innovative solutions that improve our lives and businesses. In today's digital age, programming skills are in high demand and are essential for success in many industries, from tech to finance to healthcare. By learning to code, we can open up a world of opportunities and take advantage of the many benefits that technology has to offer.

Early programming was often done with punched cards, a tedious and time-consuming process. As interactive terminals, compilers, and later personal computers became common, programming became far more accessible and efficient.

History of Programming

The history of programming dates back to the 1840s, when mathematician Ada Lovelace published an algorithm for Charles Babbage's Analytical Engine, a mechanical general-purpose computer that was designed but never built. The first widely used high-level programming language, FORTRAN (Formula Translation), was developed at IBM in the 1950s and released in 1957. It was used for scientific and engineering calculations.

In the late 1950s and 1960s, languages such as COBOL (Common Business-Oriented Language), ALGOL (Algorithmic Language), and BASIC (Beginner's All-purpose Symbolic Instruction Code) were developed. COBOL targeted business data processing, ALGOL became influential for describing algorithms in research, and BASIC was designed to make programming accessible to students.

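The 1970s saw the development of languages such as C, which was used to write the Unix operating system, and Pascal, which was widely used for teaching and application development. Smalltalk, developed at Xerox PARC during the 1970s and released publicly as Smalltalk-80 in 1980, popularized object-oriented programming, building on ideas introduced by Simula in the 1960s. Object-oriented design helped developers create reusable code, and the Smalltalk environment was closely associated with early graphical user interfaces.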

The late 1980s and 1990s brought scripting languages such as Perl (1987), Python (1991), Ruby (1995), and PHP (1995), along with Java and JavaScript (both 1995). Perl and PHP became staples of early web development, and Ruby gained wide popularity in the mid-2000s through the Ruby on Rails framework. Today, languages such as Java, C++, Python, and JavaScript are widely used for a broad range of applications.

Logic plays a fundamental role in programming. Programming is essentially the process of writing instructions for a computer to follow, and these instructions must be logical and well-organized for the computer to execute them correctly.

Programming requires logical thinking and the ability to break down complex problems into smaller, more manageable parts. Programmers use logic to develop algorithms, which are step-by-step procedures for solving problems. These algorithms must be logical and accurate, with each step leading logically to the next.
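As a concrete example of an algorithm, consider Euclid's method for the greatest common divisor. This is a classic step-by-step procedure chosen here for illustration, since the text does not name a specific algorithm. Each step replaces the pair (a, b) with (b, a mod b), and the process stops when b reaches zero. The function name `gcd` is our own; Python's standard library already provides `math.gcd`.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of two non-negative integers via Euclid's algorithm."""
    if a < 0 or b < 0:
        raise ValueError("inputs must be non-negative")
    # Each iteration is one step: gcd(a, b) == gcd(b, a % b), and b strictly shrinks.
    while b != 0:
        a, b = b, a % b
    # When b reaches 0, a holds the answer, because gcd(a, 0) == a.
    return a


print(gcd(48, 18))  # 6
```

Every step follows logically from the previous one, and because b gets smaller on each pass, the loop is guaranteed to finish.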

In programming, logical operators and conditional statements are used to control the flow of a program. Logical operators such as AND, OR, and NOT are used to evaluate logical expressions and make decisions based on the results. Conditional statements such as IF, ELSE, and SWITCH are used to execute different parts of a program based on specific conditions.

Thus, logic is a critical component of programming. Without it, programs would not work correctly or produce the desired results. By developing strong logical thinking skills, programmers can write efficient and effective code that solves complex problems and meets the needs of users.

Types of Programming Languages

Programming languages can be broadly classified into three categories:

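- Low-Level Languages: These languages are close to machine language and are used where direct hardware control matters, such as in operating systems, device drivers, and firmware. Examples include machine code and assembly language. C and C++ are technically high-level languages but are often grouped here because they give programmers close control over memory and hardware.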

- High-Level Languages: These languages are easier to learn and use than low-level languages. They are used to write applications, games, and websites. Examples include Java, Python, and Ruby.

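- Scripting Languages: These languages are typically used to automate tasks and connect other programs, for example in system administration and web development. Examples include Perl, Python, and Ruby. Most scripting languages are also high-level languages, so these categories overlap.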
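A typical scripting chore is renaming a batch of files. The sketch below does this in Python, assuming a hypothetical `add_prefix` helper and made-up file names. It runs inside a temporary directory so that nothing real is touched.

```python
import tempfile
from pathlib import Path


def add_prefix(folder: Path, prefix: str, suffix: str = ".txt") -> list[str]:
    """Rename every file ending in `suffix` inside `folder` by adding `prefix`; return the new names."""
    renamed = []
    # sorted() builds the full list first, so renaming cannot disturb the iteration.
    for path in sorted(folder.glob(f"*{suffix}")):
        if path.name.startswith(prefix):
            continue  # already processed, so the script is safe to rerun
        target = path.with_name(prefix + path.name)
        path.rename(target)
        renamed.append(target.name)
    return renamed


# Demo in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for name in ["jan.txt", "feb.txt", "notes.md"]:
        (folder / name).write_text("report data\n")
    print(add_prefix(folder, "2024_"))  # ['2024_feb.txt', '2024_jan.txt']
```

Skipping files that already carry the prefix makes the script idempotent, which is useful for a task that will be run again and again.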

Here is a list of programming languages and a brief explanation of each:

- Java: Java is a high-level, object-oriented programming language developed by Sun Microsystems (now owned by Oracle Corporation). Java is designed to be platform-independent, meaning that Java code can run on any computer with a Java Virtual Machine (JVM) installed. Java is widely used for developing web applications, mobile apps, and enterprise software.

- Python: Python is a high-level, interpreted programming language that emphasizes code readability and simplicity. Python is widely used for scientific computing, data analysis, web development, and artificial intelligence.

- C: C is a compiled, general-purpose programming language that operates close to the hardware, and it is widely used for systems programming and embedded systems development. C is known for its efficiency and fine-grained control over memory and hardware, making it a common choice for developing operating systems, device drivers, and firmware.

- C++: C++ is an extension of the C programming language that adds support for object-oriented programming. C++ is widely used for developing high-performance software, including operating systems, games, and scientific simulations.

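- JavaScript: JavaScript is a high-level programming language that is widely used for developing web applications. It runs in web browsers, where it provides interactivity and dynamic behavior to web pages, and also on servers through runtimes such as Node.js. Although often described as interpreted, JavaScript is executed by modern engines using just-in-time compilation.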

- Ruby: Ruby is a high-level, interpreted programming language that emphasizes simplicity and productivity. Ruby is widely used for web development, automation, and scripting.

- Swift: Swift is a high-level, compiled programming language developed by Apple Inc. Swift is designed for developing applications for iOS, macOS, and watchOS. Swift is known for its safety, speed, and expressiveness.

- PHP: PHP is a server-side, interpreted programming language that is widely used for developing web applications. PHP is known for its simplicity and ease of use, making it a popular choice for web developers.

- SQL: SQL (Structured Query Language) is a domain-specific language used for managing relational databases. SQL is used to create, modify, and query databases, and is widely used in business and data analysis.

- Assembly language: Assembly language is a low-level programming language that is used to write instructions for a computer's CPU. Assembly language is difficult to read and write, but provides direct access to hardware and can be used to write highly optimized code.
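To show SQL creating, modifying, and querying a database, the sketch below runs SQL statements through Python's built-in `sqlite3` module against an in-memory SQLite database. The `employees` table and its rows are made up for illustration, and SQL dialects differ slightly between database systems.

```python
import sqlite3

# An in-memory database disappears when the connection closes.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Create: define a table.
cur.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary REAL)")

# Modify: insert rows (? placeholders avoid SQL injection), then update one of them.
cur.executemany(
    "INSERT INTO employees (name, dept, salary) VALUES (?, ?, ?)",
    [("Ana", "Finance", 72000), ("Ben", "IT", 65000), ("Chen", "IT", 81000)],
)
cur.execute("UPDATE employees SET salary = salary * 1.05 WHERE name = ?", ("Ben",))
conn.commit()

# Query: count employees and average salary per department.
rows = cur.execute(
    "SELECT dept, COUNT(*), ROUND(AVG(salary), 2) FROM employees GROUP BY dept ORDER BY dept"
).fetchall()
print(rows)
conn.close()
```

The same three kinds of statement (CREATE, INSERT/UPDATE, SELECT) work, with minor syntax differences, on larger relational databases.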

There are many other programming languages in use today, each with its strengths and weaknesses. Choosing the right programming language for a particular task depends on a variety of factors, including the requirements of the project, the developer's experience and expertise, and the availability of tools and libraries.

Here are some examples of low-level programming languages and a brief explanation of each:

- Machine Language: Machine language is the lowest-level programming language and the only one a computer's processor executes directly. It consists of binary code (0s and 1s) that encodes machine instructions. Each processor architecture has its own machine language, which is difficult for humans to read and write.

- Assembly Language: Assembly language is a low-level programming language that is easier to read and write than machine language. Assembly language uses mnemonics to represent machine instructions, making it more human-readable. Assembly language programs are translated into machine language by an assembler.