Cataloguing Strategic Innovations and Publications

Surfing a digital wave, or drowning

Information technology is everywhere. For companies’ IT departments, that is a mixed blessing

The days of derision are long gone: now geeks are gods. Amazon, Apple, Facebook, Google, and Twitter are reinventing how mere mortals converse, read, play, shop, and live. To thousands of bright young people, nothing is cooler than coding the night away, striving to turn their startup into the next big thing.

A little of this glamour should by rights be lighting up companies’ information-technology departments, too. Corporate IT has been around for decades, growing in importance and expense. Its bosses, styled for 20-odd years as chief information officers, may perch only a rung or two from the top of the corporate ladder.

However, IT departments have, in many non-tech firms, remained hidden away, automating unexciting but essential functions—supply chains, payroll, and so forth. And by now, this digitizing of business processes “has played itself out in a lot of enterprises,” says Lee Congdon, the chief information officer of Red Hat, a provider of open-source software.

There is still plenty going on in the back office: the advent of cloud computing means that software can be continually updated and paid for by subscription and that fewer companies will need their own data centers. But the truly dramatic change is happening elsewhere. Demands for digitization are coming from every corner of the company. The marketing department would like to run digital campaigns. Sales teams want seamless connections to customers as well as to each other. Everyone wants the latest mobile device and to try out the cleverest new app. And they all want it now.

Rich prizes beckon companies that grasp digital opportunities; ignominy awaits those that fail. Some are seizing their chance. Burberry, a posh British fashion chain, has dressed itself in IT from top to toe. Clever in-store screens show off its clothes. Employees confer on Burberry Chat, an internal social network. This may explain why Apple has poached Angela Ahrendts, Burberry’s chief executive, to run its shops.

In theory, this is a fine opportunity for the IT department to place itself right at the center of corporate strategy. In practice, the rest of the company is not always sure that the IT guys are up to the job—and they are often prepared to buy their IT from outsiders if need be. Worse, it seems that a lot of IT guys doubt their ability to keep up with the pace of the digital age. According to Dave Aron of Gartner, a research firm, in a recent survey of chief information officers around the world just over half agreed that both their businesses and their IT organizations were “in real danger” from a “digital tsunami”. “Some feel excited, some feel threatened,” says Mr. Aron, “but nobody feels like it’s boring and business as usual.”

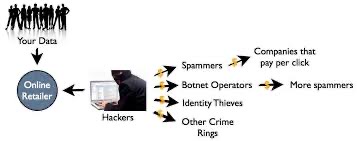

One reason for worry is that IT bosses are conservative by habit, and with good reason. Above all they must keep essential systems running, and safe. Those systems are under continual attack. If they are breached, the head of IT carries the can. More broadly, IT departments like to know who is up to what. Many of them gave up one battle long ago, by letting staff choose their smartphones (a trend known as “bring your own device”). When the chief executive insists on an iPhone rather than a fogeyish BlackBerry, it is hard to refuse.

That has been no bad thing, given the enormous number of applications being churned out for Apple’s devices and those using Google’s Android operating system, many of which can do wonders for productivity. The trouble lies in keeping tabs on all the apps people like to use for work. With cloud-based file-sharing services or social media, it is easy to share information and switch from a PC in the office to a mobile device. But if people are careless, they may put confidential data at risk. They may run up bills as well. Many applications cost nothing for the first few users but charges kick in once they catch on.

Impatient marketers

The digital world, however, runs faster than the typical IT department’s default speed. Other bits of the business are not always willing to wait. Marketing, desperate to use digital wiles to woo customers and to learn what they are thinking, is especially impatient. Forrester, another research firm, estimates that marketing departments’ spending on IT is rising two to three times as fast as that of companies as a whole. Almost one in three marketers think the IT department hinders success.

The IT crowd worries that haste has hidden costs. The marketers, points out Vijay Gurbaxani of the Centre for Digital Transformation at the University of California, Irvine, will not build in redundancy and disaster recovery so that not all is lost if projects go awry. To the cautious folk in IT departments, this is second nature.

A lack of resources does not help. Corporate budgets everywhere are under strain, and IT is often still seen as a cost rather than as a source of new business models and revenues. A lot of IT heads, indeed, report to the chief financial officer—although opinions differ about how much formal lines of command matter. But even if money is not in short supply, bodies are. When the whole company is looking for new ways to put technology to work, the IT department cannot do it all.

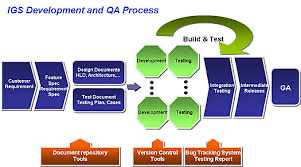

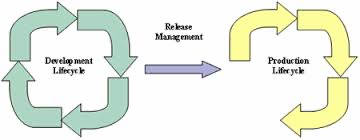

In different ways, a lot of companies have decided that it shouldn’t. Many technology-intensive organizations have long had chief technology officers, who keep products at the cutting edge while leaving chief information officers in charge of the internal plumbing. Lately, a new post has appeared: the chief digital officer, whose task is to seek ways of embedding digital technology into products and business models. Gartner estimates that 5-6% of companies now have one. About half practice some form of “two-speed IT”.

If the chief information and digital officers work nicely together, it’s “fantastic,” says Didier Bonnet of Capgemini, a firm of consultants. He points to Starbucks, where such a pair have operated in tandem since last year. The chief digital officer, Adam Brotman, oversees all the coffee chain’s digital projects, from social media to mobile payments, which used to be spread around different groups. But there are also examples, Mr. Bonnet adds, of conflict, which “can slow you down rather than speed you up”. Whatever the digital team comes up with still needs to fit in with the business’s existing IT systems.

So IT chiefs somehow have to let a thousand digital ideas bloom while keeping a weather eye on the whole field. At Dell, a PC-maker shifting towards services and software, Adriana Karaboutis, the chief information officer, says that she works closely with the marketing department: people there have developed applications that, once screened by the IT team, have ended up in Dell’s internal and external app stores. With such cooperation, says Ms. Karaboutis, “people stop seeing IT as something to go around, but as something to partner with.”

Corporate IT bosses are right to fear being overwhelmed. But cleaving to their old tasks and letting others take on the new ones unsupervised is not an option. Forrester calls this a “titanic mistake”. The IT department is not about to die, even if many functions ascend to the cloud. However, those of its chiefs who cannot adapt may fade away.

The Future of Information Technology

For any business or individual to succeed in today’s information-based world, they will need to understand the true nature of information. Business owners will have to be information-literate entrepreneurs, and their employees will have to be information-literate knowledge workers. Being information-literate means you can define what information is needed, know how and where to obtain it, understand its meaning once received, and act appropriately on it to help your business or organization achieve its goals.

As the world develops, new information technologies will appear on the market, and businesses that want a competitive advantage will have to learn how to use them. Employees must become literate in the latest information technology so that they can cope with demanding challenges at work.

Information technology as a subject will not change; it is the tools of information technology that will change. More technologies will be developed to simplify the way we use IT at work, at home, and everywhere in our lives.

Information can be described in four different ways: internal, external, objective, and subjective. Internal information describes specific operational aspects of the organization or business. External information describes the environment surrounding the organization. Objective information describes something that is known, and subjective information describes something unknown.

Business owners will have to know what their customers want and provide services or products in time. I call this “the time dimension”.

The time dimension of information involves two major aspects: (1) providing information when your customer wants it, and (2) providing information that describes the period your customer wants.

Information technology tools like computers will still be useful in the future, and their functionality will change with the main goal of improving the way we do business or transfer information. Institutions like banks, schools, shopping malls, and government agencies will all have to use new information technology tools to serve their users based on those users’ needs and expectations.

Future information technology will change the face of business. Already we have seen how current information technology has shaped the e-commerce world. With services like Google Wallet and Squareup, buyers can easily turn their mobile phones into payment gateways. These new payment gateways have shaped our e-commerce world, and more technologies will emerge as consumer demands increase with time. In brief, let’s look at some examples of information technology tools that will shape our future and simplify our lives.

(1) Google Wallet: Google Wallet will enable you to use any smartphone to purchase products online. It supports all credit and debit cards. The good thing about Google Wallet is that it will enable you to store all your cards online so that they’re with you wherever you go.

(2) Squareup: Squareup also enables you to make transactions using your mobile phone. As a user, you pay only 2.75% per swipe, with no additional fees, and deposits arrive the next day. This is a great tool for business: it is flexible and affordable. Square works with Android and iOS smartphones.
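To see what a 2.75% per-swipe fee means for a single sale, here is a minimal sketch in Python. The function names and the rounding rule (nearest cent, half up) are assumptions made for illustration; they are not taken from Square’s documentation.

```python
from decimal import Decimal, ROUND_HALF_UP

# 2.75% per swipe, the rate quoted in the text above.
SWIPE_FEE_RATE = Decimal("0.0275")


def swipe_fee(amount: Decimal) -> Decimal:
    """Fee on one swiped payment, rounded to the nearest cent (assumed rule)."""
    return (amount * SWIPE_FEE_RATE).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def net_deposit(amount: Decimal) -> Decimal:
    """Amount the seller keeps after the swipe fee is deducted."""
    return amount - swipe_fee(amount)


if __name__ == "__main__":
    sale = Decimal("100.00")
    print("fee:", swipe_fee(sale))
    print("net:", net_deposit(sale))
```

On a 100.00 sale the fee comes to 2.75, leaving 97.25 for the seller. Decimal is used instead of floating point so that cent amounts stay exact.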

More technologies will emerge as the world develops because our demands will change with time. So it is up to us to be literate and learn how to take advantage of future Information Technology.

Use of Technology in Classroom – Students Demand It

The increased use of technology in our daily lives has led students to demand their right to use technology in the classroom. Many schools and teachers have been reluctant to integrate technology into their curriculum. Out of school, students interact with various technological tools like tablets, computers, and smartphones, which can be used to simplify the way they learn. There is great tension over which technology should be used in schools: students have their needs, and parents and teachers are also debating the subject.

For the teachers, it will demand technical training, so that they get to know how to use technology in the classroom. Some of these educational technologies, like computers or smart whiteboards, are easy to learn, but the trouble is mastering how to use them for more than just one function, to meet both the teacher’s needs and the students’ needs.

As the world develops, new opportunities are coming up, and for our students to compete in tomorrow's demanding job market they will need to know how to use technology in various ways. This has led them to demand the use of technology in schools so that they are prepared for tomorrow's challenges.

Technology can simplify the way students learn both inside and outside the classroom. It makes education mobile and flexible, so students can study from wherever they want. Technology also makes education more personal, because students can do research and read on their own at any time of the day. So let's see how students are using technology for their personal use, and how schools can take advantage of their technical know-how.

They use tablets to read news and watch videos: Tablets are flexible and easy to use. Since they can access the internet from anywhere, it is very easy for students to find the information they need in an instant. So, how can schools and teachers take advantage of this? I think teachers can jump on the same technology and offer their students coursework and other academic information via these tablets. For example, a teacher can create a classroom blog where they post notes and assignments for students; they can even suggest e-books to download to aid the students’ research on a specific subject. On the same classroom blog, a teacher can integrate video and image illustrations on specific subjects, which can help students learn more easily.

They use smartphones to listen to audiobooks: All smartphones can access the internet, and they have large storage that can hold downloaded audiobooks. Many students are downloading these audiobooks for their personal use. You can simply listen to an audiobook while exercising or doing some other activity. Audiobooks simplify the way we learn: for example, a student can download a novel and listen to the author as they attend to other activities. So, how can schools and teachers take advantage of this technology? I think teachers can create audio notes that students can download and listen to at any time. This can simplify the way students access academic information, and it also improves the way they learn. The private business community has invested money in mobile educational applications that can be downloaded on mobile phones, and most of these mobile apps allow students to access digital libraries.

They use the Internet to connect with friends: Students spend most of their time connecting and sharing their personal lives via the Internet. Social networks like Facebook.com enable students to rediscover old friends and connect with new ones. So, how can we use social network technology in our education system? In my opinion, schools or teachers can use educational social networks like Piazza.com to interact with their students. On Piazza, teachers can manage and assign coursework and monitor students' performance, and students can use the same platform to ask questions and get answers instantly. Platforms like Facebook can connect teachers with their students in a social manner.

In conclusion, students are not waiting for teachers or their schools to integrate technology into their classrooms. Most of these students are taking online courses that can prepare them for tomorrow’s technologically competitive world.

How To Use Technology – 100 Proved Ways To Use Technology

Technology keeps on advancing and it is becoming essential in our lives. Every day, people use technology to improve the way they accomplish specific tasks, and this is making them look smarter. Technology is being used in many ways to simplify every aspect of our lives, and it is being used in various sectors. For example, we use technology in education to improve the way we learn; in business to gain competitive advantage and to improve customer care services and relationships; in agriculture to improve agricultural outputs and to save time; in classrooms to improve the way our students learn and to make the teacher's job easier; in health care to reduce the mortality rate; in transportation as a way of saving time; in communication to speed the flow of information; in home entertainment; and at the workplace to spend less time working and to increase production. There are so many ways in which humans can use technology, and I have listed over 100 ways in which technology is being used in our lives.

BUSINESS COMMUNICATION

Communication is a very important factor in a business. Business owners, managers, and employees need good communication technology to transfer the information needed to make decisions. The flow of information within or outside of the business will determine the growth of that business. It does not matter how big or small the business might be; communication is necessary. For example, business owners need to communicate with their customers on time, with their suppliers or business partners, and with their employees daily to know about the activities in the firm. This sounds like a lot of responsibility, but with technology, all this can be done on the same day with less stress on the business owner. Businesses can use communication technology tools like electronic mail (“email”), mobile videoconferencing, fax, social media networks, mobile phones, and text messaging services to communicate with everyone in a single day. Below I have listed some detailed points on the use of technology in business communication.

1. Use SharePoint or Intranet Networks: Both big and small businesses will find a great need for an internal intranet network. This is a website used by employees and business owners at work only. Intranet websites or portals cannot be accessed from outside the company because they are hosted on a local company server, which helps the business exchange information with its employees without exposing it to the World Wide Web. Many companies have these networks, and employees have intranet emails used for communication at work only. Business managers can easily draft a message and send it to all employees via an intranet network; employees can also use the same network to share information within the business. This process protects information and also facilitates the flow of information within the business. Big companies like Apple, Microsoft, Dell, and IBM still use these intranet networks to communicate with their employees.

2. Use Instant Messaging Services: Many small business owners have found instant messaging to be a valuable and affordable tool that makes communication easy. For quick exchanges, text messaging is far more effective than electronic mail, though with email you can send big data files, which you cannot do with instant text messaging services. Instant messaging can be used when a simple message needs to be passed to any party in the business, whether from the business manager to employees or from employees to the business manager. The good news is that most of these instant messaging services can be used at zero cost, for example Yahoo Messenger, Google Chat, Skype, and other services.

3. Use Electronic mail communication: Electronic mail (“email”) is a default communication technology for every business and organization. On every business card, you will see an email address under the company’s name. For example, if someone owns a Web Agency, you will see an email from the Web Agency in this format (sales@thatwebangency.com). Emails are used to communicate with employees, suppliers, customers, and business managers. Unlike text messaging, emails are for professional messages, and they can be used to transfer big files of data. The size of files that can be transferred via email is determined by your email hosting company, but in most cases it ranges between 1MB – 5GB per file. To look professional, avoid free mail hosting services and make sure you have a customized email address in your company name; it will also help in the marketing of your website.

4. Use Telephone Communication: Just like email, telephones are standard business communication tools. In normal circumstances, businesses have both fixed telephone lines for offices and mobile phones. Fixed telephone lines are used during working hours, and some well-established businesses dedicate a phone assistant to answer any business calls that come in during working hours. People who call on fixed lines are commonly customers or business suppliers. These fixed telephone lines also have voice mail recorders whose messages can be replayed during working hours. Mobile phones tend to be personal gadgets, so communication via mobile phones is commonly between business owners and their business partners or employees.

5. Use Social Media: Both companies and consumers use social media to communicate. Well-established businesses use company-based social networks like Yammer.com; as of now, Yammer.com is being used by more than 200,000 companies worldwide. For those who are not aware of this network, it is an enterprise social network, created for companies and employees to exchange business and work-related information. You can only use this network if you have a custom company email address, so only people with a verified company email address can join your company network.

Then we also have consumer-based social networks like facebook.com. On this network, businesses create customer service pages that they use to interact with their customers in real time. Integrating your business with consumer-based social networks will help improve your customer care service, and it will also help you reach more potential customers.

6. Use Fax machines: Not every business uses a fax machine, but as your business expands and grows bigger, you will find yourself in need of a fax machine. Fax machines are used to send and receive files over a telephone line. Then we also have e-fax machines that receive files from emails. Just like text messaging, a fax machine will deliver a printed message in an instant, although you will have to be at the office to receive this message. Fax machines are one of the first and oldest communication technologies used in business communication.

7. Use Multimedia tools: This is recorded content that can be used in a meeting or by human resource managers to train new employees. Messages are transferred in the form of recorded videos or audio, and they can be accessed via computers, smartphones, or smart whiteboards at work. Very few businesses use this type of communication, though it also has its impact on business communication. Videos or audio messages can be uploaded on intranet networks so that only staff members get access to this media.

8. Use Voice mail machines: Voice mail is commonly known as a message bank. It is a centralized system for storing telephone messages, which can be retrieved during working hours. In most cases, these voicemail systems are installed on fixed business telephone lines which are used by customers and other business associates, so a business that does not operate 24 hours a day can leave its telephone line on voice mail. Then in the morning, the person in charge can retrieve the recorded messages and act immediately.

9. Use Teleconferencing / Video Conferencing Tools: Technology has changed the way business owners communicate with their employees or business partners. Communication technologies like mobile video conferencing, which enable a business owner to hold a business meeting using a smartphone or a tablet like the iPad while traveling, have made business communication easy and flexible. Teleconferencing software like Skype or Vidyo can be used on any smartphone with a webcam.

BUSINESS

10. Use Technology to Save Time: Both small and big organizations use technology to save time. Time is a crucial factor in business. Many business managers use technology to hold meetings via videoconferencing tools, and employees use technology at work to complete tasks on time. Technology is also used to speed up the flow of information within an organization, which helps decision-making among employees and business managers. Some businesses or organizations have automated some sectors, and others have equipped their employees with technological tools like computers to help them speed up their tasks at work.

11. Use Technology To Transfer Information: The rate and speed at which information moves within and outside the organization or business will determine the growth of that business. Well-equipped organizations or businesses have used technology to create centralized data networks. Via these networks, information can be stored either remotely or internally, and employees or managers of that organization can retrieve that information at any time to help them make analytical business decisions. Making decisions in a business is based on facts and data, so with a centralized database of information, the process of accessing and analyzing data becomes simple.

12. Use Technology To Gain a competitive advantage: Business competition is healthy because it results in business growth. During the process of competing for specific markets, customers in that market tend to get the best services as an incentive to win their loyalty. Technology has helped small businesses compete with big, well-structured businesses. Unlike in the past, when some productive technologies could only be accessed by wealthy companies, today small businesses can also use simple technology to gain a competitive advantage. With technology, it is not about who has the best technological tools, but how you use them to serve your clients. We have seen many wealthy companies with advanced technologies losing markets to small businesses, simply because the small businesses made better use of the technology they had to serve their customers' demands.

13. Use Green Technology to save the environment: Our future depends on the survival of our environment. Many green technologies have been developed for future use and development. For example, we have technologies like green electric cars, which will no longer depend on fuel but can be charged with solar power or wind energy while in motion. The more we exploit Mother Nature for natural resources, the more our environment will be at risk. Many technological companies have started changing their manufacturing technologies to reduce air pollution, and they now produce environmentally friendly products like green computers which use less power.

14. Use Mobile Videoconferencing: Communication is one of the most important factors in development. For our small businesses to survive in the future, we need to use advanced communication tools like mobile videoconferencing. With it, you do not have to worry about being late for a meeting, which can have a positive impact on your business growth. Smartphones and portable gadgets like the iPad Mini can make mobile conferencing possible in the future. Just imagine yourself being in a meeting while on a plane or a train. This is one of the smartest communication technologies that should be used for future business development; technological companies like Vidyo.com have made this possible.

15. Use Mobile Learning: iBooks have made learning mobile. Text on iPads, Kindle Fire, and other tablets is clear, and these portable gadgets have big storage space of about 65GB and more, which is enough to keep all your books, videos, and audio notes. Unlike computers, which are heavy to carry, smartphones and mini tablets like the iPad Mini will make it easy for students to learn and access academic information in the future. For now, these technologies are expensive, but tomorrow we shall have more students learning via their mobile gadgets. Not only can you read textbooks via these smart tablets, but you can also order electronic books over the internet and simply download the book after placing the order.

TEACHING AND LEARNING PROCESS

16. Use automated programs: Many teachers have issues with assessing students' work and grades. You might find that one teacher has to grade over 60 students, analyze their work, comment, and suggest areas of improvement. This is a lot of work for one person, and they have to be accurate because any mistake made in a student’s grade can affect their future. We live in an economy where little money is spent on reducing class sizes, so many teachers are stressed out with big classrooms: the workload is heavy, yet the pay is low. So as a teacher, you can use technology to automate some processes. For example, you can use tools like Piazza.com to manage your students’ coursework, track their performance, and assign them work. On the same network, you can create a virtual classroom where students can post and answer questions.

17. Use grammar tutorials and puzzles to teach English: Speaking English and writing in English are completely different. Some students are good at spoken English but very poor at composing a good sentence. These students find it difficult to understand some grammatical principles even when taught by a good teacher. So the best way of making them learn is by giving them grammar tutorials and videos. Technology will help relieve teachers of the burden of attending to each student with a special grammar need. In general, the teacher can use grammar puzzles or games in the classroom, and students will participate in a fun way while still learning. Teachers can also use computer word processing applications to correct students’ grammar; every computer has a word processing application like Microsoft Word.

18. Use tracking software to monitor students' writing skills: Teachers can use writing software like “Essay Punch” to help students learn how to write an informative essay. Essay Punch helps students with guides on how to write a short essay that describes, persuades, or informs. The software comes with a menu of topics; students can choose any topic from this menu and start working on their writing skills. When the essay is complete, the student is guided through re-writing, editing, outlining, organizing, and publishing the essay. Then the teachers can use a record management system to monitor their students’ progress.

19. Use tablets for visual illustrations in the science classroom: Teaching a science classroom requires visual illustrations. It is very difficult to explain every science topic in text format, so teachers can find great use for tablets in the science classroom. Since these tablets are expensive, teachers can have students form groups of 3-4 to share a tablet. The teacher can then control the data delivered to these tablets using an internal network within the science classroom, and use this network to send illustrations and visual data to the tablets.

20. Use the Internet to publish students' work: Teachers can use the Internet to publish the works of bright students; this will inspire other students in the classroom. This work can be published on a classroom blog, slideshare.net, or Google Docs, or it can also be published as an e-book for other students to download. This process will encourage good students to write better essays so that their works can be read by others.

TECHNOLOGY IN THE WORKPLACE

21. Use Technology to improve efficiency at work: Technology can change the way we do most of our work, and it can also reduce the stress caused by the many tasks we have to do in one day. Employees can perform more than one task at a time using technology. For example, a secretary can compose an email to be sent to all employees while calling a supplier or a customer. This employee is using three types of technology: the internet and a computer to compose the email, and a telephone to contact the supplier or the customer.

22. Use communication technology to improve information flow at the workplace: Communication technology improves the way we interact with each other at work. Tools like the internet, text messaging services, telephones, enterprise social networks like Yammer.com, e-fax machines, and many more facilitate the flow of information at the workplace. Decision makers depend on the speed of data flow to make quick decisions that might benefit the company's growth.

CUSTOMER CARE SERVICE:

23. Respond to customer needs on time: Every business survives on its customers; the more clients you have, the more successful your business will become. So it is very important to serve your clients on time and to tailor products and services to their needs. Use internet technology to find out what your customers need: create a company website to collect data from your customers, and make sure that they can contact you directly through it. Respond to your customers’ requests on time. Some companies have full-time online assistants who handle orders, complaints, and suggestions from customers. Build your business with your customers and you will come out the winner.

24. Improve payment systems: The mode of payment is also part of your customer service. Use technology to improve the way people pay for your services or products. Today we have various methods of payment, e.g. PayPal, smart card payments, and mobile phone payments (Google Wallet and Square). If a client likes your service or product, they will want to place an order, so this process has to be very simple. The more payment options you give your clients, the more money you will make, and your clients will be pleased by the good customer service.

WAYS TO USE TECHNOLOGY IN MARKETING

For any business to succeed, it has to market its services or products. However, some advertising media can be too expensive for small businesses. With technology, even small businesses can reach targeted markets and gain a competitive advantage. In past years, I have seen small businesses like Instagram.com gain a competitive advantage over big, well-established tech companies like Facebook and Twitter. This success shows that if you market your product or service well, consumers will come, and they will not mind whether you are small or big as long as you provide what you promise. So let’s see how you can use technology to market your small business and spend less.

25. Use electronic mail marketing: Marketers have often debated the effectiveness of email marketing. Some think it will die very soon, but I think nothing will ever replace privacy, and email communication is private. The best way to engage with your clients and keep your messages out of the spam filter is to create a website and then persuade your readers or customers to subscribe for updates or shopping deals. Once users are subscribed to your emails, they will receive all notifications in their inbox; you can use this opportunity to increase sales by offering special discounts and shopping coupons to your email subscribers.

26. Use social media marketing: The field of marketing has been changed by the wave of social media. Top social media sites like Facebook, Twitter, LinkedIn, Google Plus, and Pinterest drive massive traffic to both small and big businesses. The trick is to define your target market and know which content attracts it. Recent studies show that Pinterest and Facebook are driving lots of traffic to e-commerce sites. On a site like Pinterest, most of the users are women who share things like clothes, shoes, bags, cakes, wedding ideas, and much more. To promote your business on Pinterest or Facebook, you will need to know which content to use. On Facebook, you can run a targeted advertisement for as little as $100 and reach the people you need.

EDUCATION:

27. Online Education: e-Learning has changed the face of education worldwide. Unlike in the past, when students and educators were bound by physical boundaries, today internet technology plays a big role in making education effective. Many colleges and universities provide online professional courses like ACCA, which has helped many students from developing countries gain access to internationally recognized courses and increases their chances of competing for jobs internationally. Adults who want to go back to school have also used online education facilities to study from home after work. Some lessons can be downloaded as podcasts or videos, so students can learn at any time, anywhere.

28. Use of computers in education: To a certain extent, computers help students learn better and they also simplify the teacher’s job. Computers are used to write classroom notes, create classroom blogs, play educational video games and puzzles, access the internet, store academic information and so much more. Many schools have set up computer labs where students are taught computer basics, and then some private schools have equipped their students with computers in the classroom. Teachers use computerized smart whiteboards which can help them explain subjects using visual illustrations, these smart whiteboards can also save teachers' work for later use.

29. Use the Internet for educational research: Both students and teachers use the Internet for research purposes. In most cases textbooks have little information about specific subjects, so students and teachers use the Internet to do extensive research. Search engines like Google.com & Bing.com are being used to find great educational content online. Also, community-edited portals like Wikipedia.org have vast amounts of educational content that can be used by both students and teachers for educational purposes or reference purposes. Video streaming sites like Youtube.com are being used in the classroom to derive real-time visual illustrations or examples on specific subjects.

HUMAN RESOURCE MANAGEMENT:

30. Use Online Recruitment Services: Many companies are using the Internet to recruit professionals. Well-known social media sites like Linkedin.com and Facebook.com have helped many companies discover talented employees. Job search engines like indeed.com also help job listing portals, because talented job seekers use these search engines to find jobs listed on various portals across the internet. Some job portals also require applicants to post videos about themselves and to submit their academic papers and recommendation letters from past employers, which helps the human resource manager quickly form a picture of each applicant. This process saves time, and human resource managers get a chance to meet talented employees.

31. Use Electronic Surveillance to Supervise Employees: When the business gets big, you will end up with many employees and it will be difficult to keep track of them. So some companies have decided to install electronic surveillance devices that can monitor the performance of all employees. It may sound funny, but people tend to perform better under supervision. It is very difficult to find self-motivated employees; many come to their workplace to pass the time and wait for a paycheck at the end of the month, so an electronic surveillance system can help ensure that all employees complete their tasks.

HOW TO USE TECHNOLOGY IN HEALTH CARE

32. Use Technology for Research Purposes: Many healthcare professionals use the internet to search for information. Since this internet can be accessed from anywhere, doctors or nurses can do their research work at any time. Even though some of the information published online is not that accurate, the little available approved information can help nurses, doctors, and other healthcare professionals dig deep into certain causes. Popular healthcare information-based websites like www.webmd.com and www.mayoclinic.com have played a big role by publishing relevant healthcare information online. Data published on these two portals is written by experienced doctors and this makes the data relevant.

33. Use Technology to improve treatment and reduce pain: The use of technology in healthcare facilities has changed the way patients are being treated, it speeds up the process of treating a patient and it also helps remove any pain that might cause discomfort to the patient. Machines are being used in surgical rooms and this has reduced the human risks while performing surgery.

34. Use Technology to improve patient care: Technology is being used to manage patient information effectively; nurses and doctors can easily record patient data using portable devices like tablets. This data can be stored on an internal database, so it becomes easier to mine data about each patient. Doctors always depend on the history of patients, so this stored information on an internal database within the hospital will make it simple for Doctors to make quick decisions.

DECISION MAKING PROCESS:

35. Use Technology to Mine Data: Once information is captured and processed, many people in an organization will need to analyze that information to perform various decision-making tasks. This data can be stored in a database which can make it simple for users to retrieve it from the database onto their computers to make quick decisions. With the help of a data manipulation subsystem, users can be in a position to add, change, and delete information on a database and mine it for valuable information. Data mining can help you make business decisions by giving you the ability to slice and dice your way through massive amounts of information.

36. Use Technology to Support Group Decision-Making: Information technology brings speed, vast amounts of information, and sophisticated processing capabilities to help groups use this information in the process of making decisions. Information technology will provide your group with power, but as a group, you must know what kinds of questions to ask of the information system and how to process the information to get those questions answered. To make all processes simple, you can use a group decision support system (GDSS), which facilitates the formulation of and solution to problems by a team. A GDSS facilitates team decision-making by integrating things like groupware, DSS capabilities, and telecommunications.

HOW TO USE TECHNOLOGY IN YOUR CLASSROOM

37. Visual Illustrations: Teachers can use technology in the classroom by integrating visual illustrations while teaching. Many times students get bored with the normal text-based learning process. It is very easy to lose interest in text rather than images or videos. Teachers can use advanced smart whiteboards and projectors to derive live visual 3D images and videos. These smart boards can also access the internet, so teachers can use websites like YouTube, Google Images, and Pinterest, to derive visual examples about any subject. Students will enjoy learning in this form and they can easily remember each point explained using visual images. Teachers can also tell students to use these smart whiteboards to explain points to their fellow students, some students learn better when taught by a fellow student.

38. Create a classroom blog: This might sound advanced to some teachers or students, but it is very simple to own a classroom blog. You can use free blog hosting services like Blogger.com and WordPress.com. With these free services, you will not need to worry about domain renewals or website hosting, because your classroom blog will be hosted under a free subdomain, for example myclassroom.wordpress.com. Teachers can post coursework and assignments on these blogs, or create debate topics which students debate using commenting systems like Disqus.com.

39. Video games to solve puzzles: Students can learn through educative video games or puzzles, and subjects like English and Math can be taught using video games and puzzles. Teachers can create game challenges among students and reward points to winning students or groups. If the classroom has computers and the internet, the teacher can tell their students to form small groups of 3-4 students per group, and the teacher assigns each group a challenge. This can make learning fun and students will learn better.

40. Use computers to Improve writing skills: Teachers can tell their students to write sentences or classroom articles which can be shared with the classroom. Computers have advanced word processing applications that can be used in the writing of articles, this word processing application has an inbuilt dictionary that can auto-correct spelling mistakes and also suggest correct English terms. During this process of article writing using a computer, students get to learn how to spell, how to type, and how to compose an article. Maybe this is the reason why we have so many bloggers nowadays.

41. Encourage Email Exchange: Teachers can encourage their students to exchange email contact with their friends in the classroom and with other friends from other schools. This process helps students create relationships with students who take the same classes as them, and these students can exchange academic information like past exam papers or homework assignments which can help them learn and socialize with relevant friends. Also, teachers can communicate with their students using email, which in return creates a strong bond between teachers and students.

42. Create podcast lessons: Some teachers might find this difficult because it requires time to record podcast messages or lessons, but once composed, the teacher will have more time to do other educational activities. Once a podcast lesson is recorded, it can be uploaded on a classroom blog where students can download it and store it on their smartphones or tablets. Podcast lessons are convenient because a student can listen to a podcast lesson while doing housework.

43. Use text message reminders: Many times students get overwhelmed by the amount of classroom work they have to complete, and the endless tests and exams they have to do. Sometimes they even forget to attend some lessons or they submit coursework when it’s too late which affects their end-of-term grades. Teachers can use mobile phone applications like remind101.com, to create text messages which remind their students to submit coursework or to prepare for a test.

IN THE BANK:

44. Use of Plastic Money Cards: Technology has played a big role in changing the face of the banking industry. In the past, carrying big sums of money exposed you to the risks of moving around with lots of cash; today, plastic money cards are used to make transactions of any kind. Many banks issue Visa cards or credit cards which can be used worldwide to purchase products or make payments of any sort. The same cards can be used online, because most e-commerce websites accept payments with Visa or debit cards. When the owner of a Visa card makes a purchase, the money is transferred from their bank account to the merchant’s account, so the whole process of exchanging money is electronic.

45. Use of Mobile Banking Services: This service has helped many developing countries in Asia and Africa. Many banks and information technology companies have enabled people in third-world countries to use mobile phones as banking tools. Most rural areas have no banks, simply because they have poor infrastructure. So banks and telecom companies have invested in mobile phone banking services which enable people to transact business using mobile phones. Users of these mobile money services can save money using a mobile phone, or withdraw cash via a mobile money agent in their area. Top mobile phone service providers like Airtel and MTN have played a big role in facilitating mobile banking in Africa and Asia.

46. Use of Electronic Banking: Many banks have simplified the way their customers access personal account information and transfer money from one account to another. Banks use Internet technology to enable their customers to request bank statements or transfer money. This has made the banking industry so flexible and it also saves time and money. Since most of this work is done by web technologies and other banking technologies, banks cut costs on human labor which increases their profit returns.

BUSINESS ORGANIZATION:

Technology can be used in various ways to facilitate business organization. For example, it can be used to organize information, to aid data transfer and information flow within an organization, and to process, track, and organize business records. Without technology most businesses would be a mess; just imagine going through the trouble of writing data on paper and keeping large piles of files. So in my view, technology helps businesses operate effectively. Below I have summarized the main uses of technology in business organizations.

47. Use Technology to Speed up the Transfer of Information and Data: The rate at which information flows within a business determines how fast things get done. If the flow of information from one level to another is slow, the business will be slow and inefficient and customers will not be served on time, which can harm the business or even give its competitors a chance to gain strength in the market. But if information moves easily and fast, business managers and employees will find it easy to make decisions, customers will be served on time, and the business will gain a competitive advantage. So how does technology facilitate information flow within a business? A business can use tools like intranets to aid the flow of information internally. It can also use external networks, which require a public website and email, to move information into and out of the business; customers can use email or website contact forms to make inquiries or place orders. Businesses can also use centralized data systems to improve the storage of data and to grant remote or in-house access to it. Banks use centralized data systems to deliver information to all customers via their local bank branches, ATMs, the internet, and mobile phones.

48. Use Technology to Simplify communication in an organization: For any organization to be organized and efficient, they have to use communication technology tools like emails, e-fax machines, videoconferencing tools, telephones, text messaging services, internet, social media and so much more. Communication in a business is a process and it also helps in the transfer of information from one level to another. For a business to stay organized and serve its customers well, it has to use effective communication tools. The customer service departments must be in a position to solve customers’ problems on time, orders are supposed to be fulfilled on time. Business managers can use technology to easily allocate work to specific employees on time.

49. Use Technology to Support Decision Making: Since technology makes the transfer of information fast and simplifies communication, employees and business managers find it easier to make quick decisions. To make decisions, employees need verified facts about the subject or customer in question. For example, if an accountant wants to know how much money customer X owes the company, they retrieve that customer's data from a centralized database within the organization. This data shows the spending and purchasing patterns of customer X, and if it was stored using accounting software, the system will clearly show the appropriate figures. This saves the accountant and customer X time, and it helps the accountant make a quick decision based on facts.
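
As a rough illustration of the accountant's lookup, here is a minimal Python sketch using the standard sqlite3 module. The `invoices` table, its columns, and the sample figures are assumptions made up for this example; a real accounting system will have its own schema.

```python
import sqlite3

def outstanding_balance(conn, customer):
    """Return what a customer owes: total invoiced minus total paid."""
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) - COALESCE(SUM(paid), 0) "
        "FROM invoices WHERE customer = ?",
        (customer,),
    ).fetchone()
    return row[0]

# Hypothetical in-memory database standing in for the company's central one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (customer TEXT, amount REAL, paid REAL)")
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [("X", 500.0, 200.0), ("X", 150.0, 150.0), ("Y", 80.0, 0.0)],
)

print(outstanding_balance(conn, "X"))  # 300.0
```

Because the figure comes from one query against the shared database, every department that asks the same question should see the same answer.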

50. Use Technology to Secure and Store Business Data: Just imagine a business where you have to record everything on paper and then file each paper. That would be a waste of time and resources, and the data would not be safe, because anyone could access those hard copy files. With technology, every process is simplified. I remember when I was still working for a computer service company: every engineer had an account on the company's internal database, where they could access the electronic job cards they had to fill in whenever they finished a job. This data could be accessed by the workshop manager, who could follow up with customers to ensure that the job was done right. Once a job card was submitted for review, engineers had no permission to re-edit the data; this permission was left to the workshop manager, so the process was organized and secure. Many businesses are using internal databases and networks to simplify data transfer and to ensure that their data is well secured and stored.
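
The job card rule described above (engineers fill in a card, but only the workshop manager may change it after submission) can be sketched in a few lines of Python. The class, the role names "engineer" and "manager", and the sample users are hypothetical; this is not how any particular system implements it.

```python
class JobCard:
    """A job record that is locked to engineers once submitted for review."""

    def __init__(self, engineer, notes=""):
        self.engineer = engineer
        self.notes = notes
        self.submitted = False

    def edit(self, user, role, notes):
        # Before submission the assigned engineer may edit;
        # after submission only a manager may.
        if self.submitted:
            allowed = role == "manager"
        else:
            allowed = role == "manager" or user == self.engineer
        if not allowed:
            raise PermissionError(f"{user} may not edit this job card")
        self.notes = notes

    def submit(self, user):
        if user != self.engineer:
            raise PermissionError("only the assigned engineer can submit")
        self.submitted = True


card = JobCard("alice")
card.edit("alice", "engineer", "Replaced power supply")
card.submit("alice")
try:
    card.edit("alice", "engineer", "Changed my mind")
except PermissionError as err:
    print(err)  # alice may not edit this job card
card.edit("bob", "manager", "Verified with customer")
```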

AGRICULTURE:

53. Use Technology to Speed up the Planting and Harvesting Process: Preparing farmland using human labor can take a lot of time, so many large-scale farmers have resorted to technological tools like tractors to cultivate and prepare farmland. Once the farmland is prepared, farmers can use further tools for planting, such as cluster bomb technology adapted for seed planting. When the crops are ready to be harvested, the farmer uses another tool such as a combine harvester. As you have seen, the whole process of preparing the field, planting, and harvesting is done by machines. This can be expensive for small-scale farmers, but large-scale farmers will save time and money in managing the whole process.

54. Irrigate crops: Farmers in dry areas that receive little rain use technology to irrigate their crops. Water is an essential factor in plant growth; it contributes almost 95% towards plant growth. Even if the soils are fertile, or the plants are genetically engineered to survive desert conditions, water will still be needed. Farmers can use automated water sprinklers which can be programmed to irrigate the farm at specific times of the day. Water pipes can be laid across the farmland, with sprinklers scattered all over the farm drawing water from the pipes. Farmers can add nutrients to this water, so that as plants get irrigated they also receive important nutrients which enhance their growth.

55. Create disease and pest-resistant crops: Genetic engineering has enabled scientists to create crops that can be resistant to diseases and pests. They have also succeeded in engineering crops that can survive in desert conditions, and this has helped many farmers in drought areas like Egypt to grow various cash and food crops, which in return boosts their income. However, some farmers have been reluctant to use these genetically engineered crops because they fear that the crops can damage their farm soils, and because these crops do not produce seeds that can be harvested and planted again. To some extent this is true. In my country, I have seen these engineered oranges: they look nice and they are big, but they have no seeds, so if you want to replant, you have to go and buy a full-grown plant, which is sometimes expensive. This whole process does not sound logical when it comes to farming.

AT YOUR HOME:

56. Home Entertainment: Technology has completely changed the way we entertain ourselves at home. Many advanced home entertainment technologies have been invented, and they have improved our lifestyles. Examples include 3D HDMI televisions, whose clear images have improved the way we enjoy movies; video games, which keep our kids entertained at home, and some of which are educational, so our kids solve puzzles while having fun; advanced home theater systems for playing clear music from the iTunes music store; fast broadband internet, which we use to stream YouTube videos on tablets; and electric guitars and pianos, which we use to play our own music.

57. Use it to improve Home Security: Technology is being used to improve on our home security. Home spy technologies will enable you to keep track of what is going on in your home while at work or on holidays, this spy technology can be installed on your smartphone device or tablet, and then connected to the spying webcam device at home using the Internet. You can also use hardware home alarm systems, which can be triggered when something wrong happens at home. For example, the alarm system can be connected to report any forced entry into your home, or it can be set to report a fire outbreak in the house.

58. Save Energy: With the increasing cost of living, it has become a must to use technology to save energy. Since we use power to do almost anything at home, it is advisable to opt for technological tools that consume less energy. If you use energy-saving electronic gadgets in your home, your bills can be cut by as much as half. For example, you can replace your electric cooker with a gas cooker, since gas is cheaper than power; you can use energy-saving bulbs; you can use a solar water heater; and you can put all your home lights on solar power.

IN ACCOUNTING:

59. Data Security and storage: Accounting as a process deals with analyzing the financial data of a business or an organization. Technology helps keep this data safe and ensures that it can be retrieved at any time by employees in the financial department. Financial information is very sensitive, so it should not be accessible to just anyone in the business or organization. Only people with experience in accounting should have access to this data, so securing it on an encrypted data server is very important. Many accountants have undergone special technological training to learn how to use various accounting technologies like computers and accounting software.

When it comes to data storage, many technological companies like Dell, Microsoft, and Apple have devised high-end data servers that can store sensitive data for their clients, in this case, these servers are heavily protected from experienced hackers who can take advantage of your financial data.

60. Use Technology to be accurate: Unlike humans, accounting software and computers are accurate as long as they are used in the right way. Accounting deals with detailed data and record keeping. Data processed during the accounting process is used to project business growth, and it also helps in making decisions in an organization. Technology has so far proved very effective when it comes to data processing and storage. Simple technological tools like calculators and computers are used in almost every business. Technology also reduces the number of errors made while analyzing data; humans cannot deal with figures and data for a long period without making errors.

IN AFRICAN SCHOOLS

61. Use Computers in African classrooms: Africa has been left behind for years, but now technology is spreading all over the world, and African schools have started using technology in their Curriculum which has brought excitement to African students. Some African schools have created computer labs where students get taught basic computer skills like typing, using the internet to do research, and using educational video games to solve academic puzzles. This process has improved the way students learn in African schools. Big organizations like UNICEF have facilitated the One Computer per Child program which ensures that African children get access to computers and also learn how to use them.

62. Replace blackboards with smart boards: Not all schools have managed to achieve this, because of the poor infrastructure in Africa. Many schools have no power, yet these smart boards need power. However, some urban-based schools have the opportunity to use smart boards in the classroom. Students can learn easily via these smart boards because teachers use visual illustrations taken directly from the internet. Some African teachers use YouTube videos to provide examples on specific subjects, and this has helped African students learn easily and get exposed to more information. Read more about the use of ICT in schools without electricity at worldbank.org.

63. Use the Internet in African schools: The Internet is a very crucial information technology tool that helps in the search for information. Most urban-based African schools have free wireless internet and some have internet in their computer labs. This internet helps students do educational-related research, some of these students have also found great use of social networks like Facebook.com to connect with their former schoolmates and they also use social sites like ePals.com to get educational information shared by students from various schools. Internet technology has also helped students in Africa study online, for example, accounting courses like ACCA can be done online and this has helped African students get access to advanced educational courses.

IN A BAKERY:

64. Use Temperature Sensors to Monitor Room Temperature in a Bakery: Bakeries can use technology to monitor the temperature of baking rooms. The quality of bakery products like bread or cakes will be determined by time and temperature. If the temperature is too high or too low, the products will be of low quality. So most advanced bakeries have employed automatic temperature sensors that can report any temperature drop or rise. These temperature sensors can send information to the bakery operator and they will act immediately if there is any temperature change within the baking room. Every baked product has a specific temperature under which it has to be baked, for example, the amount of heat used to bake bread is not the same heat used when baking a cake. However, it is very difficult for humans to measure temperature regularly, so this process can be managed by technology.
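
The alerting logic behind such a sensor can be sketched in Python as follows. The target temperatures and the tolerance are illustrative assumptions only, and the readings are hard-coded here; in practice they would come from whatever sensor hardware the bakery uses.

```python
def check_temperature(reading_c, target_c, tolerance_c=5.0):
    """Return an alert message if the reading is outside target +/- tolerance, else None."""
    if reading_c > target_c + tolerance_c:
        return f"ALERT: {reading_c:.1f} C is too high (target {target_c:.1f} C)"
    if reading_c < target_c - tolerance_c:
        return f"ALERT: {reading_c:.1f} C is too low (target {target_c:.1f} C)"
    return None

# Hypothetical targets; real values depend on the recipe and the oven.
TARGETS_C = {"bread": 220.0, "cake": 175.0}

# Simulated sensor readings as (product, temperature in Celsius).
readings = [("bread", 221.5), ("bread", 205.0), ("cake", 190.0)]
for product, reading in readings:
    alert = check_temperature(reading, TARGETS_C[product])
    if alert:
        print(product, alert)
```

Keeping a separate target per product reflects the point above that bread and cake are not baked at the same heat.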

65. Use Technology to Produce quality bakery products: Technology can be used in a bakery to produce high-quality products. We have seen that electronic sensors can monitor the temperature of baking rooms, and they can also determine how long products should stay in the baking machine. Because the process is automated and consistent, bakeries that use this technology tend to have the best products. We also have the Hefele turbo flour sifting machine, which helps in cleaning the flour. The flour is fed into the rotating sifting wall and is not exposed to any grinding effect, which means the flour is cleaned without losing its texture or heating up. This automation helps save time and increase production.

IN A CLOTHING BUSINESS:

66. Inventory: A clothing company needs to keep track of its inventory, because if it runs out of a hot-selling fashion trend, the business can suffer a big loss. Sales managers therefore use inventory tracking software to learn which fashion trends sell most and how many people are demanding them. With this information, the clothing store manager can stock the top-selling trends that are in demand, which will increase sales. This inventory tracking software saves the clothing business owner time and money. In simple business economics, supply should match demand.
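
A minimal Python sketch of the two questions inventory software answers (what sells most, and what is about to run out) might look like this. The item names, stock levels, and reorder threshold are invented for illustration.

```python
from collections import Counter

def top_sellers(sales, n=3):
    """Return the n best-selling items as (item, units_sold) pairs."""
    return Counter(sales).most_common(n)

def items_to_reorder(stock, sales, threshold=5):
    """Return items whose stock, after the recorded sales, falls below threshold."""
    sold = Counter(sales)
    return sorted(item for item, qty in stock.items() if qty - sold[item] < threshold)

# Hypothetical data: one entry in `sales` per unit sold.
sales = ["denim jacket", "scarf", "denim jacket", "boots", "denim jacket", "scarf"]
stock = {"denim jacket": 6, "scarf": 20, "boots": 4}

print(top_sellers(sales, 2))           # [('denim jacket', 3), ('scarf', 2)]
print(items_to_reorder(stock, sales))  # ['boots', 'denim jacket']
```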

67. Point of Sale: Since clothing stores make sales all day, they need technology to help with cash registering and order tracking. Many advanced clothing stores use cash register software, which helps in adding up total sales per day, calculating tax, processing coupon codes, and scanning item bar codes. It also updates inventory records after each purchase. Stores also use technology to simplify the way customers pay for items; for example, most clothing stores accept card payments such as debit cards, and they also operate online stores that accept PayPal payments. This improves their customer service and also results in increased sales.
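The checkout steps listed above (totalling items, applying a coupon, calculating tax, and updating inventory) can be sketched as follows. This is a minimal example, not the behavior of any particular cash register product; the SKU names, the tax rate, and the percentage-based coupon are assumptions made for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict

CENT = Decimal("0.01")


def checkout(
    cart: Dict[str, int],
    prices: Dict[str, Decimal],
    inventory: Dict[str, int],
    tax_rate: Decimal,
    coupon_percent: Decimal = Decimal("0"),
) -> Dict[str, Decimal]:
    """Price a cart, apply a percentage coupon and tax, and reduce stock in place."""
    # Refuse the sale before changing anything if an item is out of stock.
    for sku, quantity in cart.items():
        if inventory.get(sku, 0) < quantity:
            raise ValueError(f"not enough stock for {sku}")

    subtotal = sum((prices[sku] * qty for sku, qty in cart.items()), Decimal("0"))
    discount = (subtotal * coupon_percent / 100).quantize(CENT, rounding=ROUND_HALF_UP)
    taxable = subtotal - discount
    tax = (taxable * tax_rate).quantize(CENT, rounding=ROUND_HALF_UP)

    # Update inventory records after the purchase.
    for sku, quantity in cart.items():
        inventory[sku] -= quantity

    return {"subtotal": subtotal, "discount": discount, "tax": tax, "total": taxable + tax}
```

Decimal is used instead of float so that money amounts are rounded to the cent predictably.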

68. Promotion: The clothing business has a very low barrier to entry, which means that competition is very high. So for any clothing store to break through, it has to use technology to gain a competitive advantage over other clothing stores. For example, a clothing store can create an online store, connect that store to top social networks like Facebook, and then give away free shopping coupons to its fans on Facebook. Visual social networks popular with fashion lovers, like Pinterest, can also help a small clothing store promote itself and give away shopping gifts to Pinterest users. This process will not cost a small clothing store more than $1,000, yet it will gain the store a big audience of fashion lovers and also increase its sales. Look at small fashion e-commerce sites like nastygal.com, which has gained a competitive advantage over Amazon.com in the fashion world.

IN ARCHITECTURE

69. Use Web Technologies: Many architects use web technologies, like email and the internet, to perform various tasks. Web technologies are used in transferring, storing, filtering, and securing data, all of which enables architects to organize and easily access architectural data. Architects can use screen-sharing software like Skype to discuss drawings with other parties or their customers. Video conferencing tools can be used to discuss a project with clients, which simplifies the way architects do their job. A website can also be created to showcase past work, and this website can be used to acquire new customers.

70. Use Computers to Make Drawings: Most architects find computers very useful when making sketches of their drawings. Computer-aided design (CAD) software is used to produce these sketches. The architect can then use a projector and a smart board to share a sketched plan with workmates, who can suggest areas of improvement. Computers can also be used as storage devices for all architectural works. It is always advisable to keep a soft copy of the final architectural drawing, and then use the printed copy for on-site development.

71. Use Large Format Printers to Print Out Drawings: After using a computer to sketch a drawing, the architect will need a large format printer to print out a hard copy of that sketch. In most cases, 24 x 36 inch sheets are used for these print-outs. Many copies can be made so that some are sent to the customer and others are shared with fellow architects, who can suggest areas of improvement.

72. Use Digital Cameras: Before the architect starts sketching a new plan for any construction, they will need to take pictures of the building or ground to be worked on; this helps them during the process of planning a new design. Photographs help architects remember important site characteristics that can be referred to when creating a new design.

73. Use Laser Measuring Tools: Every architect needs good measuring tools to ensure the accuracy of their work. Laser measuring tools are more accurate than a normal ruler, though some architects might prefer an ordinary ruler while drawing sketches on paper. These measurements are very important because construction engineers will rely on them to build to the required standard.

IN ART AND DESIGN:

74. Use technological tools to create sculptures: Artists use technological tools to create sculptures and other art pieces. For example, a Flexcut mallet tool can be used to shape large amounts of wood. These mallet tools come in different sizes, and each has its own role during the process of wood carving. Each mallet tool has a high-carbon steel blade that is attached to an ash handle. Other tools include shaping and patterning sculpting thumbs, loop and ribbon tools, and many more. Sculpting tools can be bought at dickblick.com.

75. Use the Internet to Market Art Work: Before the Internet, it used to be very difficult for good artists to market their creative works. Many artists would die before selling their masterpieces, and museums would take on the role of hunting for and discovering these great artworks. Today, internet technology enables artists to showcase their artworks online. Some social networks, like www.500px.com, allow artists to showcase their works in the form of photography and also to sell these works through the network. However, some artists do not want to expose their works online because they fear that someone might take advantage of their creativity and copy their art pieces.

76. Use technology to get Inspiration: Art is aided by inspiration; once an artist is exposed to various experiences, their mind will create an artwork out of those experiences. The internet helps in this process of creating ideas. A lot of information is published online in the form of videos or pictures, so artists can use this material to create meaningful art pieces. Young artists also use the internet to study the works of professional artists who are beyond their reach. Some museums have published their artworks as pictures online, so any artist can access them from anywhere and learn some basics from great artists.

77. Use laser sensors to secure art pieces in museums: Art theft is on the increase because of the extraordinary prices paid for art pieces. According to The New York Times, Pablo Picasso’s Nude, Green Leaves and Bust sold for $106.5 million, and the value of just this single art piece could attract art thieves to any museum that holds it. This has forced art museums to use electronic laser sensors that detect movement and sound, then trigger alarms to alert armed guards if anyone tries to get close to the artworks. To some extent, this has helped in securing some great artworks.

IN BADMINTON

78. Use Advanced Badminton Rackets: Badminton is a cool game with many fans, and technology has helped to advance it. Advanced badminton rackets help players enjoy the game and play for longer hours. The newer rackets have a lighter frame, which makes them more flexible during the game. These frames are made of elastic particles mixed with carbon fibers, which enable the player to swing faster while the racket puts less pressure on the player's hands. These rackets also have shock-less grommets, which will not tear or pop out during the game under the pressure of the shuttlecock on the racket, and this improves the game of badminton.

IN BASKETBALL:

79. Use Basketball Shoe Technology: In most cases when you think of sports shoes, you will not think of how technology is used in the development of basketball shoes. However, basketball is a motion sport that requires players to jump up and down, so the shoes they wear have to support these movements. When basketball shoes are manufactured, the design is focused on making them light and breathable so that they support the players well and prevent injuries that might occur while jumping. These shoes prevent injuries in various ways; for example, they provide adequate ankle support, and they lace up to the top, which provides a snug fit. Though these technologically advanced basketball shoes come at a high cost, all NBA players are advised to wear them. Players are also supposed to have more than one pair, because playing in one pair all the time will cause the sneakers to wear out and start putting pressure on the player's ankles and feet, which might expose them to injuries during a match.

80. Use broadcasting technology: Many basketball fans are able to watch live NBA basketball games at home on their televisions. Well-known cable television companies like CBS INTERACTIVE have managed to broadcast these NBA basketball games live using their advanced 3D broadcasting technologies. As a fan of basketball, I have benefited from this technology, because I have no worries about missing my favorite game.

BEAUTY SALONS:

81. Marketing: In the past, your next-door beauty salon was known only by its own community. Today things have changed: beauty salons have caught the social marketing fever, and many of them are using social networks like Facebook, Yelp, and Foursquare. The most important social networks for these local businesses are Yelp.com and Foursquare.com. With Yelp.com, customers can post reviews of any beauty salon in their area, and other Yelp users can rely on those reviews when searching for recommended beauty salons nearby. Foursquare.com, in turn, will suggest beauty salons that your friends on Facebook like. For example, if a beauty salon owns a Facebook page and promotes itself well to targeted users, its customers will click the Like button if they appreciate the services offered at that salon. So if your friend Likes a specific salon in your area, the next time you use Foursquare, it will use the data from Facebook and suggest beauty salons liked by your friends in that particular location. In this way, Foursquare acts as a localized recommendation service powered by your friends' "Likes".

82. Use SMS REMINDERS: Due to the huge competition in the beauty salon business, it is advisable to stay in touch with your clients all the time at an affordable cost. If you want to gain a competitive advantage and increase the number of customers for your beauty salon, you will need to integrate SMS technology into your business. Launch SMS campaigns with offers to your existing customers, and also promote incentives to new customers using SMS advertisements. It is very cheap to send bulk SMS; all you need are the contacts of your clients and other targeted customers. Satisfy your customers by offering the best service, and keep them engaged all the time. Customer service is a very crucial factor in business growth. Some of the services you can offer via SMS include appointment reminders, holiday promotions, coupons for discounts, suggestions of new styles for your clients, and much more.
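One of the SMS services mentioned above, the appointment reminder, can be sketched as follows. The example only prepares the reminder texts for appointments starting within the next 24 hours; actually sending them would require an SMS provider, which is deliberately left out. The appointment fields (name, phone, service, start) and the function name are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def due_reminders(
    appointments: List[Dict],
    now: datetime,
    window_hours: int = 24,
) -> List[Tuple[str, str]]:
    """Return (phone, message) pairs for appointments starting within the window."""
    cutoff = now + timedelta(hours=window_hours)
    reminders = []
    for appointment in appointments:
        start = appointment["start"]
        if now <= start <= cutoff:
            when = start.strftime("%d/%m/%Y at %H:%M")
            text = (
                f"Hi {appointment['name']}, a reminder of your "
                f"{appointment['service']} appointment on {when}."
            )
            reminders.append((appointment["phone"], text))
    return reminders
```

Each returned pair could then be handed to whatever bulk SMS service the salon uses.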

83. Use Salon Management Software: Salon management software will help you manage your salon effectively. It can help you manage appointments, financial records, inventory, and payroll. With this software you can build a list of clients and track their spending patterns whenever they come to your salon, which will help you customize packages for them and improve your customer care. The cost of this software varies depending on the functions a client needs, so you have to specify what you need, but in most cases a standard package with basic features can cost as little as about $100. If you want to try this software out, visit melissamsc.com

BIOLOGY CLASSROOM

84. Use Visual Illustrations: Biology teachers have found technology very useful in their classrooms. They use smart boards and projectors to present live examples in visual form. Biology is a largely practical subject, and for students to understand some biological concepts, images and video illustrations have to be used. Let’s assume students are learning about the human heart and the way it works. Text or verbal explanations will not work well in a lesson like this, so the teacher has to use a smart whiteboard to show visual illustrations of how the human heart functions.

HOW TO USE TECHNOLOGY IN CAREER COUNSELING

85. Use Computers in Career Counseling: Choosing the right career for your future is a big decision. In many cases we make decisions based on facts, and computers are good technological tools for storing and analyzing facts. Many career counselors use computers to show their clients facts about specific careers, so the counselor and the client can go through the data together. This data might include the number of people competing for that particular career in the market, how many companies offer positions in that career, how much money is paid, and the challenges and opportunities of that specific career. To simplify their job, career counselors use computer guidance systems such as the System for Assessment and Group Evaluation (SAGE) and the Computerized Career Assessment and Planning Program (CCAPP).

86. Use the Internet in Career Counseling: Sometimes you will have no access to a real career counselor; sometimes you might not even have the money to pay for one. This is where the internet comes into play. You can use the internet to research different careers. Use top search engines like Google.com, Bing.com, Yahoo.com, or Ask.com to get specific information about any career: which companies might hire you, how much they pay based on the average salaries at that company, the risks and opportunities involved if you pursue that career, and much more. All this information is available online. You can also use job listing portals like indeed.com to search for salaries and jobs in your area.

IN CHURCH:

87. Use the Internet to deliver church Sermons: As the world develops, more and more people are getting busy and becoming very attached to their careers. The cost of living is also increasing, so Christians find themselves working on Sundays. To keep up with their congregations, churches have decided to use the internet to reach masses of Christians across the globe. Well-known pastors like Joyce Meyer are using the internet to reach millions of Christians (Joycemeyer.org), so even while Christians are at work, they can use their smartphones, computers, or tablets like iPads to access spiritual content in the form of videos, audio, or text.