Cataloguing Strategic Innovations and Publications

Unleashing the Dynamic Power of Event-Driven Applications: A Symphony of Real-Time Responsiveness

In the ever-evolving landscape of technology, traditional software architectures often fall short of capturing the dynamic essence of real-world interactions. Enter event-driven applications – a paradigm that transforms software into a responsive symphony, orchestrated by the occurrences and triggers that shape our digital experiences. This blog post embarks on a journey through the intricate tapestry of event-driven architecture, exploring its concepts, advantages, challenges, and relevance across industries.

Understanding Event-Driven Architecture: At its core, event-driven architecture is a symphony of interactions. Imagine an ecosystem where every occurrence – whether it's a user's action, a sensor reading, or an external trigger – sparks a chain reaction. Events act as the building blocks, initiating responses and orchestrating a dance of components. The architecture embraces the dynamic nature of our world, where events and reactions form a compelling narrative.

Key Concepts and Technologies: Event-driven applications are guided by key concepts:

- Events: Occurrences or triggers that prompt actions.

- Publish/Subscribe: A mechanism where events are published by senders and subscribed to by receivers.

- Message Brokers: Middleware that manages the distribution of events.

- Asynchronous Processing: Non-blocking execution of tasks to ensure responsiveness.

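Technologies like Apache Kafka, RabbitMQ, and AWS Lambda play pivotal roles in shaping event-driven applications. Kafka is a distributed event-streaming platform, RabbitMQ is a message broker that queues and routes messages, and AWS Lambda runs functions in response to events. Together, tools like these cover communication between components, message queuing, and real-time event processing.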
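To make the key concepts above concrete, here is a minimal sketch of publish/subscribe in plain Python. The `InMemoryBroker` class and the `order.placed` topic are made up for illustration and are not the API of Kafka, RabbitMQ, or any other product. Delivery here is a synchronous function call, whereas a real broker would deliver over the network and usually asynchronously.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class InMemoryBroker:
    """Toy message broker: routes each published event to its topic's subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Deliver payload to every subscriber of topic; return the delivery count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


broker = InMemoryBroker()
received: List[str] = []

# Two independent subscribers react to the same event type.
broker.subscribe("order.placed", lambda e: received.append(f"email:{e['order_id']}"))
broker.subscribe("order.placed", lambda e: received.append(f"stock:{e['order_id']}"))

delivered = broker.publish("order.placed", {"order_id": 42})
print(delivered, received)
```

The publisher never refers to the subscribers directly. It only knows the topic name, which is the loose coupling the architecture relies on.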

Approaches and Advantages: Event-driven applications offer a canvas for innovation through two widely used patterns, which are often combined:

- Event Sourcing: Capturing all changes to an application's state as a sequence of events.

- CQRS (Command Query Responsibility Segregation): Separating the read and write operations, optimizing the application for each task.
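The two patterns above can be sketched together in a few lines. This is an illustrative toy, assuming a made-up bank-account domain: `AccountWriteModel` plays the command side and appends events to a log, while `project_balances` plays the query side and builds a read model from that same log. All names are hypothetical, and a real system would persist the log durably.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    kind: str      # "deposited" or "withdrawn"
    account: str
    amount: int


class AccountWriteModel:
    """Command side: validates commands and appends events to the log."""

    def __init__(self) -> None:
        self.log: List[Event] = []

    def balance(self, account: str) -> int:
        # State is never stored directly; it is derived by replaying the log.
        total = 0
        for e in self.log:
            if e.account == account:
                total += e.amount if e.kind == "deposited" else -e.amount
        return total

    def deposit(self, account: str, amount: int) -> None:
        self.log.append(Event("deposited", account, amount))

    def withdraw(self, account: str, amount: int) -> None:
        if amount > self.balance(account):
            raise ValueError("insufficient funds")
        self.log.append(Event("withdrawn", account, amount))


def project_balances(log: List[Event]) -> Dict[str, int]:
    """Query side: a read model built from the same event log."""
    balances: Dict[str, int] = {}
    for e in log:
        delta = e.amount if e.kind == "deposited" else -e.amount
        balances[e.account] = balances.get(e.account, 0) + delta
    return balances


bank = AccountWriteModel()
bank.deposit("alice", 100)
bank.withdraw("alice", 30)
bank.deposit("bob", 5)
print(project_balances(bank.log))
```

Because the log is the source of truth, the read model can be thrown away and rebuilt at any time, or reshaped for a new query, without touching the write side.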

Advantages of event-driven architecture:

- Real-Time Responsiveness: Applications react to events as they arrive rather than on a polling schedule, creating a dynamic user experience.

- Scalability: Components can be scaled individually to manage varying workloads.

- Loose Coupling: Components interact independently, enhancing modularity and maintainability.

- Adaptability: Applications evolve alongside changing events and user needs.

Pitfalls and Challenges: Event-driven architecture isn't without challenges:

- Complexity: Designing interactions and managing event flows requires careful planning.

- Debugging: Troubleshooting asynchronous interactions can be intricate.

- Consistency: Ensuring event order and maintaining data consistency can be complex.
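The ordering challenge can be illustrated with a small sketch. One common mitigation, assumed here, is for the producer to stamp each event with a sequence number so that the consumer can hold back events that arrive early. The `ReorderBuffer` class is hypothetical and keeps everything in memory.

```python
from typing import Dict, List, Tuple


class ReorderBuffer:
    """Releases events in sequence order, holding back any that arrive early."""

    def __init__(self, first_seq: int = 1) -> None:
        self._next_seq = first_seq
        self._pending: Dict[int, str] = {}

    def accept(self, seq: int, payload: str) -> List[Tuple[int, str]]:
        """Store one event and return every event that is now deliverable."""
        if seq < self._next_seq or seq in self._pending:
            return []  # duplicate or already delivered; ignore it
        self._pending[seq] = payload
        ready: List[Tuple[int, str]] = []
        while self._next_seq in self._pending:
            ready.append((self._next_seq, self._pending.pop(self._next_seq)))
            self._next_seq += 1
        return ready


buffer = ReorderBuffer()
delivered: List[Tuple[int, str]] = []
# Events 1..4 arrive out of order, with event 2 delivered twice.
for seq, payload in [(2, "paid"), (1, "created"), (2, "paid"), (4, "delivered"), (3, "shipped")]:
    delivered.extend(buffer.accept(seq, payload))
print(delivered)
```

The sketch trades latency for order: event 2 waits until event 1 shows up. A production version would also need a policy for gaps that never fill, such as a timeout.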

Best Practices: Navigating the complexities of event-driven architecture demands adherence to best practices:

- Design Events Thoughtfully: Model events based on business logic, making them meaningful and actionable.

- Use Idempotent Operations: Ensure that event processing is idempotent, so that handling the same event more than once does not cause unintended side effects.

- Monitor Event Flows: Leverage monitoring tools to gain insights into event interactions and performance.

- Document Event Contracts: Clearly define event structures and contracts to ensure seamless communication.
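As a rough illustration of the idempotency practice above, the sketch below deduplicates on an event ID. The `InventoryHandler` class and the `event_id`, `sku`, and `quantity` fields are assumptions made for this example. In a real system the set of seen IDs would need to be stored durably, ideally in the same transaction as the state change.

```python
from typing import Dict, Set


class InventoryHandler:
    """Applies each 'stock reserved' event at most once, keyed by event ID."""

    def __init__(self, stock: Dict[str, int]) -> None:
        self.stock = stock
        self._seen: Set[str] = set()

    def handle(self, event: Dict) -> bool:
        """Return True if the event changed state, False if it was a repeat."""
        if event["event_id"] in self._seen:
            return False
        self.stock[event["sku"]] -= event["quantity"]
        self._seen.add(event["event_id"])
        return True


handler = InventoryHandler({"widget": 10})
event = {"event_id": "evt-1", "sku": "widget", "quantity": 3}

first = handler.handle(event)
second = handler.handle(event)  # redelivery of the same event
print(first, second, handler.stock)
```

This matters because many brokers offer at-least-once delivery, so a consumer should expect to see the same event again after a retry or a restart.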

Industries and Relevance: Event-driven applications are a natural fit for industries where real-time interactions are vital:

- Financial Services: For low-latency trading, fraud detection, and real-time risk assessment.

- IoT: In industrial automation, smart cities, and environmental monitoring.

- Gaming and Entertainment: For immersive gameplay and dynamic content delivery.

- E-Commerce: In personalized recommendations, inventory management, and responsive customer experiences.

Event-driven applications are the maestros of the digital world, orchestrating interactions in harmony with the rhythm of real-world events. As industries embrace real-time responsiveness and dynamic user experiences, this architectural paradigm becomes a powerful tool for crafting applications that thrive on the diversity and unpredictability of events. Whether in gaming, finance, or beyond, event-driven applications weave a story of innovation, adaptability, and seamless interactions that resonate with the heartbeats of the modern era.

An event-driven application is a software architecture that orchestrates the flow of operations based on events. Events, in this context, can be thought of as occurrences or happenings that trigger specific actions or responses within the application. Unlike traditional linear applications, where each step follows the previous one in a predetermined sequence, event-driven applications embrace a more dynamic and flexible approach, akin to a symphony of interconnected components.

Imagine a bustling city street during rush hour as an analogy for an event-driven application. Here, various events occur simultaneously or in quick succession – pedestrians crossing at crosswalks, cars navigating through intersections, streetlights changing colors, and the occasional street performer adding a dash of vibrancy. Each of these events triggers a specific reaction: the pedestrian light turns on, cars halt or proceed, and spectators gather around the performer. These reactions occur independently and concurrently, creating a harmonious yet complex flow of activities.

In the digital realm, an event-driven application might involve components like user interactions, data updates, system alerts, or external triggers. These events serve as the catalysts for actions, initiating a cascade of functions, processes, and exchanges of information. Just as the city street adapts to changing conditions, an event-driven application dynamically adjusts its behavior based on incoming events, resulting in a more responsive and adaptable user experience.

To further illustrate the concept, consider an e-commerce platform during a major sales event. Users browse products, add items to their carts, and proceed to checkout. Simultaneously, the system tracks inventory changes, processes payments, and generates order confirmations. The application's architecture orchestrates these actions in response to user interactions and backend events, much like the orchestrated chaos of a grand city parade.
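The e-commerce scenario can be sketched with Python's `asyncio`. This is only an illustration: the handler names are invented, and the `asyncio.sleep` calls stand in for real database and payment-gateway calls. It shows independent reactions to one event running concurrently, followed by a step that depends on both.

```python
import asyncio
from typing import List


async def reserve_inventory(order_id: int, log: List[str]) -> None:
    await asyncio.sleep(0.02)  # stands in for a database call
    log.append(f"inventory reserved for {order_id}")


async def charge_payment(order_id: int, log: List[str]) -> None:
    await asyncio.sleep(0.01)  # stands in for a payment gateway call
    log.append(f"payment charged for {order_id}")


async def send_confirmation(order_id: int, log: List[str]) -> None:
    log.append(f"confirmation sent for {order_id}")


async def on_order_placed(order_id: int) -> List[str]:
    log: List[str] = []
    # Inventory and payment are independent, so they run concurrently.
    await asyncio.gather(
        reserve_inventory(order_id, log),
        charge_payment(order_id, log),
    )
    # The confirmation depends on both, so it runs afterwards.
    await send_confirmation(order_id, log)
    return log


result = asyncio.run(on_order_placed(1001))
print(result)
```

The relative order of the first two log entries depends on timing, which is exactly the kind of non-determinism that makes event-driven systems harder to reason about. Only the confirmation is guaranteed to come last.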

An event-driven application embodies the multifaceted nature of our world, where interactions and reactions intertwine to create a dynamic and intricate tapestry of experiences. Just as our city streets come alive with an array of activities, an event-driven application thrives on the diversity of events, fostering a rich ecosystem of interactions that is both complex and captivating.

In the ever-shifting landscape of technology, event-driven applications stand as a testament to our ability to harness the dynamic nature of the digital world. Just as a conductor guides an orchestra through the nuances of a symphony, event-driven architecture guides software through a dance of interactions and reactions. This paradigm is a powerful tool for crafting applications that not only respond to events but thrive on them. As we embrace the rhythm of real-time responsiveness and adaptability, event-driven applications serve as the gateway to a new era of innovation and seamless digital experiences. Through every trigger and response, they remind us that the true magic of technology lies in its ability to harmonize with the unpredictable cadence of the world around us.

The versatility of event-driven architecture finds its niche across a multitude of domains, each benefiting from its dynamic and flexible nature. Let's delve into some of these domains to appreciate the diverse applications of event-driven architecture:

- Web Development and Real-Time Applications: Event-driven architecture shines in web applications that require real-time updates, such as social media feeds, online gaming, and collaborative tools. As events like new messages, updates, or user interactions occur, the architecture can swiftly propagate changes, ensuring users stay in sync with the latest information.

- Internet of Things (IoT): The IoT landscape thrives on events generated by sensors, devices, and physical-world interactions. Event-driven architecture empowers IoT applications to seamlessly manage a vast number of data points, respond to sensor readings, and trigger actions in real time, making it ideal for smart home systems, industrial automation, and environmental monitoring.

- Financial Services: Event-driven architecture finds a natural fit in financial services, where market fluctuations, transactions, and regulatory changes generate a continuous stream of events. By responding swiftly to market shifts and transaction requests, event-driven systems facilitate low-latency trading, fraud detection, and real-time risk assessment.

- E-Commerce and Retail: In the world of e-commerce, events like user interactions, cart additions, and payment processing drive the user journey. An event-driven approach allows platforms to deliver personalized recommendations, manage inventory, and optimize the shopping experience, resulting in higher customer engagement.

- Telecommunications: Telecommunication networks handle a multitude of events, including calls, messages, and network state changes. Event-driven architecture enables efficient call routing, network management, and fault detection, ensuring smooth communication experiences for users.

- Supply Chain and Logistics: Managing the movement of goods and resources involves a constant flow of events – from order placements to shipping updates. Event-driven systems enhance supply chain visibility, enabling stakeholders to track and respond to events like delays, route changes, and inventory fluctuations.

- Healthcare and Medical Systems: In healthcare, patient monitoring, medical equipment, and treatment plans generate vital events. Event-driven architecture can enhance patient care by swiftly notifying medical professionals of critical conditions, enabling remote monitoring, and coordinating care workflows.

- Gaming and Entertainment: Video games and entertainment platforms thrive on engaging user experiences driven by events such as player actions, scripted sequences, and dynamic AI behaviors. Event-driven systems contribute to immersive gameplay, interactive storytelling, and adaptive content delivery.

- Data Analytics and Business Intelligence: Analyzing large datasets requires handling diverse events – from data ingestion to analysis results. Event-driven architecture supports real-time data processing, enabling organizations to derive insights from streaming data sources and react promptly to emerging trends.

- Event-Driven Microservices: Within the realm of software architecture, event-driven microservices facilitate the construction of modular, loosely coupled systems. Microservices communicate through events, allowing teams to develop and deploy services independently while maintaining a cohesive application ecosystem.

The beauty of event-driven architecture lies in its applicability to scenarios where events occur in a non-linear, often unpredictable fashion. Its ability to handle a variety of real-time interactions, coupled with its adaptability to changing conditions, makes it a powerful choice across industries where responsiveness and scalability are paramount. Just as the flow of events shapes our world, event-driven architecture shapes digital ecosystems, infusing them with vitality, complexity, and the capacity to evolve.

Examples of applications that leverage event-driven architecture, showcasing its versatility in various domains:

- Social Media Platform: In a social media platform like Twitter, users' posts, likes, retweets, and comments generate a continuous stream of events. Event-driven architecture allows the platform to instantly update users' feeds, notify them of interactions, and dynamically adjust content recommendations based on their activity.

- Ride-Sharing App: In a ride-sharing app like Uber, events include user ride requests, driver availability, and location updates. Event-driven architecture facilitates real-time matching of riders and drivers, updates on estimated arrival times, and fare calculations based on dynamic variables such as traffic conditions.

- Smart Home System: A smart home system involves events such as motion detection, temperature changes, and user commands. Event-driven architecture enables the system to activate lights, adjust thermostats, and send alerts to homeowners about security breaches, all in response to real-time events.

- Stock Trading Platform: In a stock trading platform, market data updates, buy/sell orders, and trade executions generate a continuous stream of events. Event-driven architecture ensures that traders receive real-time market information, execute orders promptly, and receive trade confirmations without delay.

- E-Commerce Marketplace: An e-commerce platform experiences events like product searches, cart additions, and payment processing. Event-driven architecture enhances user experiences by providing personalized product recommendations, updating inventory availability, and processing payments securely.

- IoT Environmental Monitoring: In an IoT-based environmental monitoring system, events include sensor readings for temperature, humidity, and air quality. Event-driven architecture allows for immediate alerts and automated actions, such as adjusting HVAC systems or notifying building managers of anomalies.

- Online Multiplayer Game: In an online multiplayer game, player movements, interactions, and game state changes trigger a cascade of events. Event-driven architecture ensures that players experience real-time gameplay interactions, collaborative challenges, and synchronized game world updates.

- Healthcare Patient Monitoring: In a healthcare setting, patient vitals, medication administration, and alarms generate events. Event-driven architecture enables healthcare providers to receive instant notifications of critical conditions, adjust treatment plans, and collaborate on patient care.

- Logistics and Fleet Management: In logistics, events range from package tracking updates to route deviations. Event-driven architecture allows for real-time tracking of shipments, optimization of delivery routes, and proactive responses to unexpected delays.

- Real-Time Analytics Dashboard: A real-time analytics dashboard processes events from multiple data sources, providing live insights on website traffic, user engagement, and sales. Event-driven architecture allows organizations to monitor changing trends and respond promptly to emerging opportunities or issues.

These examples illustrate how event-driven architecture lends itself to a wide array of applications, each capitalizing on its capacity to handle diverse events, respond in real-time, and orchestrate dynamic interactions. Just as the world teems with a rich tapestry of events, event-driven applications thrive on complexity, variability, and the art of managing the unexpected.

Methodology followed for the development of these apps

The development of event-driven applications typically follows a structured methodology that encompasses various stages, from planning and design to implementation and deployment. Let's explore the common methodology used for developing these applications:

1. Requirements Gathering and Analysis: At the outset, developers collaborate with stakeholders to identify the specific events that will trigger actions in the application. This phase is akin to assembling the pieces of a puzzle that will form the foundation of the application's functionality. Much like a conductor orchestrating a symphony, developers gather a medley of events, each contributing to the dynamic narrative of the application's behavior.

2. Design and Architecture: In this phase, developers design the application's architecture, outlining how various components will interact in response to events. Imagine this phase as a masterful tapestry weaver, intricately combining threads of components and connections to create a harmonious flow of interactions. Developers craft different components to handle events of varying complexity, ensuring the application can gracefully adapt to a diverse array of triggers.

3. Event Modeling: Developers model the events, defining their attributes, relationships, and potential outcomes. This process is akin to crafting the characters, plot twists, and dialogues of a captivating novel. The events become the protagonists, each with its characteristics, propelling the application's narrative forward with a mixture of anticipation and surprise.

4. Component Development: Developers build the components responsible for handling events and triggering corresponding actions. Think of this phase as composing musical instruments that will produce distinct sounds when played in response to different events. The components vary widely in capability, ranging from straightforward reactions to intricate orchestrations of data processing and system interactions.

5. Event Processing Logic: Developers define the logic for processing events and orchestrating actions. This phase mirrors a playwright scripting a play's scenes and dialogues. Developers craft event handlers that respond not only to immediate events but also anticipate subsequent actions, like a well-crafted narrative building tension and resolution.

6. Testing and Quality Assurance: Developers thoroughly test the application's ability to handle various events and scenarios. This phase is comparable to staging a performance, where actors rehearse their lines and interactions to ensure a flawless show. Testers simulate a cascade of events, revealing the application's capacity to manage both expected and unexpected situations.

7. Deployment and Monitoring: The application is deployed to the production environment, and developers monitor its performance in response to real-world events. This phase resembles a live performance, where the application responds to the ebb and flow of events, much like a skilled improvisational performer adapting to the audience's reactions.

8. Continuous Improvement: After deployment, developers collect feedback and data to refine the application's behavior and performance. This phase is akin to an author revising a manuscript based on reader feedback, continuously refining the story's plot, characters, and pacing.
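The event modeling step in the list above lends itself to a short sketch. Assuming a hypothetical `OrderPlaced` event, the dataclass below fixes the event's attributes, validates them on construction, and carries a schema version so that the contract can evolve. The field names are illustrative and not taken from any particular system.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical event contract; field names are illustrative."""
    order_id: int
    customer_id: str
    total_cents: int
    occurred_at: str          # ISO 8601 timestamp, UTC
    schema_version: int = 1

    def __post_init__(self) -> None:
        if self.total_cents < 0:
            raise ValueError("total_cents must be non-negative")
        datetime.fromisoformat(self.occurred_at)  # raises ValueError if malformed

    def to_dict(self) -> Dict[str, Any]:
        return {"type": "order.placed", **asdict(self)}

    @classmethod
    def from_dict(cls, data: Dict[str, Any]) -> "OrderPlaced":
        if data.get("type") != "order.placed":
            raise ValueError("unexpected event type")
        fields = {k: v for k, v in data.items() if k != "type"}
        return cls(**fields)


event = OrderPlaced(
    order_id=7,
    customer_id="c-123",
    total_cents=2599,
    occurred_at=datetime(2024, 1, 1, tzinfo=timezone.utc).isoformat(),
)
round_tripped = OrderPlaced.from_dict(event.to_dict())
print(round_tripped == event)
```

Making the event immutable (`frozen=True`) reflects the idea that an event records something that has already happened and should not change afterwards.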

The methodology for developing event-driven applications encapsulates the essence of complexity and variability. Much like a symphony conductor weaving together diverse musical instruments or a novelist crafting a multi-layered plot, developers bring together a symphony of events and reactions, creating applications that thrive on the unpredictability of real-world interactions.

Each step of the event-driven application development methodology is broken down in more detail below, showcasing the intricate dance of creativity and structure:

1. Requirements Gathering and Analysis: At the inception of this creative journey, developers embark on a quest to understand the tapestry of events that will shape the application's destiny. Much like explorers charting a new territory, they engage with stakeholders to unravel the tales of triggers and actions. This kaleidoscope of discussions forms the mosaic of requirements, where each event holds the promise of an unfolding narrative.

2. Design and Architecture: With the ingredients of events in hand, developers step into the realm of architecture, where they sketch blueprints for a symphony of components. The canvas is alive with connections and pathways, reminiscent of a complex labyrinth where each turn holds a new adventure. These components, like characters in a grand saga, range from the simple to the intricate, each poised to play its part in response to the harmony of events.

3. Event Modeling: In the chamber of event modeling, developers breathe life into the events themselves. Each event takes shape, adorned with attributes that add depth and nuance to its essence. These events are akin to characters in a literary masterpiece – some are protagonists, driving the plot forward, while others serve as catalysts, igniting transformative moments.

4. Component Development: The workshop of development becomes a playground of creativity as developers craft the very tools that will execute the symphony of responses. These components are like instruments in an orchestra, each with its unique sound, capable of soaring solos or harmonious melodies. Developers sculpt a mix of simplicity and complexity, ensuring that the application can resonate with both subtle whispers and thunderous crescendos of events.

5. Event Processing Logic: Here, in the realm of event processing logic, developers become storytellers, weaving narratives of action and reaction. They craft the dance steps of components in response to each event, anticipating the rhythm of the narrative's flow. Much like skilled playwrights, they script the dialogues of data, orchestrating a ballet of interactions that unfold with an ebb and flow of surprises.

6. Testing and Quality Assurance: In the theater of testing, the spotlight turns to ensuring the actors and scenes are impeccably prepared. Testers summon events like plot twists, provoking responses from the application's components. Testers unleash a cascade of events, witnessing how the application gracefully navigates through the symphony of reactions, all while being ready to improvise when the unexpected arises.

7. Deployment and Monitoring: As the curtains rise on deployment, the application takes its place on the digital stage. Developers step into the role of observant conductors, attentive to the rhythm of events and the harmonious interplay of reactions. Like skilled conductors who adjust their tempo based on audience reactions, developers monitor how the application responds to the unpredictable cadence of real-world events.

8. Continuous Improvement: In the grand finale of this creative odyssey, developers don the hats of editors, refining the narrative based on the feedback of the audience. The story of the application evolves, much like a novel being polished over time. The bursts of improvement, inspired by the ever-changing landscape of events, paint a vivid picture of growth and adaptability.

In this methodology, developers wield creativity and structure in harmony, much like a composer crafting a symphony that resonates with both complexity and variation. The stages blend the artistry of storytelling with the discipline of engineering, resulting in event-driven applications that embody the enigmatic dance of events and reactions.

Advantages

The advantages of event-driven architecture are as diverse and intricate as the architecture itself. This approach offers a rich tapestry of benefits, much like a multi-layered masterpiece. Let's explore these advantages:

1. Responsiveness and Real-Time Interaction: Event-driven architecture excels in the realm of real-time interactions. Much like a seasoned dancer responding to the rhythm of the music, applications built with this architecture swiftly react to events, enabling instant updates, notifications, and data processing. This agility translates to applications that feel alive and in sync with the dynamic world.

2. Scalability and Flexibility: In the world of scalability, event-driven architecture is a maestro, orchestrating growth with finesse. The components can be scaled individually, adapting to varying workloads like an ensemble of musicians adjusting their tempo. This flexibility ensures that the application can gracefully expand to accommodate increasing demands, much like an orchestra adapting to a grand symphony.

3. Loose Coupling and Modularity: Like the interlocking pieces of a puzzle, event-driven components are loosely coupled, allowing them to function independently. This modularity resembles a collection of short stories, where each component contributes to the larger narrative without being tightly bound. This architectural characteristic enhances maintainability, making updates and changes to one component less likely to disrupt the entire application.

4. Adaptability to Change: In a world of constant flux, event-driven architecture shines as a beacon of adaptability. It thrives on the unpredictability of events, much like a chameleon seamlessly blending into its surroundings. The architecture's ability to gracefully handle new events and scenarios ensures that the application can evolve alongside shifting user needs and market trends.

5. Extensibility and Integration: Event-driven applications are skilled collaborators, seamlessly integrating with external systems. Like a well-versed diplomat engaging in international relations, they can exchange events and data with external partners, creating a harmonious ecosystem. This extensibility fosters innovation by allowing the application to leverage external services and resources.

6. Enhanced User Experience: Imagine a magician orchestrating a series of captivating illusions – event-driven applications create similarly enchanting user experiences. They respond to user actions with immediate feedback and relevant updates, ensuring that users remain engaged and delighted by the application's responsiveness.

7. Fault Isolation and Resilience: Event-driven architecture, much like a colony of ants resiliently navigating obstacles, excels in fault isolation. If one component encounters an issue, the impact is localized, and the rest of the application can continue functioning. This isolation promotes reliability and resilience, enabling the application to maintain overall functionality even in the face of challenges.

8. Better Resource Utilization: In an event-driven ecosystem, resources are utilized efficiently. Like a master chef skillfully using each ingredient in a recipe, the architecture activates components only when relevant events occur. This minimizes unnecessary processing and optimizes resource utilization, resulting in improved performance and reduced operational costs.
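The fault isolation advantage from the list above can be sketched as follows. The `dispatch` function is a hypothetical dispatcher that runs every handler for an event and records failures in a dead-letter list instead of letting one failing handler stop the others. The handlers and their failure are invented for the example.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[Dict], None]


def dispatch(event: Dict, handlers: List[Handler]) -> List[Tuple[str, str]]:
    """Run every handler; collect failures instead of letting one stop the rest."""
    dead_letters: List[Tuple[str, str]] = []
    for handler in handlers:
        try:
            handler(event)
        except Exception as exc:  # broad on purpose: isolate any handler failure
            dead_letters.append((handler.__name__, str(exc)))
    return dead_letters


audit_log: List[int] = []
dashboard_updates: List[int] = []


def record_audit(event: Dict) -> None:
    audit_log.append(event["order_id"])


def send_email(event: Dict) -> None:
    raise RuntimeError("mail server unavailable")  # simulated outage


def update_dashboard(event: Dict) -> None:
    dashboard_updates.append(event["order_id"])


failures = dispatch({"order_id": 9}, [record_audit, send_email, update_dashboard])
print(failures, audit_log, dashboard_updates)
```

The dashboard handler still runs even though the email handler before it failed, and the failure is kept for later inspection or retry rather than being lost.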

In the grand tapestry of technology, event-driven architecture weaves a fabric of advantages that resonate with adaptability, responsiveness, and elegance. This architectural approach captures the essence of a dynamic world, where events are the catalysts for innovation and interaction, much like the varied strokes of an artist's brush that together create a mesmerizing masterpiece.

Disadvantages

The landscape of event-driven architecture, much like any intricate terrain, also presents its fair share of challenges. These disadvantages contribute to the complexity of the architectural design, forming a nuanced backdrop. Let's explore these disadvantages, akin to navigating through the twists and turns of a multifaceted landscape:

1. Complex Design and Development: The realm of event-driven architecture can be a labyrinth of intricacy. Developers must meticulously design interactions between components and anticipate the flow of events, reminiscent of a master chess player plotting every move. This complexity can lead to longer development cycles and potential hurdles in system comprehension.

2. Event Order and Consistency: In the realm of real-time interactions, ensuring the correct order of events can be akin to managing a bustling marketplace. Maintaining event consistency and preventing race conditions requires careful orchestration, much like a conductor harmonizing different instruments to create a seamless melody.

3. Debugging and Troubleshooting: When bugs arise in an event-driven application, the process of tracing events and diagnosing issues can be reminiscent of solving a cryptic puzzle. The asynchronous nature of events can complicate debugging, requiring developers to decipher the sequence of actions and reactions to pinpoint the source of problems.

4. Overhead and Performance Challenges: The event-driven paradigm, while responsive, can sometimes introduce overhead. Similar to the energy expended by an athlete performing intricate maneuvers, the processing required to manage event dispatching, handling, and communication can impact the application's performance, particularly in scenarios with high event volumes.

5. Learning Curve and Skill Set: For developers transitioning to event-driven architecture, the learning curve can be akin to mastering a new instrument. The asynchronous and decoupled nature of the architecture demands a specific skill set and a shift in mindset. Adapting to this paradigm may require additional training and investment in skills development.

6. Event Complexity and Granularity: Handling a diverse array of events, each with its unique attributes, can be compared to managing a collection of rare gems. Developers must strike a balance between event granularity and complexity, avoiding excessive event types that can lead to convoluted interactions and potentially diminish system performance.

7. Scalability Challenges: While event-driven architecture excels in scalability, the orchestration of complex event flows across distributed systems can become a puzzle of its own. Scaling individual components requires careful planning to avoid bottlenecks, much like ensuring a symphony's harmony remains intact even when played by a larger orchestra.

8. Difficult Testing and Validation: Testing an event-driven application can be reminiscent of examining a constantly shifting kaleidoscope. Validating interactions across various events and components requires thorough testing strategies that account for the dynamic nature of the architecture, much like a detective piecing together clues in a mysterious case.
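One widely used aid for the debugging difficulty described in the list above is to tag every event in a flow with a shared correlation ID. The sketch below assumes a hypothetical `Event` class with `correlation_id` and `causation_id` fields, names chosen for this example only, and shows how one flow can be filtered out of a mixed event record.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Event:
    name: str
    correlation_id: str                      # shared by every event in one flow
    causation_id: Optional[str] = None       # ID of the event that caused this one
    event_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def follow_up(parent: Event, name: str) -> Event:
    """Create an event caused by parent, inheriting its correlation ID."""
    return Event(name, parent.correlation_id, causation_id=parent.event_id)


def trace(events: List[Event], correlation_id: str) -> List[str]:
    """Return the names of all events in one flow, in recorded order."""
    return [e.name for e in events if e.correlation_id == correlation_id]


placed = Event("order.placed", correlation_id="flow-1")
paid = follow_up(placed, "payment.captured")
shipped = follow_up(paid, "order.shipped")
unrelated = Event("user.signed_up", correlation_id="flow-2")

recorded = [placed, unrelated, paid, shipped]
print(trace(recorded, "flow-1"))
```

The correlation ID groups the whole flow, while the causation ID links each event to its direct cause, so a developer can walk the chain backwards from a failure.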

In the grand tapestry of event-driven architecture, these disadvantages add layers of complexity that demand careful consideration and skillful navigation. Much like exploring uncharted terrain, embracing the challenges can lead to innovation and mastery, as developers find ways to harmonize events and reactions while balancing the inherent intricacies.

Tools used in the development

The development of event-driven applications involves a toolkit of specialized tools and frameworks that help developers navigate the intricacies of this architectural paradigm. This toolkit, much like an artisan's collection of finely tuned instruments, assists in orchestrating the symphony of events and reactions. Here's a selection of tools commonly used in event-driven application development:

1. Message Brokers: Message brokers act as intermediaries for events, facilitating communication between components. Popular choices include Apache Kafka, RabbitMQ, and Amazon SQS. These tools enable efficient event distribution, queuing, and decoupling of sender and receiver components.

2. Event Processing Frameworks: Frameworks like Apache Flink, Apache Storm, and Spark Streaming enable real-time event processing and data streaming. They empower developers to apply complex event processing logic, akin to the intricate choreography of a dance, to handle event flows efficiently.

3. Pub/Sub Platforms: Publish/subscribe platforms, such as Google Cloud Pub/Sub and Azure Service Bus, provide mechanisms for broadcasting and subscribing to events. These tools facilitate the distribution of events to multiple subscribers, much like radio broadcasting signals to various receivers.

4. Serverless Computing: Serverless platforms like AWS Lambda, Azure Functions, and Google Cloud Functions enable developers to execute code in response to events without provisioning or managing servers. This is akin to a magician conjuring up tricks on demand, as functions activate in response to specific events.

5. Event-Driven Microservices Frameworks: Frameworks like Spring Cloud Stream and Micronaut provide tools for building event-driven microservices. These frameworks help in creating loosely coupled, independently deployable components that communicate through events, much like assembling a team of actors to perform distinct roles in a play.

6. Complex Event Processing (CEP) Tools: Complex Event Processing tools such as Esper and Drools enable the detection of patterns and correlations in event streams. Similar to a detective piecing together clues to solve a case, these tools analyze events to identify meaningful trends or anomalies.

7. API Gateway Platforms: API gateway platforms, including Amazon API Gateway and Kong, provide a gateway for incoming events, much like a grand entrance to a theater. They manage event routing, authorization, and security, ensuring that only authorized components interact with the application.

8. Event-Driven Data Storage: Databases like Apache Cassandra and Amazon DynamoDB are designed to handle large volumes of data generated by events. They store and retrieve data with efficiency, resembling a vast library that houses the histories of events.

9. Monitoring and Observability Tools: Monitoring tools like Prometheus, Grafana, and New Relic provide insights into the performance and behavior of event-driven applications. They capture metrics, visualize event flows, and offer observability into the intricacies of interactions.

10. Integration Platforms: Integration platforms like Apache Camel and MuleSoft Anypoint Platform facilitate seamless communication between disparate systems. These tools orchestrate data flows and event interactions, akin to a conductor leading various sections of an orchestra to produce a harmonious symphony.

These tools collectively form a symphony of resources that aid developers in crafting robust and responsive event-driven applications. Much like a skilled musician selecting the right instruments for a composition, developers choose these tools to harmonize events and components, producing applications that dance to the rhythm of real-world interactions.
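To make the broker and publish/subscribe ideas above more concrete, here is a minimal in-process sketch in Python. It is not how Kafka, RabbitMQ, or any other product listed above is implemented or used. The `InMemoryBroker` class, the `order.placed` topic, and the `order_id` field are hypothetical names chosen only for illustration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy stand-in for a message broker: routes events by topic name."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to be called for every event on the topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
shipped: list = []
emailed: list = []

# Two independent subscribers react to the same event without knowing each other.
broker.subscribe("order.placed", lambda e: shipped.append(e["order_id"]))
broker.subscribe("order.placed", lambda e: emailed.append(e["order_id"]))

delivered = broker.publish("order.placed", {"order_id": 42})
print(delivered, shipped, emailed)
```

The publisher only knows the topic name, never the subscribers, which is the decoupling that real brokers provide across processes and machines, along with durability and delivery guarantees that this sketch leaves out.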

Skill set required for design and development

Designing and developing event-driven applications requires a blend of skills that span technical proficiency, architectural understanding, and creative problem-solving. The skill set needed resembles the diverse talents of a multidisciplinary artist. Here's a breakdown of the skill set required for designing and developing event-driven applications:

1. Programming Languages: Mastery of programming languages is essential. Java, Python, JavaScript (on Node.js), and Go are commonly used for event-driven development. Developers must be comfortable with asynchronous programming, callbacks, and event handling.

2. Asynchronous Programming: Understanding asynchronous programming concepts is crucial. Developers need to grasp the intricacies of non-blocking operations, managing callbacks, and utilizing promises or asynchronous libraries.

3. Event-Driven Architecture Knowledge: A deep understanding of event-driven architecture principles and patterns is essential. Developers must grasp the concepts of publishers, subscribers, event channels, and the interactions between components.

4. Distributed Systems: Event-driven applications often involve distributed systems. Familiarity with distributed computing concepts, such as message distribution, data consistency, and fault tolerance, is vital.

5. Message Brokers and Middleware: Developers should be well-versed in using message brokers and middleware tools like Apache Kafka, RabbitMQ, or AWS SQS to handle event communication and orchestration.

6. Data Streaming and Processing: A solid grasp of data streaming and processing technologies, such as Apache Flink or Spark Streaming, is valuable for efficiently handling and analyzing event streams.

7. Microservices Architecture: Understanding microservices architecture and its alignment with event-driven patterns is crucial. Developers should know how to build loosely coupled, independently deployable components.

8. API Design and Integration: Skill in designing APIs that facilitate event communication and integration between components is important. Developers should know how to create RESTful APIs and handle authentication and authorization.

9. Troubleshooting and Debugging: The ability to troubleshoot and debug event-driven applications is vital. Developers should be skilled in tracing event flows, diagnosing issues, and resolving bottlenecks.

10. Scalability and Performance Optimization: Understanding techniques for scaling event-driven systems, optimizing performance, and mitigating bottlenecks is essential to ensure the application can handle high event loads.

11. Cloud Platforms: Familiarity with cloud platforms like AWS, Azure, or Google Cloud is valuable, as event-driven applications often leverage cloud services for scalability and resource management.

12. Monitoring and Observability: Skill in using monitoring and observability tools, such as Prometheus, Grafana, and New Relic, helps developers gain insights into the behavior of event-driven applications.

13. Creativity and Problem-Solving: Event-driven application development requires creative problem-solving skills. Developers must devise elegant solutions to manage complex event flows and ensure reliable interactions.

14. Collaboration and Communication: Collaboration is key in event-driven development, where components interact closely. Strong communication skills are vital for conveying event specifications, designing interactions, and coordinating with other team members.

The skill set for designing and developing event-driven applications combines the technical prowess of a software engineer with the artistic sensibilities of a storyteller. It's a blend of understanding the architectural nuances, mastering coding techniques, and orchestrating interactions to create applications that respond to events with grace and precision.
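As a small illustration of the asynchronous programming skill listed above, the following Python sketch uses the standard `asyncio` library to run two event handlers concurrently. The handler names and delays are made up for the example, and `asyncio.sleep` merely stands in for a real network or database call.

```python
import asyncio


async def handle_event(name: str, delay: float, log: list) -> None:
    """Simulate a handler that waits on I/O without blocking other handlers."""
    await asyncio.sleep(delay)  # stands in for a network or database call
    log.append(name)


async def main() -> list:
    log: list = []
    # Start both handlers concurrently; the slow one does not hold up the fast one.
    await asyncio.gather(
        handle_event("slow", 0.2, log),
        handle_event("fast", 0.05, log),
    )
    return log


completion_order = asyncio.run(main())
print(completion_order)
```

Although the slow handler is started first, the fast one finishes first, because waiting on one task leaves the event loop free to run the other.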

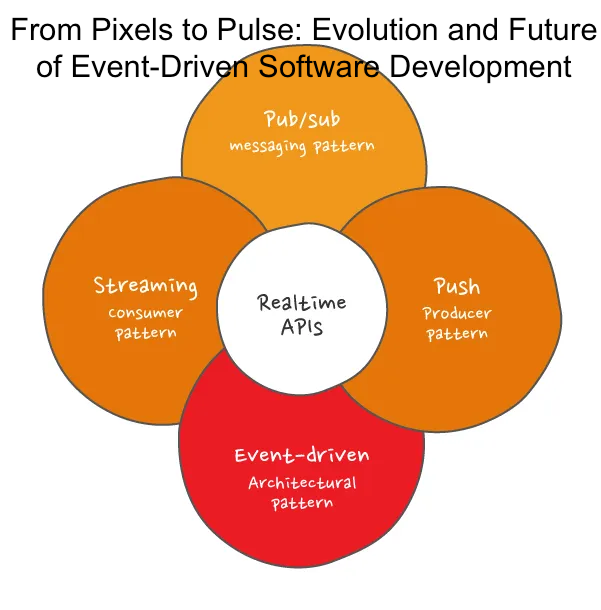

From Pixels to Pulse: Evolution and Future of Event-Driven Software Development

In the ever-changing landscape of software development, event-driven architecture has emerged as a powerful paradigm. Guiding applications to respond dynamically to triggers, it has evolved through history, shaping industries and user experiences. In this article, we embark on a journey through the past, present, and exciting future of event-driven software development.

Event-driven software development is an approach where the flow of a program's execution is primarily determined by events or occurrences that take place during its runtime. In this paradigm, the software responds to external or internal events by triggering corresponding actions or processes. These events can be user interactions, system events, sensor readings, data changes, or any other trigger that prompts the software to perform specific tasks.

Event-driven software is designed to be highly responsive and adaptable, allowing the program to react in real time to changing conditions. It contrasts with traditional linear programming where the sequence of actions is predefined and follows a predetermined path. Instead, in event-driven development, the software's behavior is more like a web of interactions, where events act as catalysts for actions and processes, creating a dynamic and fluid user experience.

Common examples of event-driven software include graphical user interfaces (GUIs), web applications with real-time updates, IoT applications that respond to sensor readings, and games that react to player actions. This approach is particularly useful in scenarios where the software needs to handle a multitude of asynchronous events and provide timely responses to user inputs or changing external conditions.

Principles of Event-Driven Software Development:

- Asynchronous Processing: Event-driven development thrives on asynchronous processing, where tasks don't block the execution of the program. This principle ensures that the software remains responsive to new events while handling ongoing tasks.

- Decoupling: Components in event-driven systems are loosely coupled. They interact through events without having direct dependencies on each other, enhancing modularity and maintainability.

- Publish-Subscribe Model: This model involves publishers emitting events and subscribers listening for and responding to those events. It promotes flexibility by allowing multiple subscribers to react to a single event.

- Event-Driven Architecture Patterns: Patterns like Event Sourcing, CQRS (Command Query Responsibility Segregation), and Saga patterns offer solutions for handling complex interactions, data consistency, and system scalability.
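Of the patterns named above, Event Sourcing is perhaps the easiest to sketch in a few lines: state is never stored directly but is rebuilt by replaying an append-only log of events. The example below is a simplified illustration under that assumption. The `Event` type, the account domain, and the event kinds are hypothetical, and a production system would add persistence, versioning, and snapshots.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrawn"
    amount: int


def apply(balance: int, event: Event) -> int:
    """Return the new balance after applying a single event."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: List[Event]) -> int:
    """Rebuild the current balance from the full event history."""
    balance = 0
    for event in events:
        balance = apply(balance, event)
    return balance


log = [Event("deposited", 100), Event("withdrawn", 30), Event("deposited", 5)]
print(replay(log))      # balance after all three events
print(replay(log[:2]))  # balance as it stood after the first two events
```

Because the log is the source of truth, replaying a prefix of it reconstructs the state at any earlier point, which is what makes the pattern attractive for auditing and debugging.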

Unveiling Event-Driven Software Development: A Journey Through History and Impact

In the world of software development, where user experiences and responsiveness reign supreme, a paradigm has emerged that seamlessly orchestrates interactions like a well-choreographed dance – event-driven software development. This transformative approach has redefined how applications respond to real-world occurrences, reshaping industries and shaping digital experiences. In this blog post, we delve into the foundations, evolution, and profound impact of event-driven software development.

Understanding Event-Driven Software Development: At its core, event-driven software development revolves around one key concept: the power of events. Instead of a linear flow, applications are designed to react to events – triggers that can be anything from user actions and system notifications to data changes and external inputs. These events initiate a cascade of responses, creating dynamic and interactive software experiences that resonate with users.

A Historical Journey: The roots of event-driven software development can be traced back to the earliest graphical user interfaces (GUIs). The introduction of windows, buttons, and mouse clicks laid the foundation for event-driven interactions. However, it was the emergence of event-driven programming languages like Smalltalk in the 1970s that truly paved the way for this paradigm. These languages allowed developers to define event handlers and responses, shaping the trajectory of modern software development.

Impact Across Industries: The impact of event-driven software development reverberates across a multitude of industries, breathing life into applications in ways previously unimaginable:

- Real-Time Gaming: In the gaming industry, event-driven architecture creates immersive gameplay experiences, where every player’s action triggers a reaction, enriching storylines and creating dynamic challenges.

- Financial Agility: For the financial sector, event-driven applications enable real-time trading, fraud detection, and instantaneous responses to market shifts, elevating precision and agility.

- IoT Innovation: The Internet of Things (IoT) thrives on event-driven development, allowing devices to communicate and respond in real time, transforming homes, industries, and cities.

- E-Commerce Evolution: In e-commerce, event-driven systems deliver personalized recommendations, real-time inventory updates, and responsive customer experiences that foster brand loyalty.

Seamless User Experiences: The hallmark of event-driven software development lies in its ability to create seamless user experiences. Imagine a shopping app that updates inventory status as items are added to carts, a smart home system that responds to voice commands, or social media platforms that deliver notifications in real time. Event-driven software empowers applications to harmonize with users' actions, creating an engaging and intuitive digital world.

Embracing the Future: As technology advances, event-driven software development continues to evolve. With the rise of serverless computing, microservices, and real-time analytics, this paradigm adapts to meet the demands of an increasingly interconnected world. Edge computing, AI-driven event analysis, and IoT proliferation further expand its horizons, promising even greater innovation and responsiveness.

Evolution of Event-Driven Software Development:

The journey of event-driven software development has been marked by significant milestones that reflect its growth and adaptation to changing technological landscapes:

- Early GUIs and User Interactions:

- In the 1970s, the introduction of graphical user interfaces (GUIs) laid the groundwork for event-driven interactions. Elements like buttons, menus, and mouse clicks introduced user-triggered events.

- Smalltalk and Object-Oriented Programming:

- Smalltalk, developed in the 1970s and 1980s, introduced object-oriented programming and event-driven interaction models. Developers could define event handlers and behaviors, influencing modern programming languages.

- Client-Server Architectures:

- The client-server era of the 1990s embraced event-driven models. Applications on client machines communicated with server components through events, enabling distributed computing and remote interactions.

- Web Applications and AJAX:

- The rise of web applications introduced AJAX (Asynchronous JavaScript and XML), allowing websites to respond to user interactions without reloading the entire page. This marked a shift towards more dynamic and event-driven web experiences.

- Real-Time and IoT Boom:

- The explosion of real-time data and the Internet of Things (IoT) in the 2000s pushed event-driven development to new heights. Applications needed to handle massive streams of events from sensors and devices in real time.

- Microservices and Serverless Computing:

- The advent of microservices architecture in the 2010s further embraced event-driven patterns. Components communicated through events, promoting modularity and scalability. Serverless computing elevated event-driven execution, allowing developers to respond directly to events without managing infrastructure.

Future of Event-Driven Software Development:

The future of event-driven software development is poised to be even more transformative, driven by advancements in technology and evolving user expectations:

- Edge Computing Integration:

- As edge computing gains prominence, event-driven applications will move closer to data sources, reducing latency and enabling quicker responses to local events.

- AI-Driven Event Analysis:

- Artificial intelligence will play a pivotal role in analyzing event streams. Machine learning models will identify patterns, anomalies, and insights within the data, enhancing decision-making and automation.

- IoT Ecosystem Expansion:

- With the IoT ecosystem continuing to grow, event-driven software will be at the heart of smart homes, cities, and industries, enabling real-time control and optimization.

- Enhanced User Engagement:

- Event-driven applications will deliver hyper-personalized experiences, responding not only to user actions but also to context and preferences, deepening user engagement.

- Cross-Platform Consistency:

- Event-driven development will enable consistent experiences across different devices and platforms, creating seamless transitions as users move between devices.

- Innovative Real-Time Services:

- Real-time services, from collaborative tools to interactive entertainment, will leverage event-driven architecture to provide new levels of interactivity and engagement.

- Blockchain and Event Transparency:

- Blockchain technology will integrate with event-driven systems, ensuring transparency and immutability in event records for industries like supply chain and finance.

A Dynamic Future Unveiled: Event-driven software development has transcended its historical roots to become a cornerstone of modern application design. With its ability to transform mundane interactions into dynamic exchanges and responsive experiences, this paradigm stands as a testament to the marriage of technology and creativity. As industries across the spectrum embrace the power of events, we stand witness to a future where applications move in synchrony with the rhythm of the world, adapting, responding, and enriching our digital lives.

A Continuously Evolving Landscape: Event-driven software development is not merely a static methodology; it's a dynamic approach that evolves alongside technology and user needs. From its origins in GUIs to its current role in IoT and real-time experiences, it continues to adapt, providing solutions to modern challenges. As event-driven applications embrace edge computing, AI, and a connected world, they set the stage for a future where software seamlessly integrates with the pulse of the digital era, crafting experiences that respond and resonate with the rhythm of life itself.

Technologies Used in Event-Driven Software Development:

- Message Brokers: Tools like Apache Kafka, RabbitMQ, and AWS SQS facilitate the efficient distribution of events between components.

- Serverless Computing: Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions allow developers to execute code in response to events without managing servers.

- Event Processing Frameworks: Frameworks such as Apache Flink, Apache Storm, and Spark Streaming enable real-time processing and analysis of event streams.

- API Gateway Platforms: Tools like Amazon API Gateway and Kong manage incoming events, handle routing, and ensure secure communication.

- Event-Driven Microservices Frameworks: Frameworks like Spring Cloud Stream and Micronaut help build event-driven microservices, facilitating interaction between loosely coupled components.

- Complex Event Processing (CEP) Tools: Tools like Esper and Drools enable the detection of patterns and correlations in event streams, helping to identify meaningful trends.

- Monitoring and Observability Tools: Tools like Prometheus, Grafana, and New Relic provide insights into the performance and behavior of event-driven applications.

- Distributed Databases: Databases like Apache Cassandra and Amazon DynamoDB handle large volumes of data generated by events, providing reliable storage and retrieval.

Event-driven software development leverages these principles and technologies to create applications that respond in real-time to a wide array of events, providing dynamic and interactive user experiences while efficiently managing complex event flows and interactions.
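The kind of pattern detection that CEP tools perform can be illustrated with a hand-rolled sliding window. The sketch below does not use Esper or Drools and does not reflect their APIs. It assumes a hypothetical stream of `(timestamp, user)` failed-login events, already ordered by time, and raises an alert when one user accumulates three failures within 60 seconds.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


def detect_bursts(events: List[Tuple[float, str]], threshold: int = 3,
                  window_seconds: float = 60.0) -> List[Tuple[float, str]]:
    """Return (timestamp, user) alerts when a user reaches `threshold`
    events within `window_seconds`. Events must be ordered by timestamp."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    alerts: List[Tuple[float, str]] = []
    for timestamp, user in events:
        window = recent[user]
        window.append(timestamp)
        # Drop events that have fallen out of the time window.
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            alerts.append((timestamp, user))
            window.clear()  # reset so one burst yields one alert
    return alerts


failed_logins = [(0.0, "alice"), (10.0, "bob"), (20.0, "alice"),
                 (45.0, "alice"), (200.0, "bob"), (210.0, "bob")]
alerts = detect_bursts(failed_logins)
print(alerts)
```

Here only alice triggers an alert: her three failures fall inside one 60-second window, while bob's are too spread out. Dedicated CEP engines express rules like this declaratively and handle out-of-order events, which this sketch does not.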

Here's a list of tools commonly used in event-driven software development:

- Message Brokers:

- Apache Kafka: A distributed event streaming platform that facilitates the publishing and subscription of events, supporting real-time data processing and analysis.

- RabbitMQ: A robust message broker that enables the exchange of events between components using various messaging patterns.

- Amazon SQS: A fully managed message queue service provided by AWS for sending, storing, and receiving events.

- Serverless Computing Platforms:

- AWS Lambda: Allows you to run code in response to events without provisioning or managing servers.

- Azure Functions: Provides serverless computing for building event-driven solutions on Microsoft Azure.

- Google Cloud Functions: Enables the creation of single-purpose, event-driven functions in Google Cloud.

- Event Processing Frameworks:

- Apache Flink: A stream processing framework for real-time data analytics and event processing.

- Apache Storm: A distributed real-time computation system for processing continuous streams of events.

- Spark Streaming: An extension of Apache Spark for processing real-time data streams.

- API Gateway Platforms:

- Amazon API Gateway: Manages and secures APIs, allowing you to create and publish RESTful APIs for event communication.

- Kong: An open-source API gateway and microservices management layer that enables API communication and event routing.

- Event-Driven Microservices Frameworks:

- Spring Cloud Stream: A framework that simplifies building event-driven microservices using Spring Boot, with binders for message brokers such as Apache Kafka and RabbitMQ.

- Micronaut: A modern, lightweight microservices framework that supports event-driven architecture.

- Complex Event Processing (CEP) Tools:

- Esper: A powerful CEP engine that processes and analyzes patterns and correlations in real-time event streams.

- Drools: A business rules management system that supports complex event processing and decision management.

- Monitoring and Observability Tools:

- Prometheus: An open-source monitoring and alerting toolkit that provides insights into event interactions and performance.

- Grafana: A visualization platform that works seamlessly with Prometheus to create interactive and customizable event dashboards.

- New Relic: A monitoring and observability platform that helps in tracking and optimizing the performance of event-driven applications.

- Distributed Databases:

- Apache Cassandra: A distributed NoSQL database that can handle large volumes of event data with high availability and scalability.

- Amazon DynamoDB: A fully managed NoSQL database provided by AWS, suitable for storing and retrieving event-driven data.

These tools form a comprehensive toolkit for event-driven software development, allowing developers to build applications that respond to events in real time, handle complex interactions, and provide dynamic user experiences.
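To give a feel for the serverless model described in the list above, here is a sketch of a queue-triggered function in Python. The `handler(event, context)` shape and the `Records`/`body` layout are modelled loosely on how AWS Lambda delivers batches of queue messages, but the event here is simplified and hand-built, and the `order_id` payload field is a hypothetical example. Consult the platform documentation for the exact event format.

```python
import json


def handler(event: dict, context: object) -> dict:
    """Entry point a serverless platform would invoke once per batch of messages."""
    processed = []
    for record in event.get("Records", []):
        order = json.loads(record["body"])  # message payload arrives as JSON text
        processed.append(order["order_id"])
    return {"processed": processed}


# Local invocation with a hand-built event; no cloud account is needed.
sample_event = {
    "Records": [
        {"body": json.dumps({"order_id": 7})},
        {"body": json.dumps({"order_id": 8})},
    ]
}
result = handler(sample_event, None)
print(result)
```

Because the function is an ordinary callable that takes an event and returns a result, it can be exercised locally with sample events before being deployed behind a real trigger.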

Skills Needed for Development

Developing event-driven software requires a diverse skill set that combines technical expertise, architectural understanding, and creative problem-solving. Here's a list of skills needed for successful event-driven software development:

- Programming Languages:

- Proficiency in languages such as Java, Python, JavaScript (on Node.js), or Go, with a focus on asynchronous programming and event handling.

- Asynchronous Programming:

- Understanding of asynchronous concepts, callbacks, promises, and async/await to manage non-blocking operations effectively.

- Event-Driven Architecture:

- Deep understanding of event-driven architecture principles, including event sourcing, publish/subscribe patterns, and event handling.

- Message Brokers and Middleware:

- Familiarity with tools like Apache Kafka, RabbitMQ, or AWS SQS for event communication, queuing, and distribution.

- Serverless Computing:

- Experience with serverless platforms like AWS Lambda, Azure Functions, or Google Cloud Functions for executing code in response to events.

- Event Processing Frameworks:

- Proficiency in using frameworks like Apache Flink, Apache Storm, or Spark Streaming for real-time event processing and data streaming.

- Microservices Architecture:

- Understanding of microservices principles, including service isolation, independent deployment, and inter-service communication.

- API Design and Integration:

- Skill in designing RESTful APIs for event communication and integration between components, including authentication and authorization.

- Troubleshooting and Debugging:

- Ability to trace event flows, diagnose issues in asynchronous code, and resolve bottlenecks in event-driven systems.

- Scalability and Performance Optimization:

- Knowledge of techniques for scaling event-driven systems, optimizing performance, and managing resource usage.

- Complex Event Processing (CEP):

- Understanding of CEP concepts and tools like Esper or Drools for detecting patterns and correlations in event streams.

- Cloud Platforms:

- Familiarity with cloud platforms like AWS, Azure, or Google Cloud for deploying event-driven applications and leveraging cloud services.

- Distributed Systems:

- Proficiency in distributed computing concepts, including message distribution, data consistency, and fault tolerance.

- Monitoring and Observability:

- Skill in using monitoring tools like Prometheus, Grafana, or New Relic to gain insights into event interactions and system performance.

- Creative Problem-Solving:

- Ability to creatively design interactions, handle complex event flows, and devise solutions for unique event-driven challenges.

- Collaboration and Communication:

- Strong teamwork and communication skills to coordinate with team members, design event specifications, and align component interactions.

Event-driven software development demands a fusion of technical mastery and creative innovation, enabling developers to craft applications that elegantly respond to real-world events, providing dynamic and seamless user experiences.

Event-driven software development finds its prime application in various domains where real-time responsiveness, dynamic interactions, and adaptability are crucial. Here are the areas best suited for this development approach:

- IoT (Internet of Things):

- Event-driven architecture is essential for IoT applications where sensors and devices generate a continuous stream of data and events. It enables real-time monitoring, control, and automation in smart homes, industrial processes, and environmental monitoring systems.

- Gaming and Entertainment:

- In the gaming industry, event-driven development creates immersive experiences by responding to player actions in real time. Dynamic graphics, interactive gameplay, and multiplayer interactions heavily rely on event-driven architecture.

- Financial Services:

- Event-driven applications are essential for low-latency trading platforms, fraud detection systems, and real-time risk assessment tools. They allow financial institutions to respond swiftly to market changes and emerging opportunities.

- E-Commerce and Retail:

- Event-driven systems enhance customer experiences by providing real-time inventory updates, personalized recommendations, and timely order processing. They allow retailers to adapt to demand fluctuations and changing customer preferences.

- Telecommunications:

- Event-driven architecture is vital for managing network events, call routing, and real-time customer interactions in telecommunications systems. It ensures seamless connectivity and effective communication services.

- Healthcare and Life Sciences:

- In healthcare, event-driven systems facilitate real-time patient monitoring, alerts for critical conditions, and coordination between medical devices. They contribute to better patient care and timely interventions.

- Supply Chain and Logistics:

- Event-driven applications optimize supply chain operations by providing real-time tracking of shipments, inventory management, and alerts for delays or disruptions.

- Energy Management and Utilities:

- Event-driven systems play a role in smart grids, energy distribution, and utility management. They help in real-time monitoring of energy consumption and respond to demand fluctuations.

- Transportation and Fleet Management:

- Event-driven architecture is beneficial for tracking vehicles, managing routes, and providing real-time updates to drivers and passengers in transportation and logistics systems.

- Emergency Response and Public Safety:

- Event-driven applications enable real-time coordination and communication during emergencies, such as natural disasters or public safety incidents.

- Social Media and Content Delivery:

- Social media platforms leverage event-driven development to provide real-time updates, notifications, and interactive user experiences.

- Smart Cities and Urban Planning:

- Event-driven systems contribute to smart city initiatives by enabling real-time data collection, traffic management, and resource optimization.

In these domains and beyond, event-driven software development shines as a solution for crafting applications that resonate with the pace and dynamism of the modern world. It allows businesses and industries to adapt, respond, and innovate in real time, creating seamless and engaging experiences for users.

The evolution of event-driven software development mirrors the unceasing progress of technology itself, shaping a world where applications resonate with the rhythms of our lives. From the inception of GUIs to the boundless potential of AI-driven event analysis and edge computing, this paradigm has transcended its origins to become a cornerstone of modern software architecture. As we stand at the crossroads of innovation, the journey of event-driven development continues, promising a future where software seamlessly melds with the pulse of existence. From pixels to pulse, event-driven software development is a symphony of technology and human ingenuity, composing a narrative that responds and adapts to the ever-changing world.

Mastering Customer Engagement: The Synergy of AI, ML, Cloud, Data Analysis, and Blockchain

In a world driven by digital innovation, businesses are presented with a unique opportunity to revolutionize customer experiences. The amalgamation of Artificial Intelligence, Machine Learning, Cloud Technology, Data Analysis, and Blockchain has opened the door to crafting personalized, captivating interactions. This article unveils how these technologies converge to redefine customer engagement and drive tailored advertisements, setting new standards for customer satisfaction.

A fusion of cutting-edge technologies

In a rapidly changing digital age, businesses have an unprecedented opportunity to reshape the customer experience. The convergence of artificial intelligence (AI), machine learning (ML), cloud technology, data analytics, and blockchain has opened the door to deeply personal and engaging interactions. This article delves into the symphony woven by these technologies, which is transforming customer engagement and opening new models for tailored advertising, thereby setting a new standard for customer satisfaction.

Create an unforgettable customer journey: Highlighting the power of AI, ML, cloud innovation, data analytics, and blockchain

In the ever-changing digital landscape, businesses have an unprecedented chance to deliver unique and deeply engaging experiences. The combination of cutting-edge technologies, including AI, ML, cloud innovation, data analytics, and blockchain, has opened up a wide range of possibilities for creating ads that resonate deeply with people. In this article, we delve into the nuances of each of these technologies and the synergy between them as they redefine how businesses interact with their customers.

The enchantment of AI and ML: Intricate weaving with precision

At the heart of the customer experience revolution, the fusion of artificial intelligence and machine learning is laying the foundations for a new era. This synergy allows companies to tap into an ocean of customer data and turn it into valuable insights. By analyzing purchase history, browsing patterns, and preferences, AI and ML algorithms act as oracles that can predict customer behavior with considerable accuracy.

Imagine a scenario where an e-commerce platform uses AI-based algorithms to discern each customer's style preferences. The same algorithms then generate recommendations that closely match those preferences, resulting in a shopping experience that is out of the ordinary. The approach pairs concise, sharply targeted product suggestions with richer narrative content, striking a delicate balance between clear information and depth.

Unleash the potential of cloud power: Symphony of cohesion

The cloud has become a major player in driving exceptional customer experiences. By equipping businesses with the agility and scalability to handle massive streams of data in real time, the cloud enables instant feedback and personalized services for each customer. Like a master conductor, it carries every interaction with transparency and consistency. With its ability to store and analyze large volumes of data, the cloud promotes uniformity across multiple channels. Customers experience consistent service whether they communicate through the website, the mobile app, or social media.

The art of decoding data: Navigate uncharted waters

Data analytics acts like a compass, guiding companies to understand customer preferences and perfect their offerings. In a data-rich environment, the proper application of analytical tools becomes necessary to unravel complex patterns. From extracting sentiment from customer reviews to predicting trends based on historical data, data analytics is becoming the cornerstone of customer-centric strategies.

Take the example of a retail giant. By carefully tracking purchasing trends, the retailer generates dynamic ads that adapt in real time to shifts in customer behavior. This personalized promotional tapestry creates an immersive experience that leaves a lasting impression in the customer's mind.
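One way to picture ads that adapt to shifting purchase trends is an exponentially weighted score per product category, updated with each new batch of purchases. The sketch below is an illustration under assumptions, not a description of any retailer's system: the smoothing factor `alpha`, the category names, and the functions `update_trend_scores` and `pick_ad_category` are hypothetical.

```python
def update_trend_scores(scores, purchases, alpha=0.5):
    """Blend the latest purchase counts into exponentially weighted trend scores.

    A higher alpha makes the scores react faster to recent behavior.
    """
    categories = set(scores) | set(purchases)
    return {
        category: alpha * purchases.get(category, 0)
        + (1 - alpha) * scores.get(category, 0.0)
        for category in categories
    }


def pick_ad_category(scores):
    """Return the category with the highest trend score (ties go alphabetically)."""
    if not scores:
        return None
    return max(sorted(scores), key=lambda category: scores[category])


# Hypothetical purchase counts arriving in three batches.
batches = [
    {"boots": 10, "sandals": 2},
    {"boots": 4, "sandals": 8},
    {"sandals": 12},
]
scores = {}
for batch in batches:
    scores = update_trend_scores(scores, batch)
    print(pick_ad_category(scores), scores)
```

Because older batches are discounted geometrically, the featured category follows recent behavior without overreacting to a single unusual batch.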

Stronghold of Security and Transparency: The blockchain conundrum

Welcome to the field of blockchain technology, where security and transparency rule. This cryptographic engine helps verify the authenticity of customer data, instilling trust in every interaction. Because it is decentralized, blockchain gives customers greater control over their personal information, easing privacy concerns.

In the financial sector, blockchain is transforming the customer experience by accelerating secure transactions. Its distributed network streamlines the purchase journey while safeguarding sensitive data, creating an environment of openness and secure interaction.
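The data-integrity claim can be illustrated with a toy hash chain, the core data structure behind a blockchain. This is a deliberately simplified sketch: it shows only tamper evidence through linked SHA-256 hashes and leaves out consensus, decentralization, and digital signatures entirely. The record fields and the functions `block_hash`, `append_block`, and `is_valid` are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a record, linking it to the hash of the block before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )
    return chain


def is_valid(chain):
    """Recompute every hash and link; any edited block breaks the chain."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical transaction records.
chain = []
append_block(chain, {"customer": "c-001", "amount": 25})
append_block(chain, {"customer": "c-002", "amount": 40})
print(is_valid(chain))          # chain is intact
chain[0]["data"]["amount"] = 999
print(is_valid(chain))          # the edit is detected
```

Changing any earlier record alters its hash, which no longer matches the stored value, and this is what makes quiet after-the-fact edits detectable.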

A Symphony of Technologies: Create customer engagement

When AI, ML, cloud technology, data analytics, and blockchain come together in a delicate symphony, they create experiences that leave an indelible mark on the customer's mind. This combination produces dynamic advertisements that respond to individual preferences while coordinating a complex ballet of communications across channels. The convergence of these technologies marks a historic increase in customer engagement, driven by ingenuity and by an insatiable desire to understand, predict, and meet customers' wishes.

By embracing this convergence, companies are enabling a range of exceptional experiences that create lasting connections with customers in the digital age. In a landscape shaped by constant technological advances, the fusion of AI, ML, cloud, data analytics, and blockchain has opened a new chapter in customer engagement. In their relentless pursuit of harmonious experiences, these technologies serve as strongholds of innovation. This combination, which brings together personalization, intuitive insights, transparent interactions, and data integrity, forms a mosaic that fits many of today's customers' needs.

From each personalized message to the intricate handling of encrypted data, this fusion embodies more than just technological advancement; it demonstrates a commitment to deepening customer understanding, service, and delight in ways never before possible. The journey to creating extraordinary customer experiences continues, guided by the dynamic symphony of these extraordinary technologies.

Unleash the confluence: Customer-centric change

Imagine following the data downstream: an adventure that begins with data collection, continues with AI and ML analysis, evolves into personalization, advances through advertising campaigns, is secured by blockchain, and reaches its pinnacle in customer interaction. The journey is like a river flowing through varied terrain, transforming customer interaction into an art form that technology helps coordinate. Embark on an exploration of the complex universe of modern customer interactions:

1. Data collection:

The adventure begins with collecting data from countless touchpoints – websites, apps, and social media. In this symphony of information, data becomes the foundation for creating deeply personalized experiences.

2. AI and ML analysis:

Then the baton is passed to AI and ML algorithms. These algorithms decode the collected data, uncovering complex patterns, tendencies, and trends. This interpretation paves the way for predicting customer actions and preferences with remarkable accuracy.

3. Personalization:

With predictive information in tow, the cloud deploys its agile capabilities. This vast expanse of computing resources enables the creation of experiences tailored to each customer, a canvas where personalization shows in every pixel.

4. Create an ad campaign:

Powered by insights from data analytics, dynamic ads take shape. These ads evolve and adapt in sync with changing customer behavior, capturing attention and driving engagement.

5. Blockchain safeguards:

To ensure the sanctity of these personalized encounters, the baton passes to blockchain. This cryptographic safeguard ensures data integrity, gives customers control over personal information, and leaves a trustworthy record.

6. Impactful interaction:

Through a complex ballet of AI, ML, cloud, data analytics, and blockchain, customers encounter ads tailored to their interests. This symphony unfolds as a burst of tailored communication.

7. Customer Satisfaction:

With personalized ads and smooth interactions, satisfaction increases. These positive experiences build loyalty, cultivating relationships marked by lasting commitment and advocacy.

8. Continuous improvement:

The curtain does not fall once customers are satisfied; the cycle begins again with the promise of growth. Armed with the ability to adapt, these technologies continuously collect new data, refine predictions, and improve the customer experience, fueling a steady stream of innovation.

In this intricate dance, AI, ML, cloud technology, data analytics, and blockchain merge into one symphony, creating a wealth of personalized information and communication. Together, they redefine customer engagement, staging a work that resonates with each customer's unique beat and infusing new life into the digital age.

The pinnacle of the trade: When technology synergy drives sales

The advent of AI, ML, cloud technology, data analytics, and blockchain has heralded not just an evolution but a revolution in sales. This harmonious convergence has profound implications for commerce. Here are the highlights:

1. Personalized recommendations:

Using the trained eye of AI and ML, companies make tailored product recommendations. In this precision ballet, conversions skyrocket as customers discover offers that match their preferences, ushering in a veritable renaissance in sales.

2. High level of customer interaction:

Dynamic and personalized ads create a surge in engagement. They grab customers' attention and draw them into exchanges that can turn simple interactions into sales.

3. Increase customer satisfaction:

Personalized experiences make customers feel understood. When customers encounter personalized ads and recommendations, their interactions are gratifying, and they are more likely to become repeat shoppers and brand ambassadors.

4. Real-time adaptability:

The cloud, the bastion of adaptability, ushers in an era of real-time strategy adjustments tailored to customer behavior. This flexibility ensures that products and campaigns remain relevant to customer preferences and supports sales approaches that resonate.

5. Trust and transparency:

The security and transparency of the blockchain foster trust among customers. This assurance encourages transactions, creating fertile ground for conversions as customers feel confident completing their purchases.

6. Detailed insights from the data deluge:

Data analytics becomes the backbone of insights, bringing businesses to the heart of customer behavior and preferences. This guidance produces precisely crafted strategies that target customer segments with surgical precision, thereby optimizing offers.

7. Forge long-term bonds:

As customers are captivated by special interactions and personalized experiences, the tapestry of loyalty is woven. This bond, nurtured by these symphonic encounters, grows into lasting relationships that strengthen retention and loyalty.

8. Seize cross-selling and upselling opportunities:

These technologies open up many opportunities. Leveraging customer insights, companies identify cross-sell and up-sell opportunities and present complementary products or premium services seamlessly.

Essentially, the harmony created by AI, ML, cloud technology, data analytics, and blockchain has the potential to make sales cohesive, personalized, trustworthy, and customer-centered. This range of experiences not only drives immediate sales but also lays the groundwork for long-term relationships and sustained growth. With every note played, these technologies compose a sonata that will not only change the present but shape the future of companies.

Echoes of Evolution: Signs of changing customer behavior

The march of AI, ML, cloud, data analytics, and blockchain is writing new verses in the annals of customer behavior. An explosion of engagement, personalization, trust, and satisfaction is unleashed, reshaping the contours of how customers interact and respond. The symphony of transformation resounds in the following movements:

1. Enhance interaction:

Surrounded by engaging personalized experiences, customers are drawn into a whirlwind of interactions. This immersion encourages them to linger, enjoying interactive moments that feel relevant.

2. Increase the frequency of interaction:

By stimulating curiosity, the pull of personalized interactions brings customers back more often. Drawn by content that matches their inclinations, they embark on a journey of discovery and gain greater exposure to the brand.

3. Journey to a quick purchase decision:

The journey to a purchase decision becomes more convenient and streamlined. With recommendations powered by AI and ML, customers can quickly identify products that reflect their preferences, shortening the decision-making journey.

4. Foster a deeper brand connection:

Bursting with resonance, the experience creates an emotional connection between the customer and the brand. This connection resonates through the corridors of time, turning into unwavering loyalty and devotion.

5. Build customer loyalty:

By delivering satisfaction, the personalized experience ignites the fire of loyalty. This loyalty forms a bond between the customer and the brand, making the search for competitors seem unnecessary.

6. Cultivate trust and transparency:

Within the security that blockchain provides, customers feel safer sharing their information. This genuine trust opens a wide avenue for interactions, allowing companies to understand customer preferences more accurately.

7. Pioneering new product relationships:

By sparking curiosity, dynamic advertising reveals previously unexplored paths. This exposure encourages customers to discover new offers, enriching their lives with new dimensions.

8. Navigating the wave of feedback:

Rich in information, data analytics deciphers the complexities of customer psychology and preferences. This feedback loop serves as a common thread, helping companies continually refine their strategies and adapt to changing behaviors.

9. Cultivate the desire for personalization:

Personalized interactions raise expectations. Customers come to anticipate tailored experiences across touchpoints, raising their standards for engagement and personalization.

10. Effects of word of mouth:

Filled with enthusiasm, satisfied customers spread their joy to their friends and family. This tidal wave of approval weaves a mosaic of brand reputation, a compelling call to attract new customers.

The combination of these technologies is creating a huge shift in customer behavior. This explosion of engagement, personalization, trust, and satisfaction transforms customers into proactive discoverers, engaged participants, and loyal followers, creating a landscape that adapts and responds to a symphony of AI, ML, cloud, data analytics, and blockchain.

Embark on your transformation journey: Industries reshaped by a symphony of technology

Many industries and products are harnessing a combination of AI, ML, cloud, data analytics, and blockchain to transform customer experiences and reshape behavior. Consider the following snapshots:

1. E-commerce enhancement (e.g. Amazon, Netflix):

This area resonates with personalization. AI-powered recommendations guide customers to the right content and products, opening a journey rich in relevant offers.

2. Captivating stories from social media (e.g. Facebook, Instagram):

A wide range of interactions flourishes here. AI curates feeds and ads, infusing personalized dynamism into the experience and inviting customers into an engaging space.

3. Digital Finance Duo (e.g. PayPal, Revolut):